Research · Open access

Revolutionizing breast ultrasound diagnostics with EfficientNet-B7 and Explainable AI

BMC Medical Imaging volume 24, Article number: 230 (2024)

Abstract

Breast cancer is a leading cause of mortality among women globally, and precise classification of breast ultrasound images is essential for early diagnosis and treatment. Conventional CNN architectures such as VGG, ResNet, and DenseNet, though effective, often struggle with class imbalance and subtle texture variations, which reduces accuracy for minority classes such as malignant tumors. To address these issues, we propose a methodology that combines EfficientNet-B7, a scalable CNN architecture, with data augmentation that increases minority-class representation and improves model robustness. We fine-tune EfficientNet-B7 on the BUSI dataset and apply RandomHorizontalFlip, RandomRotation, and ColorJitter to balance the dataset. Training uses early stopping to prevent overfitting. Additionally, we integrate Explainable AI (XAI) techniques, such as Grad-CAM, to make the model's predictions interpretable, providing visual and quantitative insights into the features and regions of ultrasound images that influence classification outcomes. Our model achieves a classification accuracy of 99.14%, outperforming the existing CNN-based approaches compared in this study. The XAI analysis clarifies the model's decision-making process, which increases its reliability and facilitates clinical adoption. This framework offers a robust and interpretable tool for the early detection and diagnosis of breast cancer, advancing automated diagnostic systems and supporting clinical decision-making.

Introduction

Breast cancer remains a leading cause of cancer-related deaths among women globally, despite advancements in medical technology and screening techniques. Early and accurate detection is paramount for improving prognosis and treatment outcomes. Among imaging modalities, breast ultrasound is widely valued for being non-invasive, real-time, and safe for repeated use, because it involves no ionizing radiation. Ultrasound is especially useful for differentiating between solid and cystic lesions and is often employed as an adjunct to mammography, particularly in dense breast tissue, where mammography's sensitivity is reduced. Automated classification systems based on conventional CNN architectures such as VGG, ResNet, and DenseNet have shown promise but are limited by class imbalance and by the subtlety of textural variations in ultrasound images. These challenges can reduce diagnostic accuracy, especially for critical minority classes such as malignant tumors [1]. In response, our study adopts EfficientNet-B7, a CNN architecture designed to balance computational efficiency and accuracy by scaling the depth, width, and resolution of the network together.

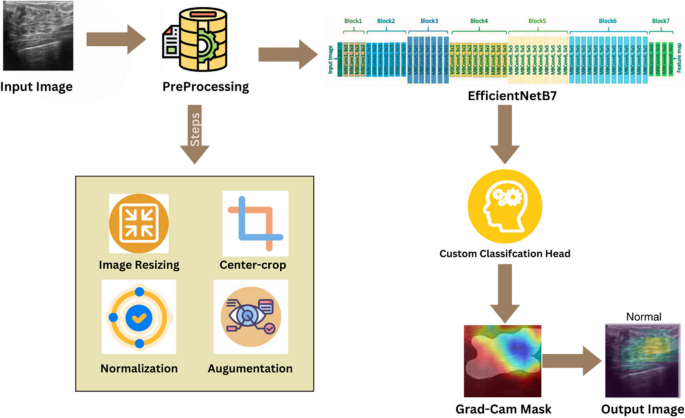

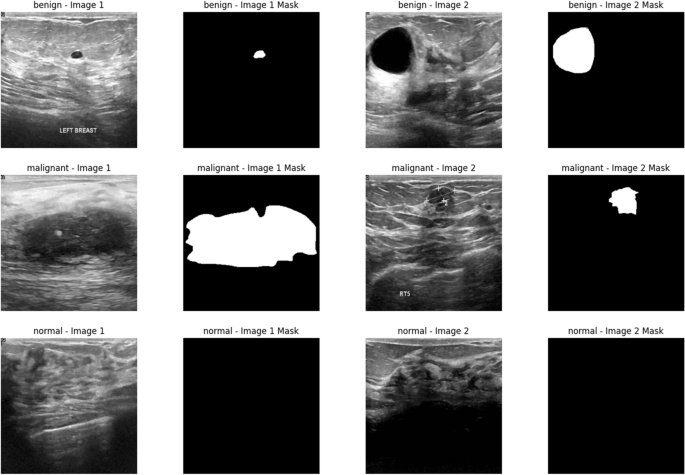

Moreover, integrating Explainable AI (XAI) techniques such as Gradient-weighted Class Activation Mapping (Grad-CAM) significantly improves model transparency. XAI techniques allow clinicians and researchers to visualize which features in the ultrasound images influence the model's predictions. This transparency is crucial for clinical acceptance: it helps verify that the model's decisions rest on relevant clinical features rather than on artifacts or biases in the data [2]. By incorporating these methods, our study aims not only to improve the accuracy of breast ultrasound classification but also to make AI-driven tools more interpretable and trustworthy for clinical use. Fig. 1 shows sample segmented images from the Breast Ultrasound Images Dataset (BUSI), with examples of the benign, malignant, and normal categories and their corresponding masks.

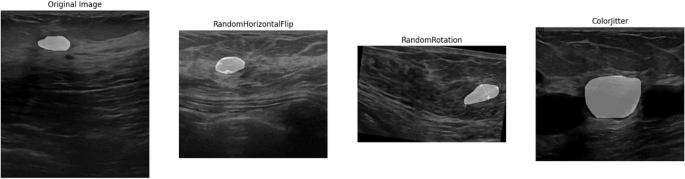

Diagnosing breast cancer through ultrasound involves analyzing lesion characteristics such as shape, margins, and echogenicity. However, the subjective nature of this analysis can lead to variability, highlighting the need for automated classification systems to improve consistency and accuracy. Convolutional Neural Networks (CNNs) like VGG, ResNet, and DenseNet have been successful in image classification but face challenges with the noise and variability in ultrasound images, as well as class imbalance, where malignant cases are often underrepresented [3]. To address these issues, our study uses advanced data augmentation techniques (e.g., RandomHorizontalFlip, RandomRotation, ColorJitter) to enhance dataset diversity and improve model generalization. We propose using EfficientNet, a state-of-the-art CNN known for its efficiency and scalability, which balances depth, width, and resolution, achieving high performance with fewer parameters. Fine-tuning EfficientNet-B7 on the BUSI dataset helps it learn specific features from breast ultrasound images, improving its ability to distinguish between benign, malignant, and normal cases. We also incorporate Explainable AI (XAI) methods like saliency maps and attention mechanisms to make the model’s predictions more transparent, helping clinicians validate and trust AI-driven diagnostics, which is crucial for reliable and interpretable medical tools [4, 5].

Motivation

The motivation behind this research stems from the critical need for accurate and reliable diagnostic tools in the early detection of breast cancer. Breast cancer is a major health concern, and timely diagnosis significantly improves the chances of successful treatment and survival. However, the variability and noise inherent in ultrasound images pose challenges for traditional classification methods, leading to the potential for misdiagnosis or delayed diagnosis. By enhancing the accuracy and robustness of automated classification systems, this research aims to support radiologists in making more informed decisions, ultimately improving patient outcomes [6]. The advancements in deep learning architectures, such as EfficientNet, and the availability of sophisticated data augmentation techniques provide a unique opportunity to address these challenges and develop more effective diagnostic tools.

This research paper contributes to the field of medical image classification by proposing an advanced model that leverages EfficientNet-B7 and targeted data augmentation techniques to improve the classification accuracy of breast ultrasound images. The key objectives of the research are as follows:

1. Utilizing EfficientNet-B7, known for its efficiency and scalability, to create a model that can accurately classify breast ultrasound images into benign, malignant, and normal categories.
2. Applying advanced data augmentation techniques to address class imbalances and improve the model's ability to classify minority classes, particularly malignant lesions.
3. Implementing early stopping criteria to prevent overfitting and ensure the model's generalizability to new, unseen data.

The research paper is organized as follows: Related Work reviews existing literature on breast ultrasound image classification and discusses various CNN architectures and their limitations. Methodology describes the dataset, preprocessing, data augmentation, model architecture, training, and evaluation metrics. Results and Discussion present experimental results, visualizations, and weight analysis, followed by a discussion. Conclusion summarizes key findings, highlights improvements, and suggests future work. The References section lists the cited works.

Related work

Traditional approaches to breast ultrasound image classification have relied heavily on manual feature extraction and classical machine learning algorithms, such as Support Vector Machines (SVM), k-Nearest Neighbors (k-NN), and Random Forests. These methods extract features such as texture, shape, and edges, which are then used to train classifiers. While they have had some success, they are limited by their reliance on time-consuming and error-prone manual feature extraction [7], and the inherent variability and noise in ultrasound images can degrade their performance. The advent of deep learning has transformed medical image analysis, with Convolutional Neural Networks (CNNs) becoming the leading architecture because they learn hierarchical features directly from raw images. Notable CNN architectures such as VGG, ResNet, and DenseNet have significantly outperformed traditional methods: VGG with its deep architecture, ResNet with its residual learning, and DenseNet with its feature reuse have all shown superior accuracy and robustness in image classification [8]. However, challenges remain. Medical datasets are often class-imbalanced, with malignant cases underrepresented, which biases models toward poor performance on minority classes. In addition, noise and variability in ultrasound images complicate learning, and deep learning models are computationally intensive, requiring substantial resources and time [9]. Recent work addresses these challenges with data augmentation (e.g., RandomHorizontalFlip, RandomRotation, and ColorJitter) and efficient architectures such as EfficientNet, which balances network depth, width, and resolution to improve performance with fewer parameters. Fine-tuning pre-trained models and using early stopping to prevent overfitting further improve performance [10].
Explainable AI (XAI) methods like Grad-CAM are also being integrated to provide visual explanations of model predictions, helping clinicians understand which parts of the image influence diagnostic decisions, thereby improving trust and clinical applicability. Table 1 summarizes studies evaluating deep learning and traditional methods for breast ultrasound image classification.

The related work section traces the development of breast ultrasound image classification, starting with traditional methods like SVM and k-NN that rely on manually extracting features. It then moves to advanced techniques using deep learning models such as CNNs and EfficientNet. It discusses challenges like class imbalances and image variability and highlights improvements like data augmentation, compound scaling in EfficientNet, and Explainable AI (XAI) methods such as Grad-CAM, which boost model performance, robustness, and clarity. Recent studies show that these modern approaches are effective in enhancing breast cancer diagnosis.

Our study introduces a new approach to early breast disease detection based on deep learning classification of ultrasound images. By combining EfficientNet-B7 with XAI techniques available as of 2023, our approach offers an up-to-date solution for automating disease diagnosis from ultrasound images. EfficientNet-B7 is efficient and scalable, making it better suited for detailed medical image analysis than older models such as VGG or ResNet. We also use data augmentation to address class imbalance, improving the model's ability to handle different imaging conditions and supporting early disease detection. Additionally, Grad-CAM provides visual explanations of the model's decisions, helping clinicians see which features of the ultrasound images matter most for diagnosing malignancies. This transparency distinguishes our approach and enhances its practical value and trustworthiness in clinical settings.

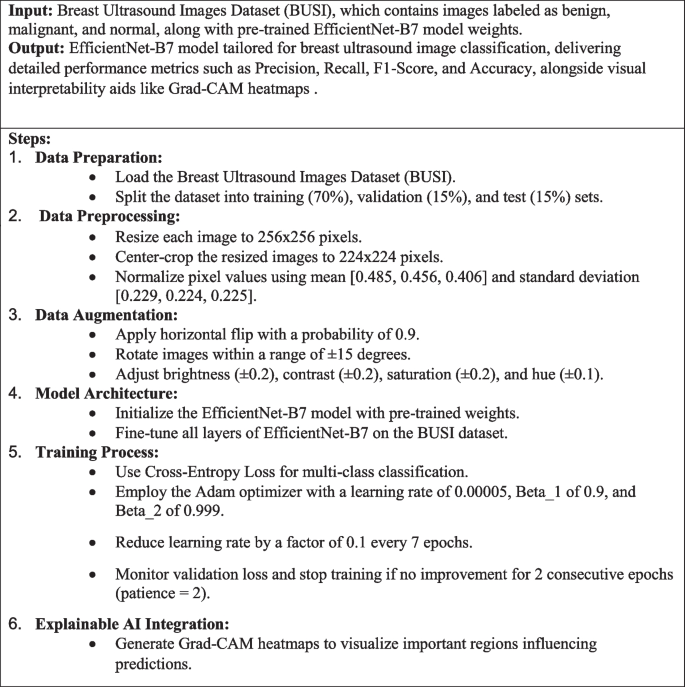

Methodology

This study uses advanced deep learning techniques to classify breast ultrasound images into benign, malignant, and normal categories with the help of the EfficientNet-B7 model. To improve accuracy, especially for less common cases, the images are enhanced through techniques like random flipping, rotation, and color adjustments. The entire model is fine-tuned to suit the specifics of ultrasound images, and training is carefully monitored to stop before overfitting occurs. Unlike traditional methods that rely on manual or basic selection of important image frames, this approach uses deep learning to automatically focus on the most critical areas for diagnosis. Additionally, Explainable AI techniques, such as Grad-CAM, are used to visually confirm the model’s decision-making process, making the results more understandable and trustworthy. Figure 2 shows the overview of the proposed methodology leveraging EfficientNet-B7 for breast ultrasound image classification.

Dataset description

This study uses the Breast Ultrasound Images Dataset (Dataset_BUSI_with_GT), which contains a total of 780 images divided into three categories: benign, malignant, and normal. Each image is paired with a mask image that delineates the regions of interest, which supports accurate lesion localization and classification. The dataset was partitioned into training (70%), validation (15%), and test (15%) sets, ensuring a balanced representation of each class across all subsets. During preprocessing, images were resized to 256 × 256 pixels and then center-cropped to 224 × 224 pixels to standardize the input size for the EfficientNet-B7 model. The dataset was also augmented, particularly for the minority classes (malignant and normal), with random horizontal flips, rotations, and color jittering to improve robustness to variations in image presentation. This preparation exposes the model to a diverse and representative set of images, promoting better generalization in clinical applications. Table 2 shows the number of images and the augmentation techniques applied to each category. Figure 3 shows sample images from the BUSI dataset for the benign, malignant, and normal categories along with their corresponding masks.
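A class-stratified split is one common way to obtain the per-class balance described above. The following is a minimal sketch under that assumption; the paper does not say how its split was implemented, and the function name `stratified_split` and the fixed seed are illustrative choices, not the authors' code.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=42):
    """Split sample indices into train/val/test while preserving class ratios.

    `labels` holds one class name per image. Returns one list of indices per
    fraction. Rounding leftovers within each class go to the last subset.
    """
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    rng = random.Random(seed)              # fixed seed -> reproducible split
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    splits = [[] for _ in fractions]
    for label in sorted(by_class):         # sorted for deterministic order
        indices = by_class[label][:]
        rng.shuffle(indices)
        n, start = len(indices), 0
        for k, frac in enumerate(fractions[:-1]):
            count = round(n * frac)
            splits[k].extend(indices[start:start + count])
            start += count
        splits[-1].extend(indices[start:])  # remainder goes to the last split
    return splits
```

For example, 100 benign, 40 malignant, and 20 normal labels yield splits of 112, 24, and 24 images, each with the same 70/15/15 proportion inside every class.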

Data preprocessing

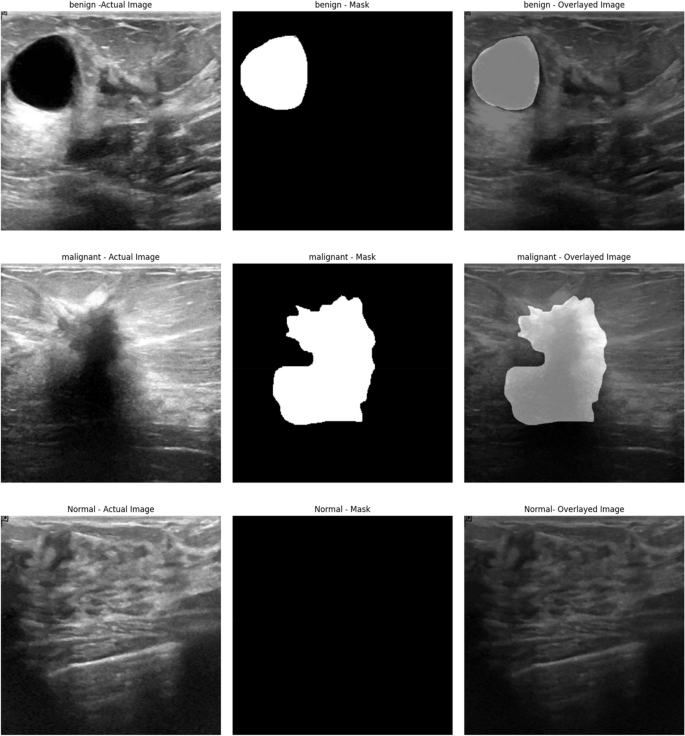

In preparing the breast ultrasound images for analysis, each image was resized to 256 × 256 pixels and then cropped to 224 × 224 pixels to focus on the central area where lesions are usually found. To improve image quality and reduce noise, we applied Gaussian filtering, which gently smooths the images, and Sobel filtering to highlight edges. We also enhanced contrast through histogram equalization, making important features more visible. To address class imbalance, we augmented the images by random flipping, rotation, and color adjustment, particularly for the less common malignant and normal categories. Finally, pixel values were normalized to match the requirements of the pre-trained EfficientNet-B7 model, ensuring consistent, high-quality input for classification. These preprocessing steps were designed to enhance model robustness and improve classification accuracy. Eq. 1 blurs the image \(I\) by convolving it with a Gaussian kernel \(G_\sigma\), where \(\sigma\) controls the spread of the blur. Eq. 2 computes the gradient \(E\) of the image \(I\), which highlights edges and intensity transitions. Eq. 3 applies histogram equalization to \(I\) to enhance contrast by redistributing pixel intensities. Figure 4 shows examples of breast ultrasound images with (a) the actual image, (b) the mask, and (c) the overlaid image for the benign, malignant, and normal categories.
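The three filtering operations can be sketched in plain Python on a grayscale image stored as a list of rows of 8-bit integers. These are the textbook forms of a Gaussian blur, a Sobel gradient magnitude, and histogram equalization; the kernel size, \(\sigma\), border handling, and how the outputs are combined are assumptions, since the paper does not specify them. The loops are for illustration only; a real pipeline would use an optimized image library.

```python
import math


def gaussian_kernel(size=5, sigma=1.0):
    """Normalized 2-D Gaussian kernel G_sigma (size should be odd)."""
    half = size // 2
    k = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma))
          for x in range(-half, half + 1)]
         for y in range(-half, half + 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]


def filter2d(image, kernel):
    """Apply a kernel by cross-correlation with replicate padding ('same' size).

    For the symmetric Gaussian kernel this equals convolution; for Sobel it
    only flips the sign of the response, which the magnitude ignores.
    """
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oy, ox = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for m in range(kh):
                for n in range(kw):
                    y = min(max(i + m - oy, 0), h - 1)   # clamp at the border
                    x = min(max(j + n - ox, 0), w - 1)
                    acc += image[y][x] * kernel[m][n]
            out[i][j] = acc
    return out


SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(image):
    """Gradient magnitude E = sqrt(Gx^2 + Gy^2) from the two Sobel responses."""
    gx = filter2d(image, SOBEL_X)
    gy = filter2d(image, SOBEL_Y)
    return [[math.hypot(a, b) for a, b in zip(rx, ry)] for rx, ry in zip(gx, gy)]


def equalize_histogram(image, levels=256):
    """Map each intensity through the normalized cumulative histogram."""
    flat = [p for row in image for p in row]
    hist = [0] * levels
    for p in flat:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    n = len(flat)
    if n == cdf_min:                      # constant image: nothing to equalize
        return [row[:] for row in image]
    lut = [round((c - cdf_min) / (n - cdf_min) * (levels - 1)) for c in cdf]
    return [[lut[p] for p in row] for row in image]
```

For instance, equalizing the 2 × 2 image `[[0, 50], [100, 200]]` spreads its four intensities evenly over the full range, giving `[[0, 85], [170, 255]]`.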

In this study, each ultrasound image was first resized to 256 × 256 pixels to ensure a consistent input size across the dataset and then center-cropped to 224 × 224 pixels to focus on the central area, which typically contains the lesions of interest. This process (Eqs. 4 and 5) standardizes the input for the EfficientNet-B7 model while preserving critical features. To address class imbalance, particularly for the less common malignant and normal classes, we applied several data augmentation techniques: horizontal flipping with a probability of 0.9 (Eq. 6) and random rotations within ± 15 degrees (Eq. 7), which simulate different orientations and help the model handle varied ultrasound scan conditions. We also adjusted the brightness, contrast, saturation, and hue of the images (Eqs. 8–11) to simulate different lighting conditions and color variations, making the model more robust. These augmentations expose the model to more varied training images, helping it learn features that generalize. Moreover, Explainable AI techniques such as Grad-CAM provided visual explanations for the model's decisions, helping clinicians understand which areas of the image influenced the classification. Together, these methods improve the model's accuracy and reliability, making it a valuable tool for improving diagnostic outcomes in clinical settings. Table 3 outlines the parameters for the augmentation techniques, and Fig. 5 shows their effect on a medical ultrasound image.

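Here, the flip probability is \(p = 0.9\) (Eq. 6), the brightness factor is \(\beta \in [0.8, 1.2]\) (Eq. 8), the contrast factor is \(c \in [0.8, 1.2]\) (Eq. 9), the saturation factor is \(s \in [0.8, 1.2]\) (Eq. 10), and the hue shift is \(h \in [-0.1, 0.1]\) (Eq. 11).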
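These settings map naturally onto torchvision's `RandomHorizontalFlip(p=0.9)`, `RandomRotation(15)`, and `ColorJitter(brightness=0.2, contrast=0.2, saturation=0.2, hue=0.1)`, which sample factors from the same ranges; that these were the exact calls used is an assumption, not something the text confirms. To show the arithmetic without depending on torchvision, the sketch below computes the 256 → 224 center-crop box, samples one set of augmentation parameters, and applies the color adjustments to a single RGB pixel in [0, 1]. It applies the color operations in a fixed order and takes the contrast mean as an argument, whereas torchvision's `ColorJitter` shuffles the order of its four operations and computes the mean from the image. Rotation is only sampled here, not applied. All function names are hypothetical.

```python
import colorsys
import random


def center_crop_box(size=256, crop=224):
    """(top, left, bottom, right) of a centered square crop; bottom/right are exclusive."""
    offset = (size - crop) // 2
    return offset, offset, offset + crop, offset + crop


def hflip(image):
    """Mirror each row of an image stored as a list of rows."""
    return [row[::-1] for row in image]


def sample_augmentation(rng, p_flip=0.9, max_degrees=15.0, brightness=0.2,
                        contrast=0.2, saturation=0.2, hue=0.1):
    """Draw one set of augmentation parameters from the ranges stated in the text."""
    return {
        "flip": rng.random() < p_flip,
        "angle": rng.uniform(-max_degrees, max_degrees),
        "brightness": rng.uniform(1 - brightness, 1 + brightness),
        "contrast": rng.uniform(1 - contrast, 1 + contrast),
        "saturation": rng.uniform(1 - saturation, 1 + saturation),
        "hue": rng.uniform(-hue, hue),
    }


def jitter_pixel(rgb, brightness, contrast, saturation, hue, image_mean):
    """Apply brightness, contrast, saturation, and hue changes to one RGB pixel in [0, 1]."""
    def clamp(v):
        return min(max(v, 0.0), 1.0)

    r, g, b = (clamp(brightness * v) for v in rgb)                      # scale intensity
    r, g, b = (clamp(contrast * v + (1 - contrast) * image_mean)        # blend with mean
               for v in (r, g, b))
    gray = 0.299 * r + 0.587 * g + 0.114 * b                            # luma for saturation
    r, g, b = (clamp(saturation * v + (1 - saturation) * gray) for v in (r, g, b))
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return colorsys.hsv_to_rgb((h + hue) % 1.0, s, v)                   # rotate hue
```

With a 256-pixel image and a 224-pixel crop, the box keeps rows and columns 16 through 239.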

The pixel values of the images were normalized using a per-channel mean of [0.485, 0.456, 0.406] and standard deviation of [0.229, 0.224, 0.225], the ImageNet statistics expected by the pre-trained EfficientNet-B7 model. Matching the input distribution to the pre-training conditions keeps training stable and efficient, facilitating convergence and improving model performance. Eq. 12 adjusts each pixel value by its channel mean and standard deviation so that the data distribution matches the model's training conditions. Eq. 13 applies batch normalization, which normalizes values using statistics computed over each mini-batch (in EfficientNet-B7, batch normalization layers act on intermediate feature maps) to stabilize and accelerate training.

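Eq. 12: \(I'_c = \dfrac{I_c - \mu_c}{\sigma_c}\) for each color channel \(c\), where \(\mu = [0.485, 0.456, 0.406]\) and \(\sigma = [0.229, 0.224, 0.225]\).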
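Input normalization (Eq. 12) and batch normalization (Eq. 13) differ in where their statistics come from: the former uses the fixed ImageNet mean and standard deviation, the latter statistics computed from each mini-batch. A minimal plain-Python sketch of both follows. It assumes pixels are first scaled from 0–255 to [0, 1], as torchvision's `ToTensor` does, and shows training-mode batch normalization for a single feature with the learnable scale \(\gamma\) and shift \(\beta\) left at their initial values of 1 and 0. Function names are illustrative.

```python
import math

IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def to_unit_range(pixel_8bit):
    """Scale an 8-bit RGB pixel to [0, 1]."""
    return tuple(v / 255.0 for v in pixel_8bit)


def normalize(pixel, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Per-channel (x - mu_c) / sigma_c with fixed dataset statistics (Eq. 12)."""
    return tuple((v - m) / s for v, m, s in zip(pixel, mean, std))


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch normalization of one feature across a mini-batch."""
    n = len(values)
    mu = sum(values) / n
    var = sum((v - mu) ** 2 for v in values) / n        # biased (1/n) batch variance
    return [gamma * (v - mu) / math.sqrt(var + eps) + beta for v in values]
```

A pixel equal to the ImageNet mean maps to (0, 0, 0), while a white pixel maps to roughly (2.25, 2.43, 2.64); batch normalization instead rescales whatever values the current batch contains to zero mean and unit variance.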

Proposed methodology

In the proposed methodology, the EfficientNet-B7 model is fine-tuned on the BUSI dataset to classify breast ultrasound images into benign, malignant, and normal categories, as shown in Algorithm 1. The methodology employs data augmentation techniques, including RandomHorizontalFlip, RandomRotation, and ColorJitter, specifically targeting minority classes to increase data diversity and model robustness. An early stopping mechanism is integrated to prevent overfitting and support generalization to new data.

Algorithm 1 EfficientNet-B7 based breast ultrasound image classification

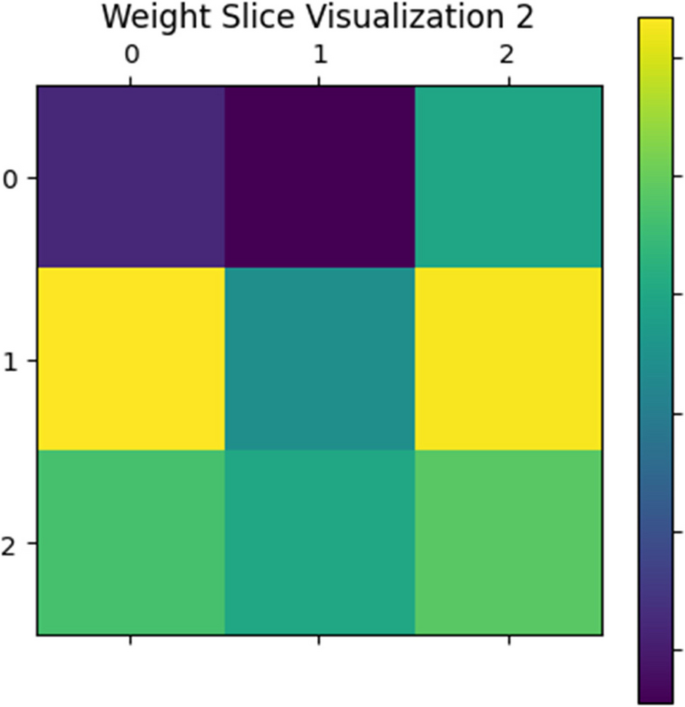

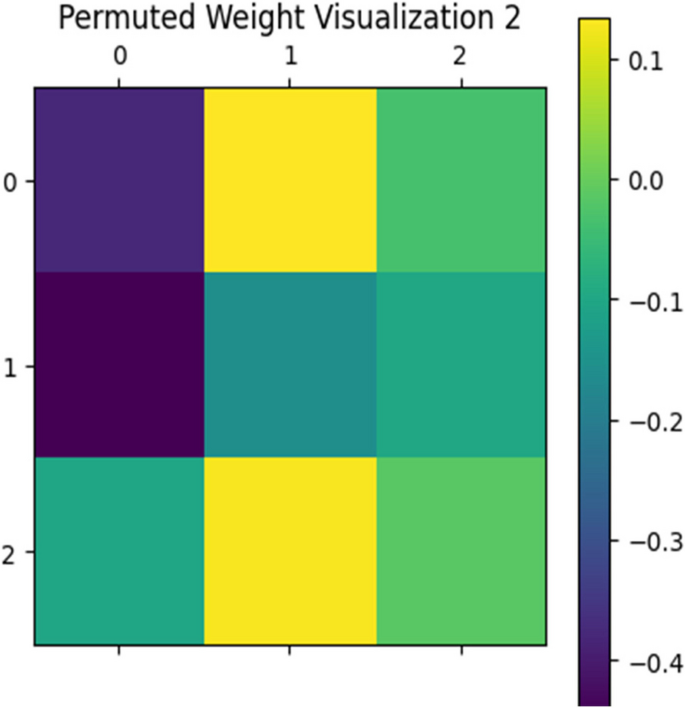

The core model in this study is EfficientNet-B7, a convolutional neural network known for its balance between accuracy and computational efficiency. EfficientNet-B7 belongs to the EfficientNet family, which scales network depth, width, and resolution together using a compound scaling method, yielding models that achieve higher accuracy with fewer parameters than traditional architectures [18]. This makes the model well suited to medical image analysis, where capturing intricate patterns in high-resolution images is crucial for distinguishing benign, malignant, and normal breast ultrasound images. Fig. 6 shows Weight Slice Visualization 2, and Fig. 7 shows Permuted Weight Visualization 2.

In the architecture equations, \(d\), \(w\), and \(r\) denote network depth, width, and resolution; \(I\), \(K\), and \(b\) denote the convolution input, kernel, and bias; and \(p\) denotes the dropout probability.
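The depth, width, and resolution symbols refer to EfficientNet's compound scaling rule. In the original EfficientNet formulation, a single coefficient \(\phi\) scales all three dimensions as \(d = \alpha^{\phi}\), \(w = \beta^{\phi}\), \(r = \gamma^{\phi}\), subject to \(\alpha \cdot \beta^2 \cdot \gamma^2 \approx 2\), so FLOPs grow by roughly \(2^{\phi}\); a grid search on the B0 baseline gave \(\alpha = 1.2\), \(\beta = 1.1\), \(\gamma = 1.15\). The sketch below simply evaluates that rule. It is illustrative only: this paper does not state these constants, and the released B1–B7 configurations use coefficients that do not follow the formula exactly.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15   # B0 grid-search constants from the EfficientNet paper


def compound_scale(phi, base_depth=1.0, base_width=1.0, base_resolution=224):
    """Depth and width multipliers plus input resolution for compound coefficient phi."""
    return (base_depth * ALPHA ** phi,
            base_width * BETA ** phi,
            base_resolution * GAMMA ** phi)


def flops_multiplier(phi):
    """FLOPs of a conv net scale with depth * width^2 * resolution^2."""
    return (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi


for phi in range(4):
    d, w, r = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution {r:.0f}px, FLOPs x{flops_multiplier(phi):.2f}")
```

The constraint is what keeps the three dimensions in proportion: \(1.2 \times 1.1^2 \times 1.15^2 \approx 1.92\), so each unit increase of \(\phi\) roughly doubles the compute.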

In this study, the entire EfficientNet-B7 architecture was fine-tuned rather than freezing the initial layers and only training the final layers. This comprehensive fine-tuning approach allows the model to adapt more effectively to the unique characteristics of breast ultrasound images, which may differ significantly from the images the model was originally pre-trained on (e.g., ImageNet). Fine-tuning all layers helps the model to learn more domain-specific features, enhancing its ability to accurately classify the images. This approach enables the model to adjust its learned features across all layers, leading to improved performance in the specific task of breast ultrasound image classification.

To prevent overfitting, an early stopping mechanism was implemented in the training process. Early stopping monitors the validation loss and halts training if it has not improved for a specified number of epochs, called the patience. In this study the patience was set to 2, so training stopped once the validation loss failed to decrease for two consecutive epochs. This keeps the model from continuing to fit noise in the training data, preserving its ability to generalize, and also saves computation by stopping when further improvement is unlikely. Eq. 23 specifies the early stopping criterion based on the validation loss \(L_{\text{val}}\), halting training if no improvement is observed for \(p\) epochs.
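A minimal sketch of the patience-based rule above, with a patience of 2 as in this study. The class name, the `min_delta` threshold for what counts as an improvement, and the example losses are illustrative assumptions. Frameworks usually pair this rule with checkpointing so that the weights from `best_epoch` can be restored; the paper does not say whether that was done.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=2, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta          # hypothetical: minimum decrease that counts
        self.best_loss = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def run(losses, patience=2):
    """Hypothetical driver: returns (last epoch trained, best epoch)."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(losses):
        if stopper.step(epoch, loss):
            return epoch, stopper.best_epoch
    return len(losses) - 1, stopper.best_epoch
```

With validation losses 0.9, 0.6, 0.5, 0.52, 0.55, training stops after epoch 4 because epochs 3 and 4 both fail to beat the best loss from epoch 2.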

The model was trained with a cross-entropy loss function, which suits multi-class classification problems like this one. Cross-entropy loss compares the predicted class-probability distribution (values between 0 and 1 that sum to 1) with the true class label, and it grows as the probability assigned to the correct class falls. The Adam optimizer, known for its computational efficiency and low memory requirements, was chosen to update the model weights. The learning rate was set to 0.00005, small enough to keep fine-tuning of the pre-trained weights stable while still allowing meaningful updates. Additionally, a step learning rate scheduler with a step size of 7 and a gamma of 0.1 was employed [19]. This scheduler multiplies the learning rate by 0.1 every 7 epochs, letting the model refine its weights more delicately as training progresses and helping it avoid overshooting minima of the loss function. Eq. 24 defines the cross-entropy loss, which measures the discrepancy between predicted probabilities and true labels. Eq. 25 updates the moving average of gradients \(m_t\) in the Adam optimizer with decay rate \(\beta_1\). Eq. 26 updates the moving average of squared gradients \(v_t\) with decay rate \(\beta_2\). Eq. 27 computes the bias-corrected first moment estimate \(\hat{m}_t\), and Eq. 28 the bias-corrected second moment estimate \(\hat{v}_t\). Eq. 29 updates the model parameters \(\theta\) using the learning rate \(\alpha\). Eq. 30 adjusts the learning rate \(\alpha\) by multiplying the previous value by a specified factor. Table 4 lists the key parameters and settings used to train the EfficientNet-B7 model.
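The loss, optimizer, and scheduler equations can be checked with a few lines of plain Python. This is a sketch of the standard formulas, not the authors' training code: it handles one sample and a flat list of parameters, and the Adam settings \(\beta_1 = 0.9\), \(\beta_2 = 0.999\), \(\epsilon = 10^{-8}\) are the usual defaults, assumed here because the text does not list them. In PyTorch, the configuration described above would typically be expressed with `torch.nn.CrossEntropyLoss()`, `torch.optim.Adam(model.parameters(), lr=5e-5)`, and `torch.optim.lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)`.

```python
import math


def softmax(logits):
    m = max(logits)                                  # subtract max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """L = -log p_target for one sample (the form of Eq. 24)."""
    return -math.log(softmax(logits)[target])


def adam_step(theta, grad, m, v, t, lr=5e-5, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update (Eqs. 25-29); t is the 1-based step count."""
    m = [beta1 * mi + (1 - beta1) * g for mi, g in zip(m, grad)]          # first moment
    v = [beta2 * vi + (1 - beta2) * g * g for vi, g in zip(v, grad)]      # second moment
    m_hat = [mi / (1 - beta1 ** t) for mi in m]                           # bias correction
    v_hat = [vi / (1 - beta2 ** t) for vi in v]
    theta = [p - lr * mh / (math.sqrt(vh) + eps)
             for p, mh, vh in zip(theta, m_hat, v_hat)]
    return theta, m, v


def step_lr(base_lr, epoch, step_size=7, gamma=0.1):
    """Learning rate after `epoch` completed epochs under a step schedule (Eq. 30)."""
    return base_lr * gamma ** (epoch // step_size)
```

Under this schedule the learning rate is 5e-5 for epochs 0–6, 5e-6 for epochs 7–13, and 5e-7 from epoch 14 on. Note that Adam's first step moves each parameter by roughly the learning rate regardless of gradient magnitude, because \(\hat{m}_1 / \sqrt{\hat{v}_1} = \pm 1\).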

Training and validation

The training process for the EfficientNet-B7 model involves fine-tuning on the BUSI dataset with a focus on classifying breast ultrasound images into benign, malignant, and normal categories. Data augmentation techniques such as RandomHorizontalFlip, RandomRotation, and ColorJitter are employed to address class imbalance and enhance the diversity of training data. The model is trained using a cross-entropy loss function, with the Adam optimizer managing weight updates, and a learning rate scheduler adjusting the learning rate to refine training. Early stopping is implemented to monitor validation loss, halting training if no improvement is observed over two consecutive epochs, thereby preventing overfitting. Throughout the training, performance metrics including precision, recall, F1-score, and accuracy are evaluated, ensuring the model’s robustness and effectiveness in accurately classifying ultrasound images.

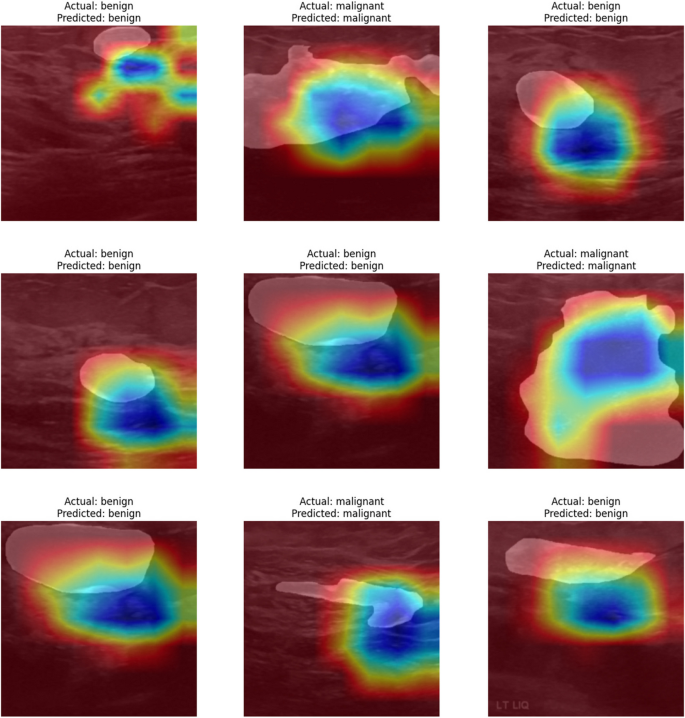

Grad-CAM as explainable AI

Gradient-weighted Class Activation Mapping (Grad-CAM) is an explainable AI technique that provides visual explanations for deep learning model predictions, particularly in image classification. Grad-CAM generates a heatmap highlighting the regions of an input image that most influence the model's decision. It computes the gradients of the target class score with respect to the feature maps of a chosen convolutional layer, then uses these gradients to weight the feature maps, emphasizing the areas with the largest impact on the prediction. Overlaying the heatmap on the original image shows which parts of the image the model is focusing on. This transparency is crucial in medical imaging applications such as breast ultrasound classification, because it allows clinicians to validate the model's decisions and check that the model relies on clinically relevant features. Grad-CAM thus improves the interpretability of deep learning models, building trust and facilitating their integration into clinical workflows [20]. Eq. 31 computes the importance weights \(\alpha_k^c\) of the feature map \(A^k\) for class \(c\) from the gradients. Eq. 32 generates the Grad-CAM heatmap \(L_{\text{Grad-CAM}}^c\) by weighting the feature maps \(A^k\) with their importance \(\alpha_k^c\) and applying a ReLU. Eq. 33 defines the Shapley value \(\varphi_i\) for feature \(i\), which measures its contribution to the prediction by averaging its marginal contributions across all possible subsets \(S\). Eq. 34 computes the gradient \(g_t\) of the loss function \(L\) with respect to the parameters \(\theta\) at time \(t\). Eq. 35 updates the parameters \(\theta\) by gradient descent with learning rate \(\eta\).
Table 5 shows the parameters and settings used for generating Grad-CAM heatmaps, enhancing the interpretability of the model’s predictions. Figure 8 shows the Grad-CAM heatmap visualization highlighting important regions influencing classification decisions.
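Eqs. 31–33 can be illustrated on toy inputs. The sketch below assumes the feature maps \(A^k\) and the gradients \(\partial y^c / \partial A^k\) have already been extracted from the network (in PyTorch this is usually done with forward and backward hooks on the chosen convolutional layer) and shows only the arithmetic: global-average-pooled gradients as weights, a weighted sum of feature maps, and a ReLU. Upsampling the map to the 224 × 224 input and overlaying it are omitted. The exact Shapley computation enumerates every subset, so it is feasible only for a handful of features; practical tools approximate it. Function names and toy values are hypothetical.

```python
from itertools import combinations
from math import factorial


def grad_cam(feature_maps, gradients):
    """Grad-CAM map from K feature maps A^k (H x W) and gradients dy^c/dA^k."""
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    z = h * w
    # Eq. 31: alpha_k^c = global average of the gradient over spatial positions
    alphas = [sum(map(sum, g)) / z for g in gradients]
    cam = [[0.0] * w for _ in range(h)]
    for a_k, fmap in zip(alphas, feature_maps):
        for i in range(h):
            for j in range(w):
                cam[i][j] += a_k * fmap[i][j]
    # Eq. 32: keep only positive evidence for class c
    return [[max(0.0, v) for v in row] for row in cam], alphas


def shapley_values(value_fn, n_features):
    """Exact Shapley values: weighted average of marginal contributions (Eq. 33).

    `value_fn` maps a frozenset of feature indices to the model output
    obtained when only those features are present.
    """
    phis = []
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        phi = 0.0
        for size in range(len(others) + 1):
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi += weight * (value_fn(s | {i}) - value_fn(s))
        phis.append(phi)
    return phis
```

In a toy case with two 2 × 2 maps, a map whose gradients are all negative receives weight \(-1\) and its activations are suppressed by the ReLU, so only the regions supporting the class remain highlighted. For Shapley values, if the output is 1 only when features 0 and 1 are both present, each receives a value of 0.5 and feature 2 receives 0.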

Statistical analysis

This analysis evaluates the breast ultrasound classification model with precision, recall, F1-score, and ROC-AUC, alongside the error metrics MAE, MSE, and RMSE. Precision measures the fraction of predicted positives that are truly positive, while recall measures the fraction of actual positives that the model captures. The F1-score combines precision and recall into a single measure, and ROC curves with AUC values summarize the model's diagnostic ability for each class (benign, malignant, normal). The confusion matrix visualizes the model's performance and shows where errors occur, and the error metrics MAE, MSE, and RMSE further quantify prediction error and sensitivity to outliers. Together these metrics assess the model's reliability for clinical applications, particularly its ability to limit false positives while still supporting early detection.
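For per-class ROC-AUC with three classes, one common approach is one-vs-rest: each class in turn is treated as positive and the others as negative. The AUC then equals the probability that a randomly chosen positive image receives a higher score for that class than a randomly chosen negative one, with ties counting one half. The sketch below computes it directly from that definition (quadratic in the number of samples, which is fine for a test set of this size); that the paper used one-vs-rest is an assumption, and the function names are hypothetical.

```python
def binary_auc(scores, positives):
    """AUC = P(score of random positive > score of random negative); ties count 1/2."""
    pos = [s for s, p in zip(scores, positives) if p]
    neg = [s for s, p in zip(scores, positives) if not p]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(prob_rows, labels, classes):
    """Per-class AUC, using column k of the predicted probabilities for class k."""
    return {c: binary_auc([row[k] for row in prob_rows], [y == c for y in labels])
            for k, c in enumerate(classes)}
```

An AUC of 1.00 for a class therefore means that every image of that class was scored higher for it than every image of the other classes.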

Experimentation & results

The experimentation with the EfficientNet-B7 model on the BUSI dataset demonstrated exceptional performance in classifying breast ultrasound images into benign, malignant, and normal categories. The model achieved high precision, recall, and F1-scores across all classes, with an overall accuracy of 99.14%. Error metrics, including MAE, MSE, and RMSE, indicated minimal prediction errors, underscoring the model’s robustness.

Different metrics

In this study, we used a comprehensive set of evaluation metrics to assess the EfficientNet-B7 model on breast ultrasound image classification. Precision is the proportion of correctly predicted positive observations among all predicted positives; it reflects how reliably the model's positive calls are correct, which is crucial for minimizing false positives in medical diagnosis. Recall, also known as sensitivity, is the proportion of actual positives that the model correctly identifies, which is essential in medical imaging to ensure that true cases, such as malignant tumors, are detected. The F1-score, the harmonic mean of precision and recall, balances false positives and false negatives and is particularly valuable for imbalanced datasets. The confusion matrix breaks down true positives, true negatives, false positives, and false negatives for each class, giving a clear picture of performance across all categories. The ROC curve plots the true positive rate against the false positive rate across classification thresholds, and the AUC summarizes it as a single number, where values closer to 1 indicate better discrimination. Additionally, error metrics such as Mean Absolute Error (MAE), Mean Squared Error (MSE), and Root Mean Squared Error (RMSE) provide quantitative measures of prediction error magnitude [21, 22]. Table 6 summarizes the error metrics MAE, MSE, and RMSE.
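The classification metrics above can all be derived from the confusion matrix, as the following sketch shows. MAE, MSE, and RMSE are not standard for nominal class labels, so the sketch assumes, as one plausible reading, that they are computed on integer class indices (for example benign = 0, malignant = 1, normal = 2); their values then depend on that arbitrary ordering. Function names and the toy labels are illustrative.

```python
import math


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_metrics(cm):
    """Precision, recall, F1, and support for each class from a confusion matrix."""
    n = len(cm)
    out = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[r][k] for r in range(n)) - tp       # predicted k, actually other
        fn = sum(cm[k]) - tp                            # actually k, predicted other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        out.append({"precision": precision, "recall": recall,
                    "f1": f1, "support": tp + fn})
    return out


def averages(metrics):
    """Macro (unweighted) and support-weighted averages of the per-class metrics."""
    total = sum(m["support"] for m in metrics)
    keys = ("precision", "recall", "f1")
    macro = {k: sum(m[k] for m in metrics) / len(metrics) for k in keys}
    weighted = {k: sum(m[k] * m["support"] for m in metrics) / total for k in keys}
    return macro, weighted


def label_errors(y_true, y_pred):
    """MAE, MSE, and RMSE on integer class indices (encoding-dependent)."""
    n = len(y_true)
    diffs = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(d) for d in diffs) / n
    mse = sum(d * d for d in diffs) / n
    return mae, mse, math.sqrt(mse)
```

The weighted-average recall always equals overall accuracy, because each class's recall is weighted by its share of the samples.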

Observed results

The EfficientNet-B7 model performed very strongly on breast ultrasound image classification. Precision was 1.000 for benign, 1.000 for malignant, and 0.952 for normal, giving a macro-average precision of 0.984 and a weighted-average precision of 0.992. Recall was 0.985 for benign, 1.000 for malignant, and 1.000 for normal, giving a macro average of 0.995 and a weighted average of 0.991, so nearly all actual positive cases were identified. The F1-scores were 0.992 for benign, 1.000 for malignant, and 0.976 for normal, with a macro average of 0.989 and a weighted average of 0.992, indicating a strong balance between precision and recall. The overall accuracy was 0.991. The error metrics were also low: a Mean Absolute Error (MAE) of 0.017, a Mean Squared Error (MSE) of 0.034, and a Root Mean Squared Error (RMSE) of 0.185. The ROC-AUC score was 1.00 for each of the benign, malignant, and normal classes, indicating excellent discriminative ability. Together, the precision, recall, accuracy, and error metrics show that EfficientNet-B7 classifies breast ultrasound images highly effectively. Figure 9 displays the classification results for benign, malignant, and normal cases, showing true positives, false negatives, and false positives. Figure 10 shows precision, recall, and F1-score for the benign, malignant, and normal classes, together with accuracy, macro-average, and weighted-average metrics. Figure 11 plots the true positive rate against the false positive rate for the benign, malignant, and normal classes, with the area under the curve (AUC) values.

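A per-class ROC-AUC of 1.00 can coexist with an accuracy slightly below 1. Each AUC is computed one-vs-rest from a single column of predicted probabilities and only asks whether images of that class receive higher scores than all other images, whereas the predicted label compares the three scores within one image. The sketch below computes AUC through its rank interpretation (the probability that a randomly chosen positive outscores a randomly chosen negative, with ties counted as one half) on hypothetical softmax outputs in which every class reaches AUC 1.0 even though one image is misclassified.

```python
def binary_auc(scores, is_positive):
    """ROC-AUC as the probability that a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for s, y in zip(scores, is_positive) if y]
    neg = [s for s, y in zip(scores, is_positive) if not y]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(probabilities, y_true, labels):
    """One AUC per class: that class's predicted probability vs. all other classes."""
    return {
        c: binary_auc([row[i] for row in probabilities], [t == c for t in y_true])
        for i, c in enumerate(labels)
    }


# Hypothetical softmax outputs (columns: benign, malignant, normal).
probs = [
    [0.90, 0.05, 0.05],  # benign, correctly classified
    [0.45, 0.00, 0.55],  # benign, but the argmax says normal
    [0.10, 0.80, 0.10],  # malignant, correctly classified
    [0.20, 0.10, 0.70],  # normal, correctly classified
]
y_true = [0, 0, 1, 2]
print(one_vs_rest_auc(probs, y_true, labels=[0, 1, 2]))
```

The pairwise loop is quadratic in the number of images, which is fine for a test set of this size; library implementations compute the same quantity from sorted ranks.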
Comparison with existing approaches

The EfficientNet-B7 model compares favourably with earlier methods for breast ultrasound image classification. It achieves higher accuracy and precision than traditional machine learning models and earlier deep learning approaches, which often struggle with false positives and false negatives. It also attains higher ROC-AUC scores, reflecting better separation between classes; the lower scores of older models plausibly stem from less expressive feature extraction. Its low error metrics, including Mean Absolute Error (MAE), Mean Squared Error (MSE), and Root Mean Squared Error (RMSE), indicate fewer misclassifications. In addition, Grad-CAM applied to EfficientNet-B7 provides visual explanations of its decisions, improving transparency and trust in clinical settings. Table 7 and Fig. 12 show that the EfficientNet-B7 model outperforms the other methods, achieving an accuracy of 99.18% and a precision of 96.21%.
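Grad-CAM weights each feature map of a chosen convolutional layer by the spatially averaged gradient of the target class score, sums the weighted maps, and keeps only the positive part. In a PyTorch pipeline the activations and gradients would typically be captured with hooks on EfficientNet-B7's last convolutional stage; the sketch below assumes they have already been extracted as nested lists and shows only the combination step that defines the technique. The layer choice and the peak normalization are assumptions, and upsampling the coarse map to image size before overlaying it is omitted.

```python
def grad_cam(activations, gradients, normalize=True):
    """Grad-CAM heatmap from one layer's feature maps and the class-score gradients.

    activations[k][i][j]: value of feature map k at spatial position (i, j)
    gradients[k][i][j]:   d(class score) / d(activations[k][i][j])
    """
    num_maps = len(activations)
    h, w = len(activations[0]), len(activations[0][0])
    # alpha_k: global-average-pooled gradient, i.e. how much map k matters for the class.
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # Weighted sum of the maps, then ReLU to keep only evidence in favour of the class.
    cam = [
        [max(0.0, sum(alphas[k] * activations[k][i][j] for k in range(num_maps))) for j in range(w)]
        for i in range(h)
    ]
    if normalize:
        peak = max(max(row) for row in cam)
        if peak > 0:
            cam = [[v / peak for v in row] for row in cam]  # scale to [0, 1] for overlaying
    return cam


# Hypothetical 2-channel, 2 x 2 feature maps: channel 0 supports the class, channel 1 opposes it.
A = [[[1, 0], [0, 1]], [[0, 2], [2, 0]]]
G = [[[1, 1], [1, 1]], [[-1, -1], [-1, -1]]]
print(grad_cam(A, G))
```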

Overall, the EfficientNet-B7 model achieved the highest accuracy (99.14%) among the traditional and deep learning models compared in this study.

Discussion

EfficientNet-B7 belongs to the EfficientNet family, whose models are scaled jointly in depth, width, and input resolution; the larger variants such as B7 are designed for high-resolution inputs, which helps preserve the fine texture detail needed for feature extraction in breast ultrasound images. Data augmentation with RandomHorizontalFlip, RandomRotation, and ColorJitter makes the model more robust to variations in image presentation, which should help it generalize across clinical environments. Consistent preprocessing and normalization keep the input distribution stable, supporting stable training and convergence. Fine-tuning the entire network, combined with early stopping, adapts the model to the dataset while limiting overfitting. The model was evaluated with precision, recall, F1-scores, and confusion matrices, which together give a detailed picture of its diagnostic behaviour and support its use in further clinical and research applications.
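Early stopping can be sketched as a small patience counter: training stops once the monitored validation metric has failed to improve for a fixed number of consecutive epochs, and the weights from the best epoch are kept. The monitored quantity (validation loss), the patience, and the `min_delta` threshold below are illustrative assumptions, not settings taken from the study.

```python
import math


class EarlyStopping:
    """Stop training when the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience      # hypothetical default, not the study's value
        self.min_delta = min_delta    # smallest decrease that counts as an improvement
        self.best_loss = math.inf
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0  # a real training loop would checkpoint the weights here
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical validation losses; training halts three epochs after the best one.
stopper = EarlyStopping(patience=3)
for epoch, val_loss in enumerate([0.90, 0.60, 0.50, 0.52, 0.51, 0.55, 0.40]):
    if stopper.step(epoch, val_loss):
        break
print(epoch, stopper.best_epoch, stopper.best_loss)  # stops at epoch 5; best was epoch 2
```

Note that the later improvement to 0.40 is never seen: a larger patience trades extra training time for a lower risk of stopping on a temporary plateau.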

Within the diagnostic framework, EfficientNet-B7 processes high-resolution breast ultrasound images and captures the relevant pathological features. Extensive data augmentation and consistent preprocessing make the model more robust across varied clinical imaging conditions. Fine-tuning the entire network with early stopping adapts it to the characteristics of ultrasound imagery while preserving generalizability. These choices position the model both as a diagnostic aid and as a platform for further clinical research, and suggest that it could improve patient outcomes by enabling earlier and more precise detection of breast pathologies.
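Input normalization usually means scaling 8-bit intensities to [0, 1] and standardizing each channel with a fixed mean and standard deviation. The sketch below assumes the ImageNet channel statistics commonly paired with ImageNet-pretrained EfficientNet weights, and assumes single-channel ultrasound images are replicated to three channels; neither choice is taken from the study, and the helper names are hypothetical.

```python
# ImageNet channel statistics; an assumption here, not values reported by the study.
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Scale an 8-bit RGB pixel to [0, 1], then standardize each channel: (x - mean) / std."""
    return tuple((v / 255.0 - m) / s for v, m, s in zip(rgb, mean, std))


def normalize_image(image, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Apply normalize_pixel to every pixel of an H x W image of RGB tuples."""
    return [[normalize_pixel(px, mean, std) for px in row] for row in image]


def gray_to_rgb(image):
    """Replicate a single-channel ultrasound intensity into three identical channels."""
    return [[(v, v, v) for v in row] for row in image]


tiny = gray_to_rgb([[0, 128], [255, 64]])  # hypothetical 2 x 2 grayscale patch
print(normalize_image(tiny)[1][0])  # normalized values of the white pixel
```

Applying the same fixed statistics at training and inference time is what keeps the input distribution consistent; recomputing them per image would remove global brightness information.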

Combining EfficientNet-B7 with these image processing techniques is also promising for real-time diagnostic systems. EfficientNet models offer a favourable trade-off between accuracy and computational cost compared with earlier CNNs of similar accuracy, which matters when diagnoses must be fast and reliable; B7 is, however, the most computationally demanding of the EfficientNet-B0 to B7 models, so inference latency would need to be measured on the target hardware. Augmentation with RandomHorizontalFlip, RandomRotation, and ColorJitter makes the model more robust to differences in image orientation and lighting, which should help it perform consistently across clinical settings. Explainable AI (XAI) methods such as Grad-CAM add transparency, helping clinicians understand and trust AI-assisted diagnostic decisions. Together, these features make the method a candidate for real-time clinical use, where it could support rapid decision-making and improve patient outcomes by shortening the time to diagnosis and treatment.
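The following pure-Python functions are simplified stand-ins for the three augmentations named above, operating on a grayscale image stored as nested lists: a left-right mirror, a rotation about the image centre with nearest-neighbour sampling and zero fill, and a brightness and contrast rescaling. They are not torchvision's implementations, which also handle tensors, interpolation modes, and saturation and hue jitter. The flip probability of 0.5 matches torchvision's RandomHorizontalFlip default, while the ±10° rotation range and ±20% jitter strength are hypothetical settings rather than the study's parameters.

```python
import math
import random


def hflip(img):
    """Mirror an H x W image left-right (what RandomHorizontalFlip does when it fires)."""
    return [row[::-1] for row in img]


def rotate_nearest(img, degrees, fill=0):
    """Rotate about the image centre with nearest-neighbour sampling; uncovered pixels get `fill`."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    t = math.radians(degrees)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse mapping: find which source pixel lands on output position (y, x).
            dx, dy = x - cx, y - cy
            sx = cos_t * dx + sin_t * dy + cx
            sy = -sin_t * dx + cos_t * dy + cy
            ix, iy = round(sx), round(sy)
            if 0 <= iy < h and 0 <= ix < w:
                out[y][x] = img[iy][ix]
    return out


def color_jitter(img, brightness=1.0, contrast=1.0):
    """Scale brightness, then stretch or compress contrast around the mean; clip to [0, 255]."""
    bright = [[min(255.0, max(0.0, v * brightness)) for v in row] for row in img]
    flat = [v for row in bright for v in row]
    mean = sum(flat) / len(flat)
    return [[min(255.0, max(0.0, mean + contrast * (v - mean))) for v in row] for row in bright]


def augment(img, rng, p_flip=0.5, max_degrees=10.0, strength=0.2):
    """One random draw of flip, rotation, and brightness/contrast jitter (hypothetical settings)."""
    if rng.random() < p_flip:
        img = hflip(img)
    img = rotate_nearest(img, rng.uniform(-max_degrees, max_degrees))
    return color_jitter(
        img,
        brightness=rng.uniform(1 - strength, 1 + strength),
        contrast=rng.uniform(1 - strength, 1 + strength),
    )


patch = [[0, 50, 100], [150, 200, 250]]  # hypothetical 2 x 3 grayscale patch
print(augment(patch, random.Random(0)))
```

Because a fresh random draw is made each time an image is loaded, the network rarely sees exactly the same pixels twice, which is the source of the robustness described above.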

The approach also has limitations. EfficientNet-B7 and the associated techniques require substantial computational resources, which may not be available in all settings. Performance depends on the quality and diversity of the training data, and any biases in that data may affect the results; the model was fine-tuned on a single public dataset (BUSI). Although Grad-CAM provides visual explanations, clinicians may find them difficult to interpret. Integrating such a complex system into clinical workflows requires careful planning and validation. Finally, the model is tailored to breast ultrasound images and may need adaptation for other types of medical imaging.

Conclusion

This study presents an approach to classifying breast ultrasound images into benign, malignant, and normal categories using the EfficientNet-B7 architecture. Data augmentation with RandomHorizontalFlip, RandomRotation, and ColorJitter helps address class imbalance and improves the model’s robustness. Fine-tuning EfficientNet-B7 on the BUSI dataset with early stopping yielded a classification accuracy of 99.14%. The approach also integrates Explainable AI (XAI) techniques such as Grad-CAM, which make the model’s predictions more interpretable by revealing the regions and features of ultrasound images that drive each classification, thereby increasing the model’s transparency and trustworthiness in clinical settings. These findings indicate that combining scalable CNN architectures such as EfficientNet-B7 with targeted augmentation and XAI techniques can substantially improve automated diagnostic systems, supporting the early detection and treatment of breast cancer.

Future work could apply this methodology to larger and more diverse datasets to further validate the model’s generalizability across imaging conditions and patient demographics. Other state-of-the-art architectures and ensemble learning techniques could also be explored to improve classification performance. Extending the approach to other medical imaging modalities and adding real-time processing capabilities would be valuable extensions. Finally, a comprehensive framework for integrating such models into clinical workflows, including real-time deployment and user-friendly interfaces for clinicians, will be critical for broader clinical adoption.

The proposed EfficientNet-B7-based framework advances breast ultrasound image classification, achieving high accuracy while providing interpretable insights through XAI techniques. This work underscores the potential of advanced deep learning architectures and targeted data augmentation strategies to improve the accuracy and reliability of automated systems in medical imaging. By improving the classification of breast ultrasound images, the study contributes to the early detection and diagnosis of breast cancer and offers a promising tool to help clinicians make informed and accurate decisions. Ultimately, the approach aims to improve patient outcomes and treatment efficacy, highlighting the role of advanced AI technologies in healthcare diagnostics.
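Augmentation only counters class imbalance if minority classes receive more augmented samples than the majority class. The sketch below does not reproduce the study's exact balancing procedure; it shows two common options under stated assumptions: oversampling each class with augmented copies until it matches the largest class, and, as an alternative, weighting the loss by inverse class frequency using the same formula as scikit-learn's "balanced" class weights. The counts are the commonly reported class sizes of the full BUSI dataset, and `oversample` and `balanced_class_weights` are hypothetical helper names.

```python
import random


def balanced_class_weights(class_counts):
    """Inverse-frequency weights n_samples / (n_classes * n_c); rarer classes weigh more."""
    n_samples = sum(class_counts.values())
    n_classes = len(class_counts)
    return {c: n_samples / (n_classes * n) for c, n in class_counts.items()}


def oversample(samples_by_class, augment_fn, rng):
    """Top up every class to the size of the largest one with augmented copies."""
    target = max(len(items) for items in samples_by_class.values())
    balanced = {}
    for c, items in samples_by_class.items():
        # Draw random originals from this class and augment each draw.
        extra = [augment_fn(rng.choice(items)) for _ in range(target - len(items))]
        balanced[c] = list(items) + extra
    return balanced


# Class sizes commonly reported for the full BUSI dataset (780 images).
busi_counts = {"benign": 437, "malignant": 210, "normal": 133}
print(balanced_class_weights(busi_counts))
print({c: max(busi_counts.values()) - n for c, n in busi_counts.items()})
# {'benign': 0, 'malignant': 227, 'normal': 304}
```

Oversampling should be applied to the training split only, after the train/test split, so that augmented copies of test images never leak into training and inflate the reported metrics.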

Availability of data and materials

The data that support the findings of this study are openly available at https://www.kaggle.com/datasets/aryashah2k/breast-ultrasound-images-dataset.

References

Yadav N, Dass R, Virmani J. Objective assessment of segmentation models for thyroid ultrasound images. J Ultrasound. 2023;26(3):673–85.

Yadav N, Dass R, Virmani J. Despeckling filters applied to thyroid ultrasound images: a comparative analysis. Multimedia Tools Appl. 2022;81(6):8905–37.

Kriti, Virmani J, Agarwal R. Assessment of despeckle filtering algorithms for segmentation of breast tumours from ultrasound images. Biocybern Biomed Eng. 2018;39:100–21.

Dass R, Yadav N. Image quality assessment parameters for despeckling filters. Procedia Comput Sci. 2020;167:2382–92.

Yadav N, Dass R, Virmani J. Deep learning-based CAD system design for thyroid tumor characterization using ultrasound images. Multimedia Tools Appl. 2024;83(14):43071–113.

Yadav N, Dass R, Virmani J. A systematic review of machine learning based thyroid tumor characterisation using ultrasonographic images. J Ultrasound. 2024;27:1–16.

Mishra AK, Roy P, Bandyopadhyay S, Das SK. A multi-task learning based approach for efficient breast cancer detection and classification. Expert Syst. 2022;39(9):e13047.

Sahu A, Das PK, Meher S. An efficient deep learning scheme to detect breast cancer using mammogram and ultrasound breast images. Biomed Signal Process Control. 2024;87:105377.

Balaha HM, Saif M, Tamer A, Abdelhay EH. Hybrid deep learning and genetic algorithms approach (HMB-DLGAHA) for the early ultrasound diagnoses of breast cancer. Neural Comput Appl. 2022;34(11):8671–95.

Ali MD, Saleem A, Elahi H, Khan MA, Khan MI, Yaqoob MM, Al-Rasheed A. Breast cancer classification through meta-learning ensemble technique using convolution neural networks. Diagnostics. 2023;13(13):2242.

Pacal İ. Deep learning approaches for classification of breast cancer in ultrasound (US) images. J Inst Sci Technol. 2022;12(4):1917–27.

Alzahrani Y, Boufama B. Deep learning approach for breast ultrasound image segmentation. In: 2021 4th International Conference on Artificial Intelligence and Big Data (ICAIBD). IEEE; 2021. p. 437–42.

Sahu A, Das PK, Meher S, Panda R, Abraham A. An efficient deep learning-based breast cancer detection scheme with small datasets. In: International Conference on Intelligent Systems Design and Applications. Cham: Springer Nature Switzerland; 2022. p. 39–48.

Sahu A, Das PK, Meher S. High accuracy hybrid CNN classifiers for breast cancer detection using mammogram and ultrasound datasets. Biomed Signal Process Control. 2023;80:104292.

Abhisheka B, Biswas SK, Purkayastha B. A comprehensive review on breast cancer detection, classification and segmentation using deep learning. Arch Comput Methods Eng. 2023;30(8):5023–52.

Pramanik P, Mukhopadhyay S, Kaplun D, Sarkar R. A deep feature selection method for tumor classification in breast ultrasound images. In: International conference on mathematics and its applications in new computer systems. Cham, Switzerland: Springer International Publishing; 2021. p. 241–52.

Cruz-Ramos C, García-Avila O, Almaraz-Damian JA, Ponomaryov V, Reyes-Reyes R, Sadovnychiy S. Benign and malignant breast tumor classification in ultrasound and mammography images via fusion of deep learning and handcraft features. Entropy. 2023;25(7):991.

Chen N, Han B, Li Z, Wang H. Breast cancer prediction based on the CNN models. Highlights Sci Eng Technol. 2023;34:103–9.

Al Moteri M, Mahesh TR, Thakur A, Vinoth Kumar V, Khan SB, Alojail M. Enhancing accessibility for improved diagnosis with modified EfficientNetV2-S and cyclic learning rate strategy in women with disabilities and breast cancer. Front Med. 2024;11:1373244.

Naas M, et al. A deep learning based computer aided diagnosis (CAD) tool supported by explainable artificial intelligence for breast cancer exploration. 2024. https://doi.org/10.2139/ssrn.4689420.

Albalawi E, Thakur A, Ramakrishna MT, Khan SB, SankaraNarayanan S, Almarri B, Hadi TH. Oral squamous cell carcinoma detection using EfficientNet on histopathological images. Front Med. 2024;10:1349336.

Thakur A, Gupta M, Sinha DK, Mishra KK, Venkatesan VK, Guluwadi S. Transformative breast cancer diagnosis using CNNs with optimized ReduceLROnPlateau and early stopping enhancements. Int J Comput Intell Syst. 2024;17(1):14.

Umer MJ, Sharif M, Wang SH. Breast cancer classification and segmentation framework using multiscale CNN and U-shaped dual decoded attention network. Expert Syst. 2022;14:e13192.

Taheri M, Omranpour H. Breast cancer prediction by ensemble meta-feature space generator based on deep neural network. Biomed Signal Process Control. 2024;87:105382.

Podda AS, Balia R, Barra S, Carta S, Fenu G, Piano L. Fully-automated deep learning pipeline for segmentation and classification of breast ultrasound images. J Comput Sci. 2022;63:101816.

Pathan RK, Alam FI, Yasmin S, Hamd ZY, Aljuaid H, Khandaker MU, Lau SL. Breast cancer classification by using multi-headed convolutional neural network modeling. Healthcare. 2022;10(12):2367.

Gupta S, Agrawal S, Singh SK, Kumar S. A novel transfer learning-based model for ultrasound breast cancer image classification. In: Computational Vision and Bio-Inspired Computing: Proceedings of ICCVBIC 2022. Singapore: Springer Nature Singapore; 2023. p. 511–23.

Jabeen K, Khan MA, Alhaisoni M, Tariq U, Zhang YD, Hamza A, Damaševičius R. Breast cancer classification from ultrasound images using probability-based optimal deep learning feature fusion. Sensors. 2022;22(3):807.

Hamdy E, Zaghloul MS, Badawy O. Deep learning supported breast cancer classification with multi-modal image fusion. In: 2021 22nd International Arab Conference on Information Technology (ACIT). IEEE; 2021. p. 1–7.

Sathishkumar R, Vinothini B, Rajasri N, Govindarajan M. Detection and classification of breast cancer from Ultrasound images using NASNet Model. Int J Comput Digit Syst. 2024;16(1):1–13.

Gurmessa KD, Jimma W. Explainable machine learning for breast cancer diagnosis from mammography and ultrasound images: a systematic review. BMJ Health Care Inf. 2024;31(1):e100954.

Sasirekha N, et al. Breast cancer detection using histopathology image with mini-batch stochastic gradient descent and convolutional neural network. IFS. 2023;1:4651–67.

Ahmed FM, Mohammed DBS. Feasibility of breast cancer detection through a convolutional neural network in mammographs. Tamjeed J Healthc Eng Sci Technol. 2023;1(2):36–43.

Kalafi EY, Jodeiri A, Setarehdan SK, Lin NW, Rahmat K, Taib NA, Dhillon SK. Classification of breast cancer lesions in ultrasound images by using attention layer and loss ensemble in deep convolutional neural networks. Diagnostics. 2021;11(10):1859.

Zhang J, Zhang Z, Liu H, Xu S. SaTransformer: semantic-aware transformer for breast cancer classification and segmentation. IET Image Proc. 2023;17(13):3789–800.

Mahoro E, Akhloufi MA. Breast cancer classification on thermograms using deep CNN and transformers. Quant InfraRed Thermography J. 2024;21(1):30–49.

Wan Y, et al. D-TransUNet: A Breast Tumor Ultrasound Image Segmentation Model Based on Deep Feature Fusion. J Artif Intell Med Sci. 2024;0(0):00. https://doi.org/10.55578/joaims.240226.001.

Funding

This research received no external funding.

Author information

Contributions

M.L. and P.S.K. conducted the literature review and developed the methodology. R.R.C. and M.T.R. performed the formal analysis, data collection, and investigation. V.K.V. prepared the initial draft and performed the statistical analysis. S.G. supervised the overall project. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Latha, M., Kumar, P.S., Chandrika, R.R. et al. Revolutionizing breast ultrasound diagnostics with EfficientNet-B7 and Explainable AI. BMC Med Imaging 24, 230 (2024). https://doi.org/10.1186/s12880-024-01404-3

DOI: https://doi.org/10.1186/s12880-024-01404-3