- Systematic Review

- Open access


Deep learning-based techniques for estimating high-quality full-dose positron emission tomography images from low-dose scans: a systematic review

BMC Medical Imaging volume 24, Article number: 238 (2024)

Abstract

This systematic review aimed to evaluate the potential of deep learning algorithms for converting low-dose Positron Emission Tomography (PET) images into full-dose PET images across different body regions. A total of 55 articles published between 2017 and 2023, identified by searching the PubMed, Web of Science, Scopus and IEEE databases, were included in this review. These studies used various deep learning models, such as generative adversarial networks and UNET, to synthesize high-quality PET images. They differed in their datasets, image preprocessing techniques, input data types, and loss functions. The generated PET images were evaluated using both quantitative and qualitative methods, including image quality metrics and physician evaluations. The findings of this review suggest that deep learning algorithms have promising potential for generating high-quality PET images from low-dose PET images, which can be useful in clinical practice.

Introduction

Positron Emission Tomography (PET) is an advanced nuclear medicine imaging modality that generates detailed three-dimensional (3D) images. Using a range of radiotracers, it provides valuable semi-quantitative and metabolic information about a patient's body. These radiotracers include the widely used fluorodeoxyglucose (18F-FDG), as well as gallium-68 (68Ga) combined with compounds such as PSMA and DOTATATE, among others. PET enables clinicians to visualize and assess various physiological processes at the molecular level. This provides essential information for the diagnosis, treatment planning, and monitoring of diseases such as cancer. The PET system detects pairs of gamma rays emitted indirectly by a radioactive tracer introduced into the body. This tracer is attached to a molecule that can be either metabolically or non-metabolically active. 3D PET images of the distribution of radiopharmaceutical concentration within the body are then reconstructed computationally. Since the introduction of the PET scanner in the early 1970s, PET has been widely used to diagnose diseases and analyze metabolic processes in various medical fields, such as oncology, neurology, and cardiology [1].

In PET imaging, high-quality images are usually obtained by injecting a high dose of radionuclide or by increasing the scanning time. A high injected dose, however, increases the radiation exposure of patients and healthcare providers. This risk is more serious for children and for patients who undergo multiple PET scans during their treatment. Given these concerns about internal radiation exposure, it is desirable to reduce the injected radioactivity, but doing so compromises image quality. Because fewer counts are detected during acquisition, a low-dose PET (LD PET) image is inferior to a full-dose PET (FD PET) image. As a result, the LD PET image exhibits increased noise, potentially additional artifacts, and a decreased signal-to-noise ratio (SNR) [2].

A range of methods [3,4,5,6] have been proposed to improve image quality and reduce PET image noise and artifacts. They can generally be classified into two categories: image post-processing [3, 7] and incorporation into iterative reconstruction [5, 6, 8, 9]. Image post-processing methods apply filters or statistical denoising algorithms to the reconstructed images, but they may introduce blurring or distortions. Iterative reconstruction methods incorporate noise reduction into the reconstruction process itself, for example through penalized-likelihood reconstruction or time-of-flight (TOF) information. These methods aim to improve contrast, resolution, and lesion detectability. Resolution modeling can also improve spatial resolution and reduce noise. The choice of method depends on the scanner, acquisition protocol, and clinical application.
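The blurring trade-off of image post-processing can be seen in a toy example. The minimal NumPy sketch below applies a Gaussian smoothing filter to a noisy 1D activity profile that contains a sharp edge. The profile, the Poisson noise model, and the filter width `sigma` are illustrative assumptions and are not taken from any reviewed study. In this sketch, the filter lowers noise in the flat background but widens the edge.

```python
import numpy as np

def gaussian_kernel(sigma: float) -> np.ndarray:
    """Normalized 1D Gaussian kernel truncated at +/- 3 sigma."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def smooth(profile: np.ndarray, sigma: float) -> np.ndarray:
    """Gaussian post-filtering; edge padding keeps the output length unchanged."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(profile, r, mode="edge")
    return np.convolve(padded, k, mode="valid")

def edge_width(profile: np.ndarray) -> int:
    """Number of samples between the 10% and 90% levels of a rising edge."""
    lo, hi = profile.min(), profile.max()
    t10, t90 = lo + 0.1 * (hi - lo), lo + 0.9 * (hi - lo)
    return int(np.argmax(profile >= t90) - np.argmax(profile >= t10))

# Hypothetical profile: background of 10 and lesion of 40 expected counts per bin.
truth = np.where(np.arange(200) < 100, 10.0, 40.0)
rng = np.random.default_rng(0)
noisy = rng.poisson(truth).astype(float)   # Poisson counting noise
filtered = smooth(noisy, sigma=3.0)

print("background std, raw vs filtered:", noisy[10:90].std(), filtered[10:90].std())
print("edge width, raw vs filtered:", edge_width(truth), edge_width(smooth(truth, 3.0)))
```

A larger `sigma` removes more noise but blurs the edge further. This is the limitation that motivates both regularized iterative reconstruction and learned denoisers.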

In recent years, deep learning methods have rapidly gained popularity in medical imaging for reducing the noise of LD PET images and restoring their quality to the level of FD PET images. Compared with traditional image post-processing and iterative reconstruction methods, these methods have been reported to offer several advantages, including higher image quality, shorter processing times, and greater generalizability.

Therefore, this systematic review aims to provide a comprehensive overview of the current state-of-the-art in deep learning-based techniques for estimating FD PET images from LD scans and to identify the challenges and opportunities for future research in this area.

Materials and methods

Literature Search

This study was performed according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [10]. The study protocol was registered in PROSPERO (CRD42022370329). A systematic search was conducted in the PubMed, IEEE, Scopus, and Web of Science databases through July 2023. The search strategy, shown in Table 1, was adapted to include combinations of equivalent terms. Additionally, the reference lists of related articles were scrutinized using the snowball method to identify further relevant articles.

Inclusion and Exclusion

Eligible articles were considered based on the following inclusion criteria:

a) Studies should present the development of at least one deep learning (DL) model for denoising LD PET images of any human organ.

b) Studies should compare the quality of the PET images generated by the DL models with that of FD PET images.

c) Articles should report original studies (not reviews, meta-analyses, editorials, or letters), concern humans, and be written in English.

Quality assessment

The quality of the included articles was assessed using the Checklist for Artificial Intelligence in Medical Imaging (CLAIM) [11], a 42-item checklist designed to evaluate medical imaging AI studies. Each item was scored as 0 or 1. The CLAIM score of each study was calculated as the sum of its item scores, with all items weighted equally.
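Written out, this scoring rule is an unweighted sum. The notation below is introduced here only for illustration: $s_{ij}$ is the score of study $j$ on item $i$.

```latex
\mathrm{CLAIM}_j = \sum_{i=1}^{42} s_{ij}, \qquad s_{ij} \in \{0, 1\}, \qquad 0 \le \mathrm{CLAIM}_j \le 42
```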

Study selection

All authors collaborated on this study, and two of them evaluated the papers for selection. First, the titles and abstracts were screened, and papers not relevant to the search topic were excluded. Next, the full texts of the remaining papers were reviewed. Papers developing deep learning algorithms to reduce noise and improve image quality in LD PET imaging were included. Any disagreements in the selection process were resolved by consensus with a third member of the research team.

Data extraction

Subsequently, the key characteristics of the studies were captured and data were extracted, including: (a) first author and year of publication, (b) number of samples, (c) study design, (d) demographic and clinical characteristics of patients, (e) dose reduction factor, (f) architecture of the proposed deep learning algorithm, (g) data preprocessing steps, and (h) evaluation methods and image quality metrics. If a study investigated more than one algorithm, data were extracted for the algorithm with the highest performance.

Data and statistical analysis

The data were aggregated, summarized descriptively, and synthesized in narrative and tabular forms. The tables present general information about the studies, including authors, year, paper type, research method, intervention, and outcomes.

Results

Search results

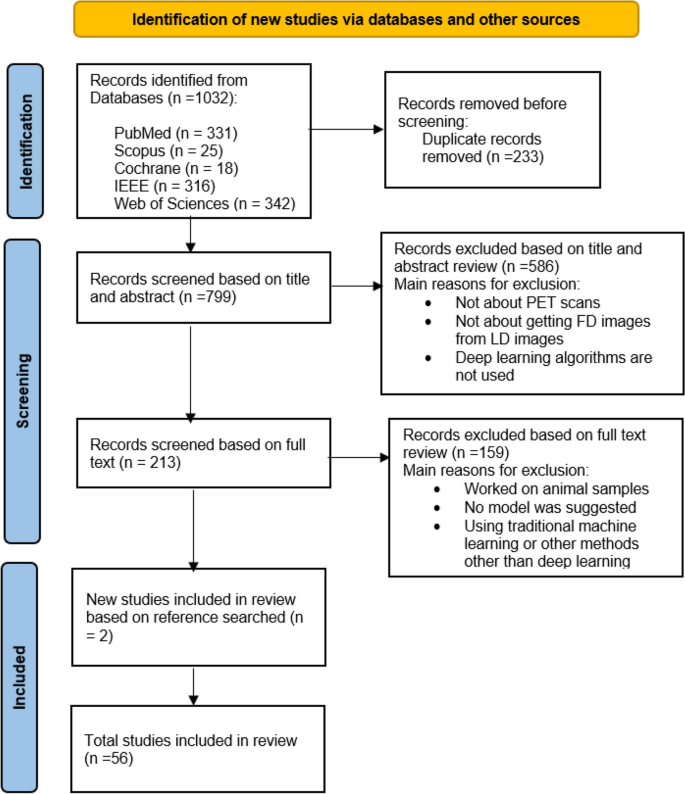

The initial systematic search identified 1032 studies. After duplicates were removed, 799 articles remained for title and abstract assessment, and 213 of these were selected for full-text evaluation. At the full-text stage, 159 articles were excluded because they did not propose an architecture, only compared existing architectures, or used animal datasets. Finally, 56 articles published between 2017 and 2023 were included in this systematic review. Two of these were found by snowballing through the reference lists of other articles. All included studies aimed to demonstrate the potential of deep learning algorithms for LD-to-FD PET conversion. The flowchart of study selection is shown in Fig. 1.
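The selection counts above can be cross-checked with simple arithmetic. The Python sketch below derives the intermediate numbers implied by the text. It assumes, as the wording suggests, that the two snowballed articles were added after full-text screening; the variable names are our own.

```python
# Study-selection counts as reported in the text.
identified = 1032          # records found by the database search
after_duplicates = 799     # records left for title/abstract screening
full_text = 213            # articles assessed in full text
excluded_full_text = 159   # articles excluded at the full-text stage
snowballed = 2             # included articles found via reference lists

duplicates_removed = identified - after_duplicates    # duplicates
excluded_screening = after_duplicates - full_text     # excluded on title/abstract
from_databases = full_text - excluded_full_text       # included from the search
included = from_databases + snowballed                # total included

print(duplicates_removed, excluded_screening, from_databases, included)
# prints: 233 586 54 56
```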

Quality assessment

Table 2 presents a summary of the quality assessment for the included studies using the CLAIM tool.

Dataset characteristics

The included studies comprised both prospective and retrospective analyses. Studies [16, 26,27,28,29, 37, 40, 41, 64, 66] were retrospective, while the others were prospective. Dataset sizes varied widely; the smallest dataset, in the study by Kaplan et al. [14], contained only 2 patients. Most studies analyzed fewer than 40 patients. The studies with the largest sample sizes, [7, 66] and [54], included 311 and 587 samples, respectively. See Table 3 for further details; additional information is available in the Supplementary material.

Datasets were either real-world data or simulated, as in [30, 53, 60], and included healthy subjects, patients, or both, in different body regions. Patients were scanned in the following regions:

- brain [12, 13, 15, 17, 18, 20, 21, 26,27,28,29, 31, 39,40,41, 46, 52, 53, 55, 60, 64, 65, 68]
- lung [19, 21, 25, 34]
- bowel [44]
- thorax [34, 45]
- breast [50]
- neck [57]
- abdomen [63]

In addition, twenty-two studies used whole-body images [14, 16, 22, 23, 32, 33, 35,36,37,38, 42, 43, 48, 49, 51, 54, 58, 59, 61, 62, 66, 67]. PET data were acquired with various scanners after the administration of different radiopharmaceuticals, such as 18F-FDG, 18F-florbetaben, 68Ga-PSMA, 18F-FE-PE2I, 11C-PiB, 18F-AV45, 18F-ACBC, 18F-DCFPyL, or other amyloid radiopharmaceuticals. The low-dose levels used to estimate FD PET images ranged from 0.2% up to 50% of the full dose.

In these studies, the data were pre-processed to prepare them as model input. Some articles also used data augmentation to compensate for the limited number of samples [13, 18, 19, 47].
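For paired LD/FD training data, any geometric augmentation must be applied identically to both images. Otherwise the voxel-wise correspondence that serves as the training target is lost. The specific augmentations used by the cited studies are not described here. The random flips and 90-degree rotations in this NumPy sketch are assumptions chosen for illustration, and the LD/FD slices are synthetic.

```python
import numpy as np

def augment_pair(ld: np.ndarray, fd: np.ndarray, rng: np.random.Generator):
    """Apply the same random 90-degree rotation and optional left-right flip
    to a paired LD/FD slice so that the pair stays spatially aligned."""
    k = int(rng.integers(0, 4))              # number of 90-degree rotations
    ld, fd = np.rot90(ld, k), np.rot90(fd, k)
    if rng.random() < 0.5:                   # flip both images or neither
        ld, fd = np.fliplr(ld), np.fliplr(fd)
    return ld.copy(), fd.copy()

rng = np.random.default_rng(42)
fd = rng.gamma(2.0, 1.0, size=(64, 64))      # hypothetical FD slice
ld = fd / 10.0                               # hypothetical LD slice (noise omitted)
ld_aug, fd_aug = augment_pair(ld, fd, rng)
print(np.allclose(ld_aug * 10.0, fd_aug))    # True: the pairing is preserved
```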

Design

To synthesize high-quality PET images using deep learning, a model must be trained to learn a mapping between LD and FD PET images.

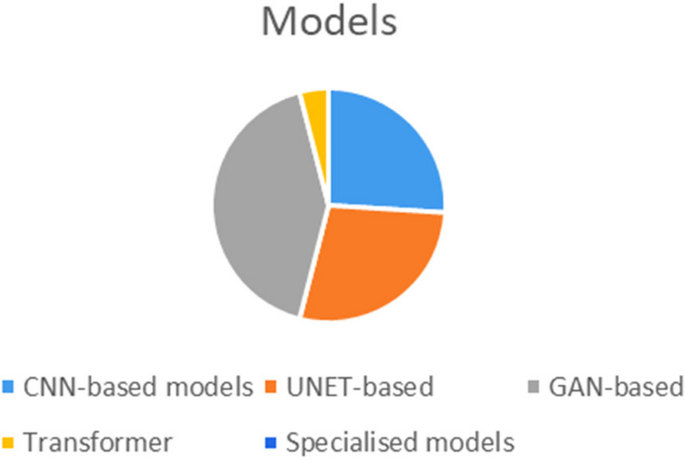

Several models based on convolutional neural networks (CNNs), UNET, and generative adversarial networks (GANs) have been proposed, with GANs being the most widely used among them.

Based on a systematic literature review of medical image reconstruction and synthesis studies, this review included the following models:

- thirteen CNN-based models [12,13,14, 21, 27, 31, 36, 42, 43, 51, 56, 58, 67]
- fifteen UNET-based models [17, 19, 22, 26, 28, 37, 39, 40, 44, 45, 48, 55, 61, 62, 66]
- twenty-one GAN-based models [15, 16, 18, 20, 23,24,25, 29, 32, 33, 35, 38, 41, 46, 47, 49, 50, 52,53,54, 65]
- two transformer models [60, 69]
- several other specialized models [48, 57, 59,60,61, 63, 68]

These models are discussed and compared below. The frequency of the models employed in the reviewed studies is shown in Fig. 2.

To the best of our knowledge, Xiang et al. [12] were among the first to propose a CNN-based method for FD PET image estimation. Their 2017 method, called auto-context CNN, combines multiple CNN modules following an auto-context strategy to iteratively refine the results. In 2019, Kaplan et al. [14] developed a residual CNN that integrated specific image features into the loss function to preserve edge, structural, and textural details. This network successfully removed noise from PET images acquired with 1/10th of the full dose.

Gong et al. [21] trained a CNN on simulated data and fine-tuned it with real data to remove noise from brain and lung PET images. In subsequent research, Wang et al. [36] conducted a similar study aimed at enhancing the quality of whole-body PET scans, employing a CNN together with the corresponding MR images. Spuhler et al. [27] employed a CNN variant with dilated kernels in each convolution, which improved feature extraction. Mehranian et al. [30] proposed a forward-backward splitting algorithm for Poisson likelihood. They then unrolled this algorithm into a recurrent neural network consisting of several CNN-based blocks.

Research has also demonstrated the strength of CNNs with a UNET structure for producing high-fidelity PET images. In 2017, Xu et al. [13] showed that a UNET network can accurately learn the difference between an LD PET image and the reference FD PET image, with the LD image corresponding to only 1/200th of the injected dose. Notably, they used the UNET skip connections specifically to improve the learning of image details. Chen et al. (2019) [17] proposed combining LD PET and multi-contrast MRI as conditional inputs to a UNET architecture to produce high-quality and accurate PET images.

Other studies varied the inputs and training labels. Cui et al. [22] proposed an unsupervised deep learning method with a UNET structure for PET image denoising. In their approach, the patient's prior MR image is the network input and the noisy PET image is the training label. Their method requires neither high-quality images as training labels nor prior training or large datasets. Lu et al. [19] trained a 3D UNET model with just 8 LD images of lung cancer patients, generated from 10% of the corresponding FD data. This resulted in significant noise reduction and reduced bias in the detection of lung nodules. Sanaat et al. (2020) [26] introduced a slightly different approach: they used a UNET to learn a mapping from LD PET sinograms to FD PET sinograms, which improved the reconstructed FD PET images.

UNET-based methods have also been adapted to specific patient groups and imaging physics. Liu et al. [37] used a UNET structure to reduce the noise of clinical PET images of very obese patients to the noise level of lean patients. The model proposed by Sudarshan et al. [40] uses a UNET whose loss function incorporates the physics of the PET imaging system and the heteroscedasticity of the residuals. This design improved robustness to out-of-distribution data. In contrast to previous research that focused on specific body regions, Zhang et al. [62] proposed a comprehensive framework for hierarchically reconstructing total-body FD PET images. The framework addresses the diverse shapes and intensity distributions of different body parts. It employs a deep cascaded UNET as the global total-body network, followed by four local networks that refine the reconstruction for specific regions: head-neck, thorax, abdomen-pelvis, and legs.

More researchers, however, have designed GAN-like networks for FD PET image estimation. GANs have a more complex structure, and their adversarial loss can alleviate some problems attributed to CNNs, such as blurry outputs. For example, Wang et al. [15] (2018) developed a comprehensive framework using 3D conditional GANs, with skip connections added to the original UNET network. Their subsequent 2019 study [20] focused on multimodal GANs and locality-adaptive fusion techniques to fuse multimodality image information more effectively. Unlike two-dimensional (2D) models, the 3D convolution operations in their framework prevent discontinuous cross-like artifacts. Ouyang et al. (2019) [18] showed that a GAN architecture can produce comparable results even in the absence of MR information. Their GAN used feature matching in the discriminator and a pretrained amyloid status classifier.

Several GAN variants target training stability and generalization. Gong et al. [23] implemented PT-WGAN, which uses a Wasserstein generative adversarial network (WGAN) to denoise LD PET images. PT-WGAN uses a parameter transfer strategy: the parameters of a pre-trained WGAN are transferred to the PT-WGAN network, allowing it to build on the pre-trained model and improve its denoising of LD PET images. In similar work, Hu et al. [35] used a Wasserstein GAN to directly predict the FD PET image from LD PET sinogram data. Xue et al. developed a deep learning method based on a conditional GAN to recover high-quality images from LD PET scans. Their model was trained on 18F-FDG images from one scanner and tested on different scanners and tracers. Zhou et al. [49] proposed a novel segmentation-guided, style-based GAN for PET synthesis, which uses 3D segmentation to guide the GAN toward accurate and realistic PET images. By integrating style-based techniques, the method enhances the quality and consistency of the synthesized images.

Other studies adapted GANs to specific modalities and inputs. Fujioka et al. [50] applied the pix2pix GAN to improve the image quality of low-count dedicated breast PET images. This was reported as the first study to use pix2pix GAN for dedicated breast PET image synthesis, which is challenging because of the high noise and low resolution of dedicated breast PET images. In similar work, Hosch et al. [54] used an image-to-image translation framework to generate synthetic FD PET images from ultra-low-count PET and CT images. Their network used group convolution to process the two inputs separately in the first layer. Fu et al. [65] introduced a GAN architecture known as AIGAN, designed for efficient and accurate reconstruction of both low-dose CT and LD PET images. AIGAN combines three modules: a cascade generator, a dual-scale discriminator, and a multi-scale spatial fusion module. It enhances the images in stages, first making coarse improvements and then refining them with attention-based techniques.
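Many of the GAN-based studies above build on two standard objectives, shown here for reference. The first is the conditional GAN objective popularized by pix2pix, which combines an adversarial term with a pixel-wise L1 term. The second is the Wasserstein objective used by WGANs. In this notation, $x$ is the LD input, $y$ the FD target, $G$ the generator, $D$ the discriminator (critic), and $\lambda$ a weighting hyperparameter. Individual studies modify these losses, so the block should be read as a generic template rather than any specific paper's formulation. In WGANs, the 1-Lipschitz constraint on $D$ is commonly enforced by weight clipping or a gradient penalty.

```latex
\begin{aligned}
\mathcal{L}_{\mathrm{cGAN}}(G, D) &= \mathbb{E}_{x,y}\big[\log D(x, y)\big] + \mathbb{E}_{x}\big[\log\big(1 - D(x, G(x))\big)\big] \\
G^{*}_{\mathrm{pix2pix}} &= \arg\min_{G}\max_{D}\; \mathcal{L}_{\mathrm{cGAN}}(G, D) + \lambda\,\mathbb{E}_{x,y}\big[\lVert y - G(x) \rVert_1\big] \\
G^{*}_{\mathrm{WGAN}} &= \arg\min_{G}\max_{\lVert D \rVert_{L} \le 1}\; \mathbb{E}_{y}\big[D(y)\big] - \mathbb{E}_{x}\big[D(G(x))\big]
\end{aligned}
```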

Recently, several articles have adopted Cycle-GAN, a variation of the GAN framework. Lei et al. [16] used a Cycle-GAN model to accurately predict FD whole-body 18F-FDG PET images from inputs containing only 1/8th of the full-dose counts. In a 2020 study [32], they used a similar approach that incorporated CT images into the network to aid PET image synthesis from LD data. That study used a small dataset of 16 patients. Also in 2020, Zhou et al. [25] proposed a supervised deep learning model based on Cycle-GAN for PET denoising. Ghafari et al. [47] introduced a Cycle-GAN model to generate standard scan-duration PET images from short scan-duration inputs. The authors evaluated model performance across different radiotracers, scan durations, and body mass indexes. They reported that the optimal scan duration depends on the trade-off between image quality and scan efficiency.
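A supervised Cycle-GAN of the kind described above typically combines adversarial, cycle-consistency, identity, and supervised terms. The block below shows one common way of writing these terms, for illustration only. Here $G$ maps LD images $x$ to FD images $y$, $F$ maps FD back to LD, and $D_X$, $D_Y$ are the two discriminators. The choice of the L1 norm and the weights $\lambda$ are assumptions; the cited studies may use different norms and weights.

```latex
\begin{aligned}
\mathcal{L}_{\mathrm{cyc}} &= \mathbb{E}_{x}\big[\lVert F(G(x)) - x \rVert_1\big] + \mathbb{E}_{y}\big[\lVert G(F(y)) - y \rVert_1\big] \\
\mathcal{L}_{\mathrm{id}} &= \mathbb{E}_{y}\big[\lVert G(y) - y \rVert_1\big] + \mathbb{E}_{x}\big[\lVert F(x) - x \rVert_1\big] \\
\mathcal{L}_{\mathrm{sup}} &= \mathbb{E}_{x,y}\big[\lVert G(x) - y \rVert_1\big] + \mathbb{E}_{x,y}\big[\lVert F(y) - x \rVert_1\big] \\
\mathcal{L} &= \mathcal{L}_{\mathrm{adv}}(G, D_Y) + \mathcal{L}_{\mathrm{adv}}(F, D_X) + \lambda_{\mathrm{cyc}}\,\mathcal{L}_{\mathrm{cyc}} + \lambda_{\mathrm{id}}\,\mathcal{L}_{\mathrm{id}} + \lambda_{\mathrm{sup}}\,\mathcal{L}_{\mathrm{sup}}
\end{aligned}
```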

Among other architectures, Zhou et al. [61] proposed a federated transfer learning (FTL) framework for LD PET denoising using heterogeneous LD data. The authors reported that their UNET-based method efficiently uses heterogeneous LD data without compromising data privacy. Compared with previous federated learning (FL) methods, it achieved superior LD PET denoising performance across institutions with different LD settings. In a different work, Yie et al. [29] applied Noise2Noise, a self-supervised technique, to remove PET noise. Feng et al. (2020) [31] used a CNN for PET sinogram denoising and a GAN for reconstruction.
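Federated approaches such as the one above train models at several institutions and share model parameters rather than images. The NumPy sketch below shows federated averaging (FedAvg), the standard aggregation rule in federated learning. It is a generic illustration, not the FTL algorithm of [61]. The parameter names, values, and site sizes are hypothetical.

```python
import numpy as np

def fedavg(site_params, site_sizes):
    """Federated averaging: weight each site's parameters by its number of
    local training samples. site_params is a list of dicts with identical
    keys and array shapes (parameter name -> np.ndarray)."""
    total = float(sum(site_sizes))
    return {
        name: sum(params[name] * (n / total) for params, n in zip(site_params, site_sizes))
        for name in site_params[0]
    }

# Two hypothetical institutions with different numbers of LD/FD training pairs.
site_a = {"conv1.weight": np.full((3, 3), 1.0), "conv1.bias": np.array([0.0])}
site_b = {"conv1.weight": np.full((3, 3), 4.0), "conv1.bias": np.array([3.0])}
global_params = fedavg([site_a, site_b], site_sizes=[200, 100])

# Weighted means: weight = (200*1 + 100*4) / 300, bias = (200*0 + 100*3) / 300.
print(global_params["conv1.weight"][0, 0], global_params["conv1.bias"])
```

In a full FL loop, the server would broadcast `global_params` back to the sites for the next round of local training. Only parameters leave each institution.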

The chosen architecture can be trained with different configurations and input data types. Below, we review the types of inputs used in the included articles: 2D, 2.5D, 3D, multi-channel, and multi-modality.

- 2D inputs (single slices or patches) were used in several studies [12, 14, 17, 21, 24, 25, 27, 29, 47, 50, 52, 53, 56, 57, 67, 68]. In these studies, researchers extracted slices or patches from 3D images and treated them separately when training the model.
- A 2.5D (multi-slice) model stacks adjacent slices to incorporate morphological information [13, 18, 36, 40, 42,43,44, 46, 48, 54, 61, 65]. This differs from a 3D convolutional network in how the data are represented. A 2.5D model treats the adjacent slices as input channels of 2D convolutions.
- A 3D approach also applies convolutions along the depth axis and takes the whole volume as input. Sixteen studies used a 3D training approach [15, 16, 19, 20, 22, 23, 32, 34, 37, 41, 45, 49, 51, 55, 62, 66].
- Multi-channel input refers to input data with multiple channels, each representing a different aspect of the input. By processing each channel separately before combining them, the network can capture information from each channel that is relevant to the task at hand. Papers [12, 68] used this technique, enabling the network to learn more complex relationships between data features.
- Multi-modality data were used by some researchers to provide more complete information to their models. For instance, combining structural information from CT [32, 45, 54, 62, 66] and MRI [12, 17, 20, 36, 40, 42, 52, 56, 57] with functional information from PET contributes to better image quality.
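The three input types differ mainly in how much of the volume the network sees at once. The NumPy sketch below shows only how such inputs might be shaped from one volume; no network is involved. The channel-first layout, the slab half-width, the patch size, and the clamping at the volume edges are assumptions chosen for illustration.

```python
import numpy as np

def slice_2d(volume: np.ndarray, z: int) -> np.ndarray:
    """2D input: one axial slice with a channel axis, shape (1, H, W)."""
    return volume[z][np.newaxis]

def slab_25d(volume: np.ndarray, z: int, half_width: int = 1) -> np.ndarray:
    """2.5D input: 2*half_width+1 adjacent slices stacked as channels, shape (C, H, W).
    Out-of-range neighbours are clamped to the first/last slice (one simple choice)."""
    idx = np.clip(np.arange(z - half_width, z + half_width + 1), 0, volume.shape[0] - 1)
    return volume[idx]

def patch_3d(volume: np.ndarray, z: int, y: int, x: int, size: int = 16) -> np.ndarray:
    """3D input: a cubic sub-volume with a channel axis, shape (1, D, H, W)."""
    return volume[np.newaxis, z:z + size, y:y + size, x:x + size]

vol = np.random.default_rng(0).random((40, 128, 128))  # hypothetical LD PET volume (D, H, W)
print(slice_2d(vol, 20).shape)          # (1, 128, 128)
print(slab_25d(vol, 20).shape)          # (3, 128, 128)
print(slab_25d(vol, 0).shape)           # (3, 128, 128), first slice repeated
print(patch_3d(vol, 10, 32, 32).shape)  # (1, 16, 16, 16)
```

A 2D or 2.5D network applies 2D convolutions over the (H, W) axes of these arrays. A 3D network convolves over (D, H, W), which is why it can enforce consistency across slices.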

The choice of loss function is another critical setting in deep neural networks, because it directly affects model performance and output quality. Different loss functions prioritize different aspects of the predictions, such as accuracy, smoothness, or sparsity. Among the reviewed articles, the mean squared error (MSE) loss was chosen most often [12, 26, 29, 32, 44, 60, 62, 66, 67]. L1 losses were used in [13, 15, 17, 20, 27, 39, 48, 57, 58], and L2 losses in [19, 22, 37, 61, 68]. MSE (a form of L2 loss) penalizes large errors more heavily because the errors are squared. The mean absolute error (MAE) loss (a form of L1 loss) instead averages the absolute differences between corresponding pixel values, weighting each error in proportion to its magnitude [55]. The Huber loss combines the benefits of MSE and MAE by using squared differences for small errors and absolute differences for large ones [45].
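The three pixel-wise losses behave differently when a few voxels have large errors. The NumPy sketch below implements them in their common textbook forms. The Huber threshold `delta=1.0` and the toy error vector are illustrative assumptions, not values taken from the reviewed studies.

```python
import numpy as np

def mse(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean squared error (L2-type): large errors dominate."""
    return float(np.mean((pred - target) ** 2))

def mae(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean absolute error (L1-type): errors count in proportion to their size."""
    return float(np.mean(np.abs(pred - target)))

def huber(pred: np.ndarray, target: np.ndarray, delta: float = 1.0) -> float:
    """Quadratic for |error| <= delta, linear beyond; continuous at delta."""
    err = np.abs(pred - target)
    quad = 0.5 * err ** 2
    lin = delta * (err - 0.5 * delta)
    return float(np.mean(np.where(err <= delta, quad, lin)))

target = np.zeros(4)
pred = np.array([0.5, 0.5, 0.5, 4.0])   # three small errors and one outlier
print(mse(pred, target), mae(pred, target), huber(pred, target))
# prints: 4.1875 1.375 0.96875
```

In this example the single outlier contributes 16 of the 16.75 summed squared errors under MSE. Under MAE and Huber, its influence is only linear.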

Because networks trained with the MSE loss tend to produce blurry outputs, some studies adopted a perceptual loss for training [21, 31, 56]. This loss is computed on features extracted by a pretrained network and can preserve image details better than the pixel-based MSE loss. Another approach was to add features such as gradient and total variation to the MSE in the loss function, to preserve edges, structural details, and natural texture [14].

Adversarial learning can suffer from hallucinated structures and training instability. It can also synthesize images of high visual quality that do not match clinical interpretations. To address these problems, studies combined several optimization objectives:

- pixel-wise L1 loss
- structured adversarial loss [49, 50]
- feature-matching and task-specific perceptual losses, which encourage the generated images to match the expected feature values on intermediate network layers, such as those of the discriminator [18]

In supervised Cycle-GANs, where LD and FD PET images are paired, four types of losses were employed: adversarial loss, cycle-consistency loss, identity loss, and supervised learning loss [24, 25, 47]. Other studies combined loss functions in different ways to guide the model toward images or volumes similar to the ground truth data [32, 35, 40, 41, 46, 52, 53, 56, 65].
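Edge-aware terms of the kind added to the MSE in [14] can be sketched as follows. The exact term definitions and weights of that study are not given here. The NumPy sketch below is a hypothetical composite loss with three terms: an MSE term, a gradient-difference term that penalizes blurred edges, and a total-variation term on the prediction that penalizes residual noise. The weights `w_grad` and `w_tv` are arbitrary placeholders.

```python
import numpy as np

def gradients(img: np.ndarray):
    """Forward finite differences along rows and columns of a 2D image."""
    return np.diff(img, axis=0), np.diff(img, axis=1)

def gradient_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean squared difference between image gradients (edge preservation)."""
    (pr, pc), (tr, tc) = gradients(pred), gradients(target)
    return float(np.mean((pr - tr) ** 2) + np.mean((pc - tc) ** 2))

def total_variation(img: np.ndarray) -> float:
    """Anisotropic total variation (mean absolute gradient)."""
    dr, dc = gradients(img)
    return float(np.mean(np.abs(dr)) + np.mean(np.abs(dc)))

def composite_loss(pred, target, w_grad=0.1, w_tv=0.01) -> float:
    """MSE + weighted gradient term + weighted TV term (hypothetical weights)."""
    mse = float(np.mean((pred - target) ** 2))
    return mse + w_grad * gradient_loss(pred, target) + w_tv * total_variation(pred)

# A sharp vertical edge and a blurred copy of it (2-tap horizontal average).
target = np.zeros((8, 8))
target[:, 4:] = 1.0
blurred = 0.5 * (target + np.roll(target, 1, axis=1))
print(gradient_loss(target, target), gradient_loss(blurred, target))
print(composite_loss(target, target), composite_loss(blurred, target))
```

The gradient term is zero only when the predicted edges match the target's edges. The TV term discourages noise but, if weighted too heavily, would also flatten real structure.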

For model validation, the studies used different approaches, including external datasets and cross-validation on the training dataset.
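Many of these models are trained on slices or patches, so cross-validation folds should be formed at the patient level. Otherwise, slices from the same patient can appear in both training and validation sets, which tends to overestimate performance. The text does not say how each study formed its folds. The NumPy sketch below shows patient-level k-fold splitting under that assumption, on a hypothetical dataset of 10 patients with 50 slices each.

```python
import numpy as np

def patient_kfold(patient_ids: np.ndarray, k: int = 5, seed: int = 0):
    """Yield (train_idx, val_idx) index arrays such that all samples (slices or
    patches) of one patient fall into the same fold."""
    patients = np.unique(patient_ids)
    rng = np.random.default_rng(seed)
    rng.shuffle(patients)                       # random assignment of patients to folds
    for val_patients in np.array_split(patients, k):
        val_mask = np.isin(patient_ids, val_patients)
        yield np.flatnonzero(~val_mask), np.flatnonzero(val_mask)

# Hypothetical dataset: 10 patients x 50 slices each.
ids = np.repeat(np.arange(10), 50)
folds = list(patient_kfold(ids, k=5))
for train_idx, val_idx in folds:
    # No patient contributes slices to both sides of a split.
    assert set(ids[train_idx]).isdisjoint(set(ids[val_idx]))
print([sorted(set(ids[v].tolist())) for _, v in folds])
```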

Evaluation metrics

To assess the effectiveness of PET image synthesis, two approaches were used: quantitative evaluation of image quality and qualitative evaluation of the FD images predicted from LD images. Various image quality metrics were used to compare the estimated PET images with the corresponding FD PET images. These metrics measured image-wise similarity, structural similarity, pixel-wise variability, noise, contrast, colorfulness, and signal-to-noise ratio. Peak signal-to-noise ratio (PSNR) was the most popular metric for quantitative image evaluation. Other metrics included:

- normalized root mean square error (NRMSE)
- structural similarity index measure (SSIM)
- normalized mean square error (NMSE)
- root mean square error (RMSE)
- frequency-based blurring measurement (FBM)
- edge-based blurring measurement (EBM)
- contrast recovery coefficient (CRC)
- contrast-to-noise ratio (CNR)
- signal-to-noise ratio (SNR)

Additionally, the bias in the mean and maximum standardized uptake values (SUVmean and SUVmax) was calculated for clinical semi-quantitative evaluation. Several studies used physician evaluations to clinically assess the PET images generated by different models, alongside the corresponding reference FD and LD PET images.
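The most common quantitative metrics are short formulas, but conventions differ between studies. The choice of peak value in PSNR and the normalization in NRMSE both vary, so reported numbers are not always directly comparable. The NumPy sketch below implements one common convention for each metric. It also computes body-weight SUV, assuming decay-corrected activity in Bq/mL and a tissue density of 1 g/mL. The images, injected activity, and body weight are hypothetical.

```python
import numpy as np

def psnr(pred, ref, data_range=None) -> float:
    """PSNR in dB; by default the peak is the reference's range (max - min)."""
    if data_range is None:
        data_range = float(ref.max() - ref.min())
    mse = float(np.mean((pred - ref) ** 2))
    return float(10.0 * np.log10(data_range ** 2 / mse))

def nrmse(pred, ref) -> float:
    """RMSE normalized by the RMS of the reference (one of several conventions)."""
    return float(np.sqrt(np.mean((pred - ref) ** 2)) / np.sqrt(np.mean(ref ** 2)))

def suv(activity_bq_per_ml, injected_dose_bq, body_weight_g):
    """Body-weight SUV (assumes decay-corrected activity, 1 g/mL tissue density)."""
    return activity_bq_per_ml * body_weight_g / injected_dose_bq

def suv_bias(pred_suv, ref_suv, mask):
    """Percentage bias of SUVmean and SUVmax inside a region such as a lesion."""
    p, r = pred_suv[mask], ref_suv[mask]
    mean_bias = 100.0 * (p.mean() - r.mean()) / r.mean()
    max_bias = 100.0 * (p.max() - r.max()) / r.max()
    return float(mean_bias), float(max_bias)

# Hypothetical FD reference (Bq/mL) with one hot lesion, and a prediction that
# underestimates uptake by 5% and adds mild Gaussian noise.
rng = np.random.default_rng(0)
ref = np.full((64, 64), 5_000.0)
ref[20:26, 20:26] = 20_000.0
pred = 0.95 * ref + rng.normal(0.0, 100.0, ref.shape)

lesion = ref > 10_000.0
dose_bq, weight_g = 370e6, 70_000.0   # hypothetical injected activity and body weight
ref_suv, pred_suv = suv(ref, dose_bq, weight_g), suv(pred, dose_bq, weight_g)
print(f"PSNR {psnr(pred, ref):.1f} dB, NRMSE {nrmse(pred, ref):.3f}")
print("SUVmean / SUVmax bias (%):", suv_bias(pred_suv, ref_suv, lesion))
```

Because the same dose and weight normalize both images, the SUV bias here equals the relative bias in activity concentration. For SSIM, a tested implementation such as `skimage.metrics.structural_similarity` in scikit-image is preferable to a hand-written one.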

Discussion

The objective of the reviewed articles is to estimate high-quality, FD PET images from LD PET images by deep learning. This is a challenging problem because the LD PET images have reduced signal-to-noise ratio (SNR) and contrast compared to the FD images. To address this issue, researchers have proposed various models such as CNNs and GANs with varying structures to generate high-quality images from low-quality inputs.

Despite the potential of deep learning-based techniques for estimating FD PET images from LD scans, the investigated studies face several challenges. First, the LD data were typically generated by randomly under-sampling the FD PET data rather than acquired after a truly reduced injected dose. Models should therefore be further evaluated on actual ultra-low-dose acquisitions.
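LD data of this kind are commonly simulated by randomly discarding detected events from the FD acquisition. If the FD counts are Poisson distributed, keeping each count independently with probability $p$ (binomial thinning) yields counts that are again Poisson with a mean scaled by $p$. The NumPy sketch below applies this to a hypothetical sinogram; real studies typically thin list-mode events instead. Thinning does not reproduce effects that change with a truly lower injected activity, such as the randoms fraction and dead time. This is one reason evaluation on real LD acquisitions matters.

```python
import numpy as np

def thin_counts(counts: np.ndarray, fraction: float, rng: np.random.Generator) -> np.ndarray:
    """Binomial thinning: keep each detected count with probability `fraction`."""
    return rng.binomial(counts, fraction)

rng = np.random.default_rng(0)
fd_sino = rng.poisson(50.0, size=(180, 128))   # hypothetical FD sinogram counts
ld_sino = thin_counts(fd_sino, 0.1, rng)       # keep 10% of counts (dose reduction factor 10)

print(fd_sino.sum(), ld_sino.sum())
print("count ratio:", ld_sino.mean() / fd_sino.mean())      # close to 0.1
print("LD variance/mean:", ld_sino.var() / ld_sino.mean())  # close to 1, as for Poisson data
```

The fraction would range from 0.002 to 0.5 to cover the 0.2% to 50% dose levels reported for the included studies.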

A second limitation is that many studies did not evaluate the clinical impact or utility of the proposed methods. Such outcomes, including effects on diagnosis, prognosis, or treatment planning, are important for PET imaging applications. Most studies evaluated performance using only image-based metrics, without assessing the impact on actual clinical tasks and outcomes. Deep learning models can produce estimated FD PET images that are quantitatively similar to true FD images. However, this does not necessarily mean that the estimates have equivalent clinical utility and actionability. Rigorous clinical validation studies involving real patients and clinicians are therefore essential before these techniques can be adopted in medical practice.

Another limitation shared by most of the investigated studies, as is common in medical imaging, is the limited amount of training data, often from a single PET system. This may limit the generalizability and robustness of the proposed methods to different scanners, protocols, and populations. To mitigate this, the studies used measures such as data augmentation, cross-validation, slice- and patch-based training, and 2D inputs.

2D inputs are often used because of the limited training data and the complexity of the task, but they introduce their own challenge. With 2D inputs, the model may produce inconsistent or unrealistic results across adjacent slices of the reconstructed image, known as the discontinuous cross-slice estimation issue. To mitigate this issue, some researchers have proposed alternatives, such as 3D GANs, or have incorporated additional information, such as multi-modal imaging data, into the model. These methods can help improve the consistency and quality of the reconstructed images.

Another challenge is the limited range of loss functions used in these models, which can affect the performance and characteristics of the synthesized FD images. Many studies used L1 or MSE losses, which do not fully preserve edge and texture details in the synthesized images. Loss functions that incorporated perceptual terms or emphasized edge and texture preservation produced higher-quality images with less blurring and fewer artifacts. Multi-component losses combining adversarial, perceptual, and pixel-wise terms were found to be effective in some studies.

LD PET acquisitions offer several potential clinical benefits. They can support lesion detection and tumor assessment while reducing radiation exposure, which is crucial for pediatric patients and for patients who undergo frequent scans. Faster low-count scans could increase clinic throughput, lower costs, and extend access to PET. Combining LD PET with MRI or CT adds complementary anatomical information, and deep learning could help personalize PET doses for each patient. Together, these developments promise safer, more efficient, and more accessible PET imaging. However, clinical studies are still needed to confirm their effectiveness, and future validation could lead to new clinical standards.

Conclusions

In conclusion, while deep learning-based approaches show early success in synthesizing Full-Dose PET images from Low-Dose scans, further technical advances, larger datasets, improved model evaluation and extensive clinical validation are still required before these techniques can be reliably adopted in clinical practice.

Availability of data and materials

All data generated or analysed during this study are included in this published article.

Abbreviations

- PET/CT: Positron Emission Tomography / Computed Tomography
- FDG: 18F-2-fluoro-2-deoxy-D-glucose
- SUV: Standardized Uptake Value
- SNR: Signal to Noise Ratio
- CNR: Contrast to Noise Ratio
- FWHM: Full Width at Half Maximum
- CR: Contrast Recovery
- LD: Low-Dose
- FD: Full-Dose

References

Gillings N. Radiotracers for positron emission tomography imaging. Magn Reson Mater Phys, Biol Med. 2013;26:149–58.

Boellaard R. Standards for PET image acquisition and quantitative data analysis. J Nucl Med. 2009;50(Suppl 1):11S–20S.

Arabi H, Zaidi H. Improvement of image quality in PET using post-reconstruction hybrid spatial-frequency domain filtering. Phys Med Biol. 2018;63(21):215010.

Wallach D, Lamare F, Roux C, Visvikis D. Comparison between reconstruction-incorporated super-resolution and super-resolution as a post-processing step for motion correction in PET. In: IEEE Nuclear Science Symposium & Medical Imaging Conference. IEEE; 2010. p. 2294–7.

Fin L, Bailly P, Daouk J, Meyer ME. A practical way to improve contrast-to-noise ratio and quantitation for statistical-based iterative reconstruction in whole-body PET imaging. Med Phys. 2009;36(7):3072–9.

Li Y. Noise propagation for iterative penalized-likelihood image reconstruction based on Fisher information. Phys Med Biol. 2011;56(4):1083.

Yu S, Muhammed HH. Comparison of pre- and post-reconstruction denoising approaches in positron emission tomography. In: 2016 1st International Conference on Biomedical Engineering (IBIOMED). IEEE; 2016. p. 1–6.

Riddell C, Carson RE, Carrasquillo JA, Libutti SK, Danforth DN, Whatley M, Bacharach SL. Noise reduction in oncology FDG PET images by iterative reconstruction: a quantitative assessment. J Nucl Med. 2001;42(9):1316–23.

Akamatsu G, Ishikawa K, Mitsumoto K, Taniguchi T, Ohya N, Baba S, Abe K, Sasaki M. Improvement in PET/CT image quality with a combination of point-spread function and time-of-flight in relation to reconstruction parameters. J Nucl Med. 2012;53(11):1716–22.

Moher D, et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–9.

Mongan J, Moy L, Kahn CE Jr. Checklist for artificial intelligence in medical imaging (CLAIM): a guide for authors and reviewers. Radiol Artif Intell. 2020;2(2):e200029.

Xiang L, et al. Deep auto-context convolutional neural networks for standard-dose PET image estimation from low-dose PET/MRI. Neurocomputing. 2017;267:406–16.

Xu J, et al. 200x low-dose PET reconstruction using deep learning. arXiv preprint arXiv:1712.04119. 2017.

Kaplan S, Zhu Y-M. Full-dose PET image estimation from low-dose PET image using deep learning: a pilot study. J Digit Imaging. 2019;32(5):773–8.

Wang Y, et al. 3D conditional generative adversarial networks for high-quality PET image estimation at low dose. Neuroimage. 2018;174:550–62.

Lei Y, et al. Whole-body PET estimation from low count statistics using cycle-consistent generative adversarial networks. Phys Med Biol. 2019;64(21):215017.

Chen KT, et al. Ultra–low-dose 18F-florbetaben amyloid PET imaging using deep learning with multi-contrast MRI inputs. Radiology. 2019;290(3):649–56.

Ouyang J, et al. Ultra-low-dose PET reconstruction using generative adversarial network with feature matching and task-specific perceptual loss. Med Phys. 2019;46(8):3555–64.

Lu W, et al. An investigation of quantitative accuracy for deep learning based denoising in oncological PET. Phys Med Biol. 2019;64(16):165019.

Wang Y, et al. 3D auto-context-based locality adaptive multi-modality GANs for PET synthesis. IEEE Trans Med Imaging. 2018;38(6):1328–39.

Gong K, et al. PET image denoising using a deep neural network through fine tuning. IEEE Trans Radiat Plasma Med Sci. 2018;3(2):153–61.

Cui J, et al. PET image denoising using unsupervised deep learning. Eur J Nucl Med Mol Imaging. 2019;46:2780–9.

Gong Y, et al. Parameter-transferred Wasserstein generative adversarial network (PT-WGAN) for low-dose PET image denoising. IEEE Trans Radiat Plasma Med Sci. 2020;5(2):213–23.

Zhao K, et al. Study of low-dose PET image recovery using supervised learning with CycleGAN. PLoS ONE. 2020;15(9):e0238455.

Zhou L, et al. Supervised learning with CycleGAN for low-dose FDG PET image denoising. Med Image Anal. 2020;65:101770.

Sanaat A, et al. Projection space implementation of deep learning–guided low-dose brain PET imaging improves performance over implementation in image space. J Nucl Med. 2020;61(9):1388–96.

Spuhler K, et al. Full-count PET recovery from low-count image using a dilated convolutional neural network. Med Phys. 2020;47(10):4928–38.

Chen KT, et al. Generalization of deep learning models for ultra-low-count amyloid PET/MRI using transfer learning. Eur J Nucl Med Mol Imaging. 2020;47:2998–3007.

Yie SY, et al. Self-supervised PET denoising. Nucl Med Mol Imaging. 2020;54:299–304.

Mehranian A, Reader AJ. Model-based deep learning PET image reconstruction using forward–backward splitting expectation–maximization. IEEE Trans Radiat Plasma Med Sci. 2020;5(1):54–64.

Feng Q, Liu H. Rethinking PET image reconstruction: ultra-low-dose, sinogram and deep learning. In: Medical Image Computing and Computer Assisted Intervention – MICCAI 2020: 23rd International Conference, Lima, Peru, October 4–8, 2020, Proceedings, Part VII. Springer; 2020.

Lei Y, et al. Low dose PET imaging with CT-aided cycle-consistent adversarial networks. In: Medical Imaging 2020: Physics of Medical Imaging. SPIE; 2020.

Sanaat A, et al. Deep learning-assisted ultra-fast/low-dose whole-body PET/CT imaging. Eur J Nucl Med Mol Imaging. 2021;48:2405–15.

Zhou B, et al. MDPET: a unified motion correction and denoising adversarial network for low-dose gated PET. IEEE Trans Med Imaging. 2021;40(11):3154–64.

Hu Z, et al. DPIR-Net: Direct PET image reconstruction based on the Wasserstein generative adversarial network. IEEE Trans Radiat Plasma Med Sci. 2020;5(1):35–43.

Wang Y-R, et al. Artificial intelligence enables whole-body positron emission tomography scans with minimal radiation exposure. Eur J Nucl Med Mol Imaging. 2021;48:2771–81.

Liu H, et al. PET image denoising using a deep-learning method for extremely obese patients. IEEE Trans Radiat Plasma Med Sci. 2021;6(7):766–70.

Xue H, et al. LCPR-Net: low-count PET image reconstruction using the domain transform and cycle-consistent generative adversarial networks. Quant Imaging Med Surg. 2021;11(2):749.

Chen KT, et al. True ultra-low-dose amyloid PET/MRI enhanced with deep learning for clinical interpretation. Eur J Nucl Med Mol Imaging. 2021;48:2416–25.

Sudarshan VP, et al. Towards lower-dose PET using physics-based uncertainty-aware multimodal learning with robustness to out-of-distribution data. Med Image Anal. 2021;73:102187.

Xue S, et al. A cross-scanner and cross-tracer deep learning method for the recovery of standard-dose imaging quality from low-dose PET. Eur J Nucl Med Mol Imaging. 2021:1–14.

Theruvath AJ, et al. Validation of deep learning–based augmentation for reduced 18F-FDG dose for PET/MRI in children and young adults with lymphoma. Radiol Artif Intell. 2021;3(6):e200232.

Chaudhari AS, et al. Low-count whole-body PET with deep learning in a multicenter and externally validated study. NPJ Digit Med. 2021;4(1):127.

Park CJ, et al. Initial experience with low-dose 18F-fluorodeoxyglucose positron emission tomography/magnetic resonance imaging with deep learning enhancement. J Comput Assist Tomogr. 2021;45(4):637.

Ladefoged CN, et al. Low-dose PET image noise reduction using deep learning: application to cardiac viability FDG imaging in patients with ischemic heart disease. Phys Med Biol. 2021;66(5):054003.

Peng Z, et al. Feasibility evaluation of PET scan-time reduction for diagnosing amyloid-β levels in Alzheimer’s disease patients using a deep-learning-based denoising algorithm. Comput Biol Med. 2021;138:104919.

Ghafari A, et al. Generation of 18F-FDG PET standard scan images from short scans using cycle-consistent generative adversarial network. Phys Med Biol. 2022;67(21):215005.

Xing Y, et al. Deep learning-assisted PET imaging achieves fast scan/low-dose examination. EJNMMI Phys. 2022;9(1):1–17.

Zhou Y, et al. 3D segmentation guided style-based generative adversarial networks for PET synthesis. IEEE Trans Med Imaging. 2022;41(8):2092–104.

Fujioka T, et al. Proposal to Improve the Image Quality of Short-Acquisition Time-Dedicated Breast Positron Emission Tomography Using the Pix2pix Generative Adversarial Network. Diagnostics. 2022;12(12):3114.

de Vries BM, et al. 3D Convolutional Neural Network-Based Denoising of Low-Count Whole-Body 18F-Fluorodeoxyglucose and 89Zr-Rituximab PET Scans. Diagnostics. 2022;12(3):596.

Sun H, et al. High-quality PET image synthesis from ultra-low-dose PET/MRI using bi-task deep learning. Quant Imaging Med Surg. 2022;12(12):5326.

Luo Y, et al. Adaptive rectification based adversarial network with spectrum constraint for high-quality PET image synthesis. Med Image Anal. 2022;77:102335.

Hosch R, et al. Artificial intelligence guided enhancement of digital PET: scans as fast as CT? Eur J Nucl Med Mol Imaging. 2022;49(13):4503–15.

Daveau RS, et al. Deep learning based low-activity PET reconstruction of [11C]PiB and [18F]FE-PE2I in neurodegenerative disorders. Neuroimage. 2022;259:119412.

Deng F, et al. Low-Dose 68Ga-PSMA Prostate PET/MRI Imaging Using Deep Learning Based on MRI Priors. Front Oncol. 2022;11:818329.

Zhang L, et al. Spatial adaptive and transformer fusion network (STFNet) for low-count PET blind denoising with MRI. Med Phys. 2022;49(1):343–56.

Wang T, et al. Deep progressive learning achieves whole-body low-dose 18F-FDG PET imaging. EJNMMI Phys. 2022;9(1):82.

Yoshimura T, et al. Medical radiation exposure reduction in PET via super-resolution deep learning model. Diagnostics. 2022;12(4):872.

Hu R, Liu H. TransEM: Residual swin-transformer based regularized PET image reconstruction. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2022.

Zhou B, et al. Federated transfer learning for low-dose PET denoising: a pilot study with simulated heterogeneous data. IEEE Trans Radiat Plasma Med Sci. 2022;7(3):284–95.

Zhang J, et al. Hierarchical organ-aware total-body standard-dose PET reconstruction from low-dose PET and CT images. IEEE Trans Neural Netw Learn Syst. 2023.

Jiang C, et al. Semi-supervised standard-dose PET image generation via region-adaptive normalization and structural consistency constraint. IEEE Trans Med Imaging. 2023.

Onishi Y, et al. Self-supervised pre-training for deep image prior-based robust PET image denoising. IEEE Trans Radiat Plasma Med Sci. 2023.

Fu Y, et al. AIGAN: Attention–encoding Integrated Generative Adversarial Network for the reconstruction of low-dose CT and low-dose PET images. Med Image Anal. 2023;86:102787.

Hu Y, et al. Comparative study of the quantitative accuracy of oncological PET imaging based on deep learning methods. Quant Imaging Med Surg. 2023;13(6):3760.

Liu K, et al. A Lightweight Low-dose PET Image Super-resolution Reconstruction Method based on Convolutional Neural Network. Curr Med Imaging. 2023;19(12):1427–35.

Sanaei B, Faghihi R, Arabi H. Employing multiple low-dose PET images (at different dose levels) as prior knowledge to predict standard-dose PET images. J Digit Imaging. 2023:1–9.

Jang S-I, et al. Spach Transformer: spatial and channel-wise transformer based on local and global self-attentions for PET image denoising. IEEE Trans Med Imaging. 2023.

Acknowledgements

We acknowledge the use of artificial intelligence tools to improve the grammar and overall writing quality of this article.

Funding

This study was supported by a grant from Iran University of Medical Sciences (grant number 24177).

Author information

Contributions

NG.S. and P.S. conceived the research idea and developed the research methodology. NG.S., A.G., N.S., and P.S. wrote the main manuscript. All authors analyzed and validated the data and reviewed the manuscript.

Ethics declarations

Ethics approval and consent to participate

This research project was conducted under ethics approval code IR.IUMS.REC.1401.712.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Seyyedi, N., Ghafari, A., Seyyedi, N. et al. Deep learning-based techniques for estimating high-quality full-dose positron emission tomography images from low-dose scans: a systematic review. BMC Med Imaging 24, 238 (2024). https://doi.org/10.1186/s12880-024-01417-y

DOI: https://doi.org/10.1186/s12880-024-01417-y