- Research

- Open access

- Published:

An effective no-reference image quality index prediction with a hybrid Artificial Intelligence approach for denoised MRI images

BMC Medical Imaging volume 24, Article number: 208 (2024)

Abstract

As the quantity and significance of digital images in the medical industry continue to increase, Image Quality Assessment (IQA) has become a prevalent subject in the research community. Because Magnetic Resonance Images (MRI) can suffer from a wide range of distortions and contain a wide variety of information, No-Reference Image Quality Assessment (NR-IQA) has always been a challenging research problem. To address this problem, a novel hybrid Artificial Intelligence (AI) approach is proposed for NR-IQA of large volumes of MRI data. First, features are extracted from the denoised MRI images using the gray level run length matrix (GLRLM) and the EfficientNet B7 algorithm. Next, the Multi-Objective Reptile Search Algorithm (MRSA) is proposed for optimal feature vector selection. Then, the Self-evolving Deep Belief Fuzzy Neural network (SDBFN) algorithm is proposed for effective NR-IQ analysis. The research is implemented in MATLAB. The simulation results are compared with various conventional methods in terms of the Pearson linear correlation coefficient (PLCC), Root Mean Square Error (RMSE), Spearman Rank Order Correlation Coefficient (SROCC), Kendall Rank Order Correlation Coefficient (KROCC), and Mean Absolute Error (MAE). The proposed approach achieved an improvement of approximately 20% in quality score over existing methods, with a notable increase in PLCC compared with current techniques. Moreover, RMSE decreased by 12% compared with existing methods. Graphical results indicated mean MAE values of 0.02 for the MRI knee dataset, 0.09 for the MRI brain dataset, and 0.098 for the MRI breast dataset, significantly lower than those of the baseline models.

Introduction

In recent years, digital photography, remote sensing, satellite imagery, and medical imaging have all advanced significantly. This growth has been accompanied by advances in acquisition systems, including smartphones, digital cameras, and many other imaging devices [1], so users can now record, store, and share high-resolution images. Nevertheless, blurring, motion artifacts, ambient disturbances, and similar effects degrade real-world images [2]. As a result, evaluating image quality is essential and remains difficult for researchers. Over the past decade, image quality assessment (IQA) algorithms have been developed that can assess and predict image quality in agreement with human opinion [3], helping to give end users a high-quality experience. IQA methods can generally be divided into two groups. The first is subjective assessment, which relies on human judgments and is very precise. However, subjective testing is time-consuming and costly, which makes it difficult to integrate into a system as an optimization metric [4, 5]. Objective quality measurement techniques are therefore preferable for fast system performance evaluation and optimization. They typically use subjective evaluation data as the basis for training, so they predict picture quality in line with human perception [6].

Subjective evaluation results typically serve as the ground truth for training objective quality evaluation methods. Various full-reference (FR-IQA) and reduced-reference (RR-IQA) methods have been discussed in the literature, including SSIM [7], PSNR [8], FSIM [9], MSSIM [10], and VIF [11]. However, these methods are unsuitable for many applications that require real-time processing, because the original (reference) image cannot be accessed in real time under all imaging circumstances [12, 13].

Therefore, no-reference (NR) methods, also known as blind IQA models, have been the focus of most studies. NR methods assess image quality blindly using various criteria, including anisotropy [14], wavelet features [15], the discrete cosine transform (DCT) [16], and Gabor filtering [17]. These methods can evaluate the quality of a test image without prior knowledge of the reference image, but they are often too computationally expensive for real-time applications. In recent years, deep learning has come to dominate computer vision and image analysis, and objective IQA has followed the same trend. Various deep convolutional networks have been used to estimate perceptual image quality, including VGG16 [18], InceptionV3 [19], ResNet50 [20], DenseNet201 [21], InceptionResNetV2 [22], and NASNetMobile [23]. However, these techniques require substantial computation and long training times. MRI analysis has recently come to play an important role in modern healthcare. MRI scanners acquire images of different body parts, such as joints, legs, the head, and the abdomen, so MRI images of different body parts are used for quality assessment in this research. Various methods have been used to analyze MRI image quality, but the best achievable results have not yet been reached [24, 25]. Therefore, a novel intelligent methodology is proposed for accurate NR-IQA.

In our study, we integrate features extracted from EfficientNet-B7 into our No-Reference Image Quality Assessment (NR-IQA) framework to enhance the accuracy and robustness of image quality evaluation. EfficientNet-B7, known for its strong performance in image classification, provides hierarchical feature representations learned from a wide range of images. These features are particularly useful for NR-IQA because they capture fine details and semantic information across different scales, which is essential for comprehensive image quality assessment. Incorporating EfficientNet-B7 features gives our methodology several advantages. First, hierarchical feature extraction captures both fine detail and semantic context, which helps separate perceptually important characteristics from less relevant ones and thereby improves the precision of the quality assessment. Second, transfer learning with pre-trained EfficientNet-B7 weights helps the NR-IQA model generalize across diverse image types, even when reference data are limited, and improves its stability across varying image qualities and acquisition conditions. Third, the discriminative power of EfficientNet-B7 features enables more accurate differentiation between levels of image quality, supporting consistent performance in real-world scenarios. Beyond improving accuracy, this integration provides a scalable solution for handling diverse image datasets, and it illustrates the potential of using advanced classification models to enrich image quality assessment methods in image processing.

The following are the significant contributions of the study:

-

The proposed bilateral incorporated Wiener filter enhances the Wiener filter by incorporating bilateral filtering, which preserves edge information in MRI images. This improves image quality and reliability for clinical diagnosis and research, surpassing the performance of traditional Wiener filtering techniques.

-

The work employs the GLRLM and EfficientNet B7 algorithms to extract features from denoised MRI images. GLRLM analyzes texture by characterizing runs of consecutive pixels with the same gray level, while EfficientNet B7 is an efficient deep learning model for image classification. Together, they enable effective feature extraction for improved MRI interpretation.

-

The Multi-Objective Reptile Search Algorithm (MRSA) is introduced for selecting the best feature vector. MRSA is designed to handle multiple objectives simultaneously, making it suitable for feature selection tasks where multiple criteria need to be optimized. By using MRSA, the proposed method can effectively identify the most relevant features from a given feature set, thereby improving the efficiency and effectiveness of the feature selection process.

-

The self-evolving deep belief fuzzy neural network (SDBFN) method is proposed for effective NR-IQ analysis. SDBFN combines the capabilities of deep belief networks and fuzzy logic to model and analyze no-reference image quality (NR-IQ). It is particularly suitable for tasks in which reference images are not available for comparison, such as video quality assessment or image enhancement, and it can improve the quality and reliability of NR-IQ studies, leading to more robust image quality assessment techniques.

-

The proposed approach is comprehensively evaluated against earlier designs using large IQA datasets as benchmarks, which is crucial for advancing image processing. Testing methods across diverse images identifies their strengths and weaknesses, leading to improved algorithms and standardized benchmarking procedures.

The remainder of the article is arranged as follows. The Related work section summarizes current approaches. The Proposed methodology section presents the proposed NR-IQ evaluation methodology. Finally, the Result and discussion section presents an overview of all tests and key findings, and the Conclusion section explains the conclusions reached.

Related work

Many NR-IQA techniques have been published in recent years. NR-IQA algorithms can be broadly categorized as distortion-specific or general-purpose. Rajevenceltha and Gaidhane [26] introduced a rotation-invariant and computationally efficient NR-IQA model. The hyper-smoothing LBP (H-LBP), a modified LBP, and the Laplacian of H-LBP (LH-LBP) represent the structure of the image, and support vector regression (SVR) is used to predict image quality. Varga [23] developed a new deep learning-based NR-IQA design built on the decision fusion of multiple image quality scores from various convolutional neural networks. The method's primary premise is that multiple networks together can describe authentic image distortions more accurately than an individual network. Relevance and cross-database analyses supported these findings.

Bagade et al. [27] suggested a hybrid machine learning method for NR quality assessment. A feed-forward neural network receives blockiness-based parameters, additional statistical parameters, and NSS-based characteristics as input, and the quality value is predicted using backpropagation training. The predicted value is correlated with the differential mean opinion score (DMOS). The same factors are also used as input data for a support vector machine classifier, whose reported success rate is 89%. Obuchowicz et al. [28] presented a new BIQA technique for assessing MR images. They found that the effectiveness of non-maximum suppression (NMS) filtering is strongly influenced by the perceived quality of the input image, and that the entropy of a sequence of extrema counts obtained with thresholded NMS effectively describes image quality. According to a comprehensive experimental assessment of BIQA methods, the introduced measure correlates with human scores better than similar techniques by a wide margin.

The Self-evolving Deep Belief Fuzzy Neural Network (SDBFN) integrates deep belief networks (DBNs) and fuzzy neural networks (FNNs), combining deep learning's hierarchical data representation with fuzzy logic's handling of uncertainties and non-linearities. This hybrid approach excels in modeling complex relationships within image data for Image Quality Assessment (IQA), capturing both high-level semantic features and low-level perceptual details crucial for accurate evaluations. Recent studies have demonstrated SDBFN's superiority over traditional methods in IQA tasks, especially in non-reference scenarios, due to its adaptive learning and robust feature extraction capabilities. Its integration of fuzzy logic enhances its ability to address subjective aspects of image quality perception, improving metric accuracy and interpretability. Overall, SDBFN represents a significant advancement in IQA, promising more reliable and comprehensive image quality evaluation methodologies.

Chan et al. [29] suggested a hybrid deep neural network (DNN) that uses image quality statistics to capture quality-related image features, ensuring that important features can be used to predict image quality. In addition, a tree-based classifier called geometric semantic genetic programming produces the overall prediction by fusing CNN predictions and image characteristics. Although this method is simpler than fully connected networks, it can still model nonlinear image quality relationships. ur Rehman et al. [30] proposed DeepRPN-BIQA, a deep design with a region proposal network (RPN) for blind quality evaluation of natural-scene and screen-content images. Critical regions are recovered by sliding the network over feature maps extracted by deep networks such as VGGNet and ResNet, exploiting the texture and edges of the images. The region of interest (ROI) comprises all region proposals (RPs) that share more than 60% of their boundaries. Each ROI receives a local quality score, and the overall quality score is calculated by averaging all local quality scores.

Hu et al. [31] introduced a neural network-based BIQA method that uses two-sided pseudo-reference (TSPR) images. After the bilateral distance maps between the TSPR images and the distorted image are extracted, a regression neural network models how the human brain interprets this information. Experimental results show the algorithm's effectiveness and reliability, with better performance than state-of-the-art NR techniques.

The study in [35] presents a new diffeomorphism-based approach for non-rigid medical image registration across modalities such as MRI, CT, and 3D rotational angiography, using a non-stationary velocity field and a similarity energy function. The researchers optimized this framework to address deformation challenges and reported promising results, with an MSE of 1.3136, NCC of 0.9962, SS of 0.9897, MI of 0.883, FSIM of 0.9922, and MAE of 1.52 ± 2.09 on both private and public datasets [35]. Tracking instruments in laparoscopic and robotic surgery can improve focus and reduce errors, but it remains challenging because of motion blur, noise, lack of texture, and occlusion, and existing methods are time-consuming and less accurate for high-volume data [36]. Another study presents a semi-automatic algorithm that enhances contrast in low-contrast MRI and reconstructs the left ventricle surface with a new graph cut method, achieving an average Dice coefficient of 92.4% and a Hausdorff distance of 2.94 mm [37]. A comprehensive pipeline for cerebral blood flow simulation and real-time visualization has been introduced to address the critical clinical challenges of accurate detection and effective therapy for cerebral aneurysms [38]. A real-time, cost-efficient platform for HemeLB simulation and visualization of cerebral aneurysms has also been presented, in which the Jetson TX1 outperforms the Zynq SoC by a factor of 19 in site updates per second [39]. Efficient hardware architectures for lattice Boltzmann simulations on a Zynq SoC achieve a 52-fold speedup over a dual-core ARM processor implementation [40]. Several studies have advanced medical image segmentation with innovative neural network architectures and methodologies. ConvUNeXt, which incorporates ConvNeXt-inspired features into UNet, demonstrated improved segmentation performance with fewer parameters [41].
Res-PAC-UNet focused on liver CT segmentation, achieving high accuracy using Pyramid Atrous Convolutions and a fixed-width residual UNet backbone, showcasing a Dice similarity coefficient of 0.958 ± 0.015 with minimal parameters [42]. Another study proposed an efficient encoder-decoder DCNN model integrating ResNet and DenseNet features, surpassing existing methods in accuracy while maintaining fewer parameters [43]. For ultrasound image segmentation, a neural network-based approach using Pyramid Scene Parsing emphasized noise removal, achieving a Dice coefficient of 0.913 ± 0.024 and real-time processing capability of 37 frames per second [44]. CoTr introduced a novel framework combining CNNs with an efficient Deformable Transformer for 3D medical image segmentation, significantly enhancing performance on complex datasets [45]. Segmentation methodologies for hepatocellular carcinoma imaging were categorized by their clinical utility in surgical and radiological interventions, highlighting their impact on diagnosis and treatment outcomes [46]. Additionally, the impact of CADe/CADx systems on post-hepatic resection patient health was studied through simulations varying tumor characteristics, providing insights into their potential clinical benefits [47]. A systematic review on immediate post-ablation response in malignant hepatic tumors using fusion imaging systems evaluated clinical outcomes and technical metrics, contributing valuable insights into treatment efficacy [48]. Deep learning techniques for ultrasound image segmentation over the past five years were critically reviewed, focusing on neural network architectures tailored for handling low-contrast and blurry ultrasound images [49]. Lastly, GAN methodologies for synthesizing elastograms from B-mode ultrasound images were reviewed, emphasizing improvements in diagnostic quality and discussing challenges for pocket ultrasound applications [50].

Previous research shows that computational algorithms such as KNN, SVC, and MLP can predict drug permeability across the placental barrier with good performance, providing an alternative to animal testing [51, 52]. Tree-based ensemble models such as random forest and extra trees, combined with mol2vec fingerprints and SMOTE, also perform well in predicting blood–brain barrier permeability for drug repurposing in neurological diseases [53]. A review [54] covers methodologies for estimating age and gender from ECG data, highlights that elevated ECG age is linked to cardiovascular diseases and mortality, and discusses improvements and clinical applications of these estimates. Another study introduces the MEFood dataset for Middle Eastern food recognition, benchmarks various computer vision models, and finds that EfficientNet-V2 excels in both performance and resource efficiency, while providing comprehensive analysis and insights [55]. A further study evaluates neural networks for ECG-derived age estimation, addressing ECG acquisition parameters, ethnic diversity, and signal distortions, and finds that fine-tuning pre-trained networks and employing random cropping schemes improve performance and reduce data requirements.

A summary of the literature is given in Table 1.

The literature review reveals that the aforementioned methods each address individual distortions. However, an image may contain multiple anomalies: its quality cannot be determined by examining a single artifact, because the picture may contain a mixture of blur, blockiness, and ringing artifacts. A general framework that accounts for multiple distortions is therefore necessary.

Proposed methodology

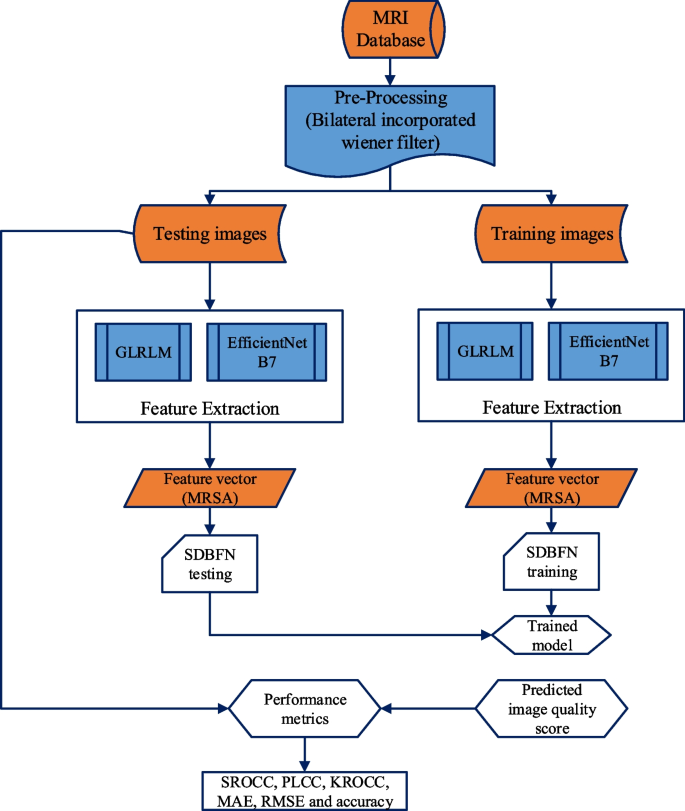

This article proposes a hybrid AI method for predicting image quality characteristics that combines optimization and neuro-fuzzy assessment with image features extracted from distortion measures. The proposed strategy incorporates quality measures into a hybrid AI model and handles the visual interpretation of poor-quality images. It also makes it possible to categorize images as high or low quality. The block diagram of the proposed NR-IQA algorithm is shown in Fig. 1.

MRI images are gathered from public databases, and images with varying noise levels are filtered using a bilateral incorporated Wiener filter. Features are then extracted from the pre-processed images using the GLRLM and EfficientNet B7 algorithms. MRSA is applied to the feature vector to select the optimal features. The overall image quality assessment value is calculated by combining the most important estimated characteristics of the image. Finally, SDBFN is applied to classify image quality as low or high. The parameters of SDBFN are optimized using the MRSA algorithm.

Pre-processing

Pre-processing is the initial stage, which removes unwanted noise from the input images. Bilateral incorporated Wiener filtering is an efficient edge-preserving smoothing technique that smooths the image while keeping its edges sharp. The bilateral component combines two Gaussian filters: the first operates in the spatial domain and the second in the intensity domain. The output of this non-linear filter is a weighted sum of the input. For a pixel \(n\), the output of the bilateral filter is given by (1):

where \(K(n)=\sum_{r\in\phi} a(\|r-n\|)\,b(\|I_r-I_n\|)\) is the normalization factor, \(\phi\) is the neighborhood (window) of pixel \(n\), \(I\) is the input image to be filtered, and \(n\) denotes the coordinates of the current pixel. The spatial (domain) kernel \(a\), which can be a Gaussian function, smooths differences in coordinates, and the range kernel \(b\), also typically a Gaussian function, smooths differences in intensity values. In addition, the Wiener filter is applied. Where the local contrast is high, the filter performs very little smoothing; where the contrast is low, it smooths the image more. Equation (2) describes how the Wiener filter works:

where \(\sigma^2=\frac{1}{S^2}\sum_{r=1}^{S}\sum_{n=1}^{S}a^2(r,n)-\frac{1}{S^2}\sum_{r=1}^{S}\sum_{n=1}^{S}f(r,n)\) is the local variance estimate over an \(S\times S\) neighborhood. The power spectral density of the noise can be determined by Fourier analysis of the noise autocorrelation \(P(r,n)\). The combined filters perform better than other image-enhancement filters.
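The pre-processing stage can be illustrated with a small NumPy sketch. This is not the authors' MATLAB implementation, and several details are our assumptions: the text does not say how the two filters are combined, so we assume a simple cascade (bilateral first, then Wiener); the Wiener step uses the local-mean and local-variance form popularized by MATLAB's `wiener2`, with the noise variance estimated as the average local variance; and the function names and parameter values (`radius`, `sigma_s`, `sigma_r`, `size`) are hypothetical.

```python
import numpy as np

def bilateral_filter(img, radius=2, sigma_s=2.0, sigma_r=0.1):
    """Brute-force bilateral filter for a 2-D float image (intensities ~[0, 1])."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    padded = np.pad(img, radius, mode="reflect")
    out = np.zeros_like(img)
    norm = np.zeros_like(img)  # K(n): per-pixel sum of weights
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # I_r: neighbour at offset (dy, dx), aligned with the centre pixel I_n
            neigh = padded[radius + dy:radius + dy + h, radius + dx:radius + dx + w]
            a = np.exp(-(dx * dx + dy * dy) / (2.0 * sigma_s ** 2))    # spatial kernel
            b = np.exp(-((neigh - img) ** 2) / (2.0 * sigma_r ** 2))  # range kernel
            out += a * b * neigh
            norm += a * b
    return out / norm

def box_mean(img, size):
    """Mean over a size x size window (size odd), with reflective borders."""
    pad = size // 2
    h, w = img.shape
    padded = np.pad(img, pad, mode="reflect")
    acc = np.zeros_like(img)
    for dy in range(size):
        for dx in range(size):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / (size * size)

def adaptive_wiener(img, size=3, noise_var=None):
    """Local-statistics Wiener filter: little smoothing where local variance is high."""
    img = np.asarray(img, dtype=float)
    mu = box_mean(img, size)
    var = np.maximum(box_mean(img ** 2, size) - mu ** 2, 0.0)
    if noise_var is None:
        noise_var = var.mean()  # assumed noise estimate: average local variance
    gain = np.maximum(var - noise_var, 0.0) / np.maximum(np.maximum(var, noise_var), 1e-12)
    return mu + gain * (img - mu)

def bilateral_wiener(img):
    """Assumed cascade: edge-preserving bilateral step, then adaptive Wiener step."""
    return adaptive_wiener(bilateral_filter(img))
```

On a noisy ramp image the cascade should bring the output closer to the clean ramp than the noisy input, and a uniform image should pass through unchanged. The brute-force loops keep the correspondence with (1) visible; a production version would use a vectorized or library implementation.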

Feature extraction

In this section, GLRLM and EfficientNet B7 are used to extract features from the pre-processed image. EfficientNet B7 extracts contrast, edge, sharpness, and other significant features. The seven GLRLM features are selected for their direct relevance to the task at hand, such as image classification or quality assessment, so that they capture essential aspects of image content. They are also chosen for their high discriminative power, which helps differentiate between textures and patterns and thereby improves the accuracy and robustness of the analysis. Limiting the GLRLM feature set to seven also keeps computation feasible within practical time constraints while minimizing redundancy and the risk of overfitting.

GLRLM features

Each entry of the Gray Level Run Length Matrix (GLRLM) counts the runs of a given gray level and a given length in a particular Region of Interest (ROI), where a run is a sequence of consecutive pixels with the same gray level. Seven GLRLM characteristics are extracted in this study: Short Run Emphasis (SRE), Low Gray Level Run Emphasis (LGLRE), Long Run Emphasis (LRE), Run Length Non-Uniformity (RLN), Gray Level Non-Uniformity (GLN), Run Percentage (RP), and High Gray Level Run Emphasis (HGRE). The features are defined in (3)-(9):

Given their form and historical development, all of the characteristics listed above belong to the same family of run-length statistics. Therefore, this work extracts all seven characteristics uniformly.
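The seven GLRLM features can be illustrated with a short NumPy sketch. Because equations (3)-(9) are not reproduced here, the code uses the commonly cited run-length definitions, each normalized by the total number of runs, with gray levels indexed from 1. The horizontal (0°) run direction, the quantization into a fixed number of gray levels, and the function names are our assumptions; the paper may average several directions or normalize differently.

```python
import numpy as np

def quantize(img, levels):
    """Map intensities in [0, 1] to integer gray levels 0 .. levels-1."""
    return np.clip((np.asarray(img, float) * levels).astype(int), 0, levels - 1)

def glrlm_0deg(q, levels):
    """Run-length matrix p[g, l-1]: number of horizontal runs of gray level g, length l."""
    q = np.asarray(q)
    p = np.zeros((levels, q.shape[1]))
    for row in q:
        value, length = row[0], 1
        for v in row[1:]:
            if v == value:
                length += 1
            else:
                p[value, length - 1] += 1
                value, length = v, 1
        p[value, length - 1] += 1  # close the last run of the row
    return p

def glrlm_features(p, n_pixels):
    """Seven run-length statistics, each normalized by the number of runs."""
    i = np.arange(1, p.shape[0] + 1)[:, None]  # gray levels, 1-based
    j = np.arange(1, p.shape[1] + 1)[None, :]  # run lengths
    n_runs = p.sum()
    return {
        "SRE": (p / j ** 2).sum() / n_runs,
        "LGLRE": (p / i ** 2).sum() / n_runs,
        "LRE": (p * j ** 2).sum() / n_runs,
        "RLN": (p.sum(axis=0) ** 2).sum() / n_runs,
        "GLN": (p.sum(axis=1) ** 2).sum() / n_runs,
        "RP": n_runs / n_pixels,
        "HGRE": (p * i ** 2).sum() / n_runs,
    }

# Example on a tiny 2 x 3 image with two gray levels
q = np.array([[0, 0, 1], [1, 1, 1]])
feats = glrlm_features(glrlm_0deg(q, levels=2), n_pixels=q.size)
```

In the example the first row contains a run of two 0s and a run of one 1, and the second row a single run of three 1s, so there are three runs over six pixels and RP is 0.5. For a real MRI slice, `quantize` would first map the normalized intensities to a small number of gray levels.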

EfficientNet B7 features

EfficientNet B7 is an advanced convolutional neural network architecture. Here, the ordinary Rectified Linear Unit (ReLU) in EfficientNet is replaced by the Leaky ReLU activation function. Whereas ReLU outputs 0 for negative inputs \(y\), Leaky ReLU outputs a very small linear component of \(y\). Equation (10) defines this activation function.

This function returns \(y\) if the input is positive but only a small fraction, \(0.01y\), if the input is negative, so it can also produce negative outputs. This small modification gives the left side of the curve a non-zero gradient, so neurons in that region no longer "die" (stop receiving gradient updates). The first step of the compound scaling approach is to determine, under a fixed resource constraint, the relationships between the different scaling dimensions of the baseline network. EfficientNet uses the MBConv (mobile inverted bottleneck) block first introduced in MobileNetV2, but uses it more extensively than MobileNetV2 because of its larger budget of floating-point operations (FLOPs). An MBConv block first expands and then shrinks the number of channels, and its shortcut connections link the bottleneck layers, which have far fewer channels than the expansion layers. Because the convolutions are depthwise separable, the computation is reduced by a factor of approximately \(L^{2}\) compared with standard convolutions, where \(L\) is the kernel size, i.e., the width and height of the 2D convolution window. Equation (11) gives the following mathematical definition of EfficientNet:

where the layer operator \(C_{y}\) is repeated \(T_{y}\) times in stage \(y\), and \(\left( P_{y}, Q_{y}, R_{y} \right)\) is the shape of the input tensor \(X\) of stage \(y\). The input images are resized from 256 × 256 to 224 × 224. To increase model accuracy, the network dimensions are scaled proportionally using the compound scaling rule in (12)-(13):

In (12), \(a\), \(b\), and \(c\) are the scaling coefficients for depth, width, and resolution, respectively. Equation (13) describes these factors for the different model levels. The features from GLRLM and EfficientNet B7 are then combined and passed to the feature selection stage.
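The three EfficientNet ideas above (the Leaky ReLU of (10), the saving from depthwise separable convolutions, and compound scaling) can be checked numerically with a few lines of NumPy. The 0.01 slope comes from the text. The cost model counts multiply-accumulates for a stride-1, same-padded layer, and the example layer sizes are hypothetical. The compound-scaling coefficients \(\alpha=1.2\), \(\beta=1.1\), \(\gamma=1.15\) are those reported for the EfficientNet-B0 baseline in the original EfficientNet paper; treating them as the \(a\), \(b\), \(c\) of (12) is our assumption, and the released B1 to B7 models use rounded configurations, so the formula only approximates them.

```python
import numpy as np

def leaky_relu(y, slope=0.01):
    """Equation (10): y for y > 0, otherwise slope * y."""
    y = np.asarray(y, dtype=float)
    return np.where(y > 0, y, slope * y)

def separable_saving(d_in, d_out, k, h=56, w=56):
    """Cost ratio of a standard k x k conv to a depthwise + 1 x 1 pointwise conv."""
    standard = h * w * d_in * d_out * k * k
    separable = h * w * d_in * k * k + h * w * d_in * d_out
    return standard / separable  # equals k^2 * d_out / (k^2 + d_out)

def compound_scale(phi, alpha=1.2, beta=1.1, gamma=1.15):
    """Depth, width, resolution and FLOPs multipliers for compound coefficient phi."""
    depth, width, resolution = alpha ** phi, beta ** phi, gamma ** phi
    flops = depth * width ** 2 * resolution ** 2  # (alpha * beta^2 * gamma^2) ** phi
    return depth, width, resolution, flops

print(leaky_relu([-2.0, 0.0, 3.0]))
print(separable_saving(32, 64, 3), separable_saving(32, 1024, 3))
print(compound_scale(2))
```

With a 3 × 3 kernel the saving approaches, but never exceeds, \(L^2 = 9\) as the number of output channels grows, which is the sense in which the reduction is "approximately \(L^2\)". Because \(\alpha\beta^2\gamma^2 \approx 1.92\), each unit increase of \(\phi\) roughly doubles the FLOPs.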

MRSA for feature selection

The MRSA is a metaheuristic optimization technique inspired by the natural behavior of reptiles. It operates by simulating the hunting behavior of multiple reptiles in search of prey. In MRSA, a population of candidate solutions, represented as potential prey locations, undergoes iterative improvement through a series of local and global search strategies. Local search mechanisms mimic the movement patterns of individual reptiles exploring their immediate surroundings, aiming to exploit promising regions of the search space. Global search mechanisms emulate collective behaviors such as group hunting or migration, facilitating exploration of diverse regions to avoid local optima. By dynamically balancing exploration and exploitation, MRSA enhances convergence towards optimal solutions across various types of optimization problems. Its effectiveness lies in its ability to adaptively adjust search intensities based on problem characteristics, making it suitable for complex optimization challenges where both precision and robustness are essential.

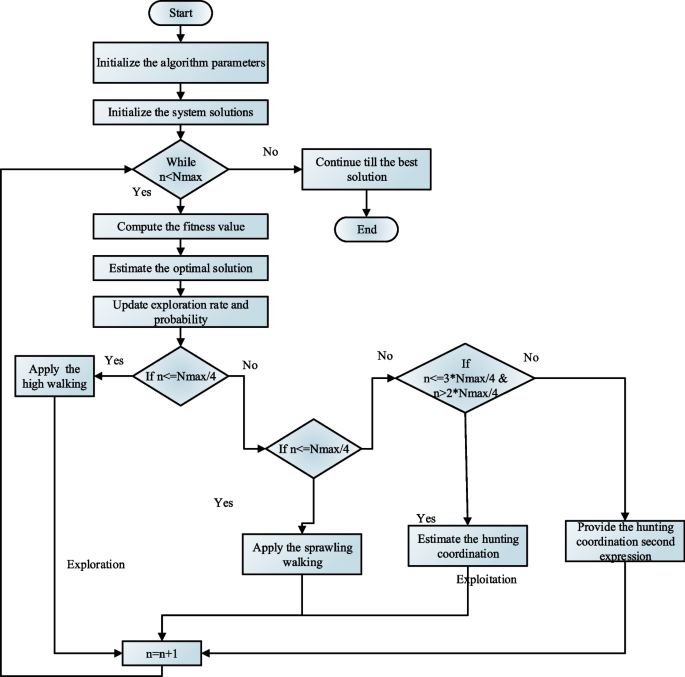

The flowchart of MRSA is illustrated in Fig. 2. MRSA is a swarm-based optimization method inspired by the social behavior, foraging tactics, and encircling style of crocodiles. Encircling takes place during the exploration stage and hunting during the exploitation stage. Before the iterations start, the extracted features are encoded in a population of candidate solutions, given by (14).

where \(d\) is the problem dimension, \(M\) is the number of crocodiles, and \(f_{j,k}\) is the position of the \(j^{th}\) crocodile in the \(k^{th}\) dimension. Each candidate solution in (14) is generated at random using (15).

where \(Upper\,P\) and \(lower\,P\) are the upper and lower bounds of the search space, and \(rand\) is a random number between 0 and 1.

Feature exploration

Encircling behavior distinguishes MRSA's global search, during which the crocodiles move over a high and wide area. The search strategy is determined by the current iteration number \(n\). When \(n \le 0.25N_{\max}\), MRSA performs high walking; when \(0.25N_{\max} < n \le 0.5N_{\max}\), it performs belly walking. These two movements usually prevent the crocodiles from getting close to the prey. However, because together they scan the entire solution space, the crocodiles eventually locate the broad area of the prey, and the search can then move on to the next stage. This phase lasts for the first half of the total number of iterations. The specific mathematical formulae for this process are given in (16).

where \(r\) is a random number between 0 and 1, \(best_{k}(n)\) is the position of the best crocodile in the \(k^{th}\) dimension after \(n\) iterations, and \(N_{\max}\) is the maximum number of iterations. Equation (17) defines \(\delta_{j,k}\), the hunting operator of the \(j^{th}\) reptile in the \(k^{th}\) dimension. The sensitive parameter \(b\), set to 0.1, governs the search precision.

The reduce function \(H_{j,k}(n)\), which is used to shrink the explored region, is determined using (18).

where \(r_{2}\) is a random integer between 1 and \(M\), and \(x_{r_{2},k}\) is the position of the \(r_{2}^{th}\) crocodile in the \(k^{th}\) dimension. The randomly decreasing probability ratio \(D(n)\), which takes values between 2 and -2, is given in (19)

where \(q_{j,k}\) is the percentage difference between the position of the best crocodile and that of the current crocodile, computed as in (20).

where \(M(f_{j})\), the average position of the crocodile \(f_{j}\), is given in (21),

Feature exploitation

In this phase, the MRSA search corresponds to the local exploitation (hunting) process, which has two strategies: coordination and cooperation. Once encircling is complete, the crocodiles have almost located the prey, and their hunting strategy makes it easier for them to reach it. When \(0.5N_{\max} < n \le 0.75N_{\max}\), MRSA performs coordinated foraging; when \(0.75N_{\max} < n \le N_{\max}\), it uses a joint (cooperative) foraging strategy given by (22).

where \(best_{k}(n)\) is the best position obtained so far, \(\delta_{j,k}\) is the hunting operator of the \(j^{th}\) crocodile in the \(k^{th}\) dimension, and \(H_{j,k}(n)\) is the reduce function used to shrink the region under investigation. MRSA first generates the initial population at random in the search space and then, at each iteration, chooses the search strategy according to the iteration number. The flowchart of the MRSA method is shown in Fig. 2.
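The four-phase search described above can be sketched as a binary feature-selection loop. The sketch follows the update rules of the original single-objective Reptile Search Algorithm as we understand them (high walking, belly walking, hunting coordination, hunting cooperation); since equations (14)-(22) are not reproduced here, it is not the authors' MRSA. Several choices are our assumptions: a feature is selected when its position exceeds 0.5, candidates are replaced only when they improve (greedy acceptance), \(D(n)\) is modeled as \(2r(1-n/N_{\max})\) with \(r\in\{-1,0,1\}\), the defaults `alpha=0.1` and `beta=0.005` are illustrative, and the two objectives (error and fraction of selected features) are combined by a weighted sum in the hypothetical helper `weighted_fitness` rather than by a Pareto front.

```python
import numpy as np

def weighted_fitness(error, n_selected, n_total, w=0.9):
    """Scalarized two-objective cost: classification error and feature ratio."""
    return w * error + (1 - w) * n_selected / n_total

def rsa_feature_selection(fitness, n_features, n_agents=20, n_iter=100,
                          alpha=0.1, beta=0.005, seed=0):
    """Reptile-search-style optimizer over positions in [0, 1].

    A feature is selected when its position exceeds 0.5 (assumed binarization);
    `fitness` maps a boolean mask to a cost to minimize.
    """
    rng = np.random.default_rng(seed)
    lb, ub, eps = 0.0, 1.0, 1e-10
    pos = lb + rng.random((n_agents, n_features)) * (ub - lb)  # random init, cf. (15)
    scores = np.array([fitness(p > 0.5) for p in pos])
    best, best_score = pos[scores.argmin()].copy(), scores.min()

    for t in range(1, n_iter + 1):
        d = 2 * rng.integers(-1, 2) * (1 - t / n_iter)  # assumed form of D(n)
        for j in range(n_agents):
            q = alpha + (pos[j] - pos[j].mean()) / (best * (ub - lb) + eps)  # cf. (20)-(21)
            delta = best * q                             # hunting operator
            r2 = rng.integers(n_agents)
            h = (best - pos[r2]) / (best + eps)          # reduce function
            r = rng.random(n_features)
            if t <= 0.25 * n_iter:                       # high walking
                new = best * -delta * beta - h * r
            elif t <= 0.5 * n_iter:                      # belly walking
                new = best * pos[rng.integers(n_agents)] * d * r
            elif t <= 0.75 * n_iter:                     # hunting coordination
                new = best * q * r
            else:                                        # hunting cooperation
                new = best - delta * eps - h * r
            new = np.clip(new, lb, ub)
            s = fitness(new > 0.5)
            if s < scores[j]:                            # greedy acceptance
                pos[j], scores[j] = new, s
                if s < best_score:
                    best, best_score = new.copy(), s
    return best > 0.5, best_score

# Toy usage: recover a known mask (stand-in for a real classifier error)
target = np.array([1, 0, 1, 1, 0, 0, 1, 0, 0, 1], dtype=bool)
mask, score = rsa_feature_selection(
    lambda m: weighted_fitness(np.mean(m != target), m.sum(), m.size), n_features=10)
print(mask.astype(int), round(float(score), 3))
```

In practice the `fitness` callable would wrap a classifier's validation error on the combined GLRLM and EfficientNet B7 features; the toy target mask only exercises the mechanics of the four phases.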

SDBFN algorithm

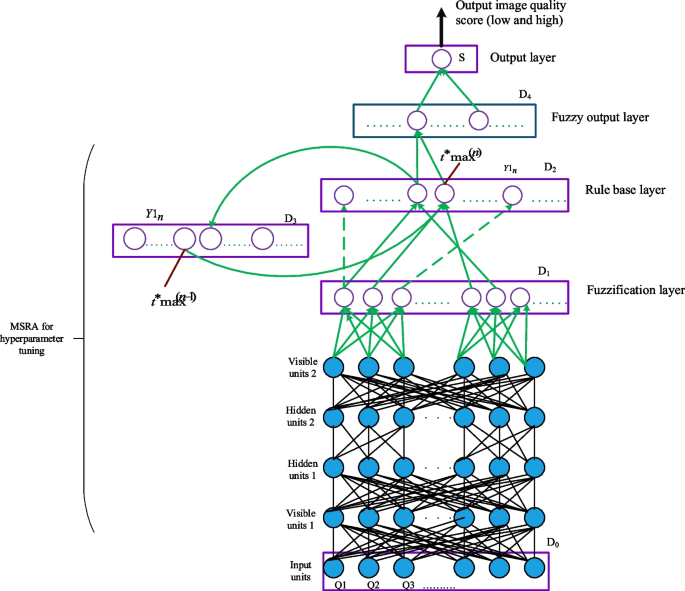

The best features selected by MRSA are fed to the SDBFN algorithm. The proposed SDBFN method combines a deep belief network with a fuzzy learning approach. The architecture of the proposed MRSA-based SDBFN model is illustrated in Fig. 3. The model consists of five layers: the input layer, the fuzzification layer, the rule layer, the membership function (fuzzy output) layer, and the defuzzification (output) layer. The first layer, the input layer, passes the incoming data variables to the next stage, where they are fuzzified. Let \(Q_{m}\) be the vector of input image features. Equation (23) expresses the input and output of the weight vectors.

where \(t^{ * } r\) denotes the neuron rule. At the start, the visible units are set to a training vector. All hidden units are then updated in parallel, given the visible units, using (24):

where \(\delta\) is the logistic sigmoid function and \(b_{n}\) is the bias of the hidden units. Next, the visible units are updated in parallel, given the hidden units, using (25),

where \(c_{n}\) is the bias of the visible units. This is referred to as the reconstruction step. The hidden units are then updated again in parallel, given the reconstructed visible units, in the same way as in (24). Finally, the weights are updated using (26),
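The update sequence above matches one step of contrastive divergence (CD-1), the usual way each layer of a deep belief network is pre-trained. The sketch below assumes binary units, a learning rate `lr`, and plain Python lists; it is an illustration of CD-1 under those assumptions, not the authors' MATLAB implementation, and the name `cd1_update` is hypothetical.

```python
import math
import random

def sigmoid(z):
    """Logistic sigmoid, the function written as delta in (24) and (25)."""
    return 1.0 / (1.0 + math.exp(-z))

def cd1_update(v0, W, b, c, lr=0.1, rng=random):
    """One CD-1 step. W[i][j] links visible i to hidden j; b = hidden biases (b_n),
    c = visible biases (c_n). W, b, c are updated in place; returns the reconstruction."""
    n_vis, n_hid = len(v0), len(b)
    # (24): hidden probabilities given the training vector, all units in parallel
    ph0 = [sigmoid(b[j] + sum(v0[i] * W[i][j] for i in range(n_vis))) for j in range(n_hid)]
    h0 = [1.0 if rng.random() < p else 0.0 for p in ph0]   # sample binary hidden states
    # (25): reconstruction of the visible units given the sampled hidden states
    v1 = [sigmoid(c[i] + sum(h0[j] * W[i][j] for j in range(n_hid))) for i in range(n_vis)]
    # Hidden probabilities again, given the reconstruction
    ph1 = [sigmoid(b[j] + sum(v1[i] * W[i][j] for i in range(n_vis))) for j in range(n_hid)]
    # (26): data correlations minus reconstruction correlations
    for i in range(n_vis):
        for j in range(n_hid):
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
    for j in range(n_hid):
        b[j] += lr * (ph0[j] - ph1[j])
    for i in range(n_vis):
        c[i] += lr * (v0[i] - v1[i])
    return v1
```

Starting from zero weights and biases, every probability is 0.5, so a single step with `v0 = [1, 0, 1]` moves the weights of active visible units up by 0.025 and those of inactive units down by the same amount.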

After one belief layer has been trained, the next layer is "stacked" on top of it, using the final learned layer as its input. The new visible layer is initialized with a training vector, and the existing weights and biases are used to assign values to the units in the already learned layers. The procedure above is then used to train the new layer, and the whole process is repeated until the intended stopping condition is satisfied. The fuzzification layer consists of a group of spatially arranged neurons that form a fuzzy representation of each incoming trained variable: the second layer fuzzifies the inbound data before the third layer gathers it. Equation (27) shows how \(D_{1} (n)\) computes the normalized fuzzy distance between a new fuzzy instance \(Q_{{1f^{ * } }}\) and the \(n^{th}\) previously stored pattern.

where \(p\) is the order of the norm. For p-norms, \(\left\| v \right\|_{w + c} \le \left\| v \right\|_{w}\) for any \(v \in \Re^{n} ,w \ge 1,c \ge 0\); that is, the norm never increases as its order grows. The activation levels of the rule neurons are given by (28),
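Because (27) and (28) are not reproduced here, the sketch below assumes the normalized fuzzy distance used in evolving fuzzy neural networks, \(D = \left\| x - w \right\|_{p} / \left\| x + w \right\|_{p}\) on membership vectors in [0, 1], and a rule-neuron activation of \(1 - D\). Both forms and the function names are assumptions. For non-negative inputs \(|x_k - w_k| \le x_k + w_k\), so \(D\) and the activation both stay in [0, 1], consistent with the stated range of the activations.

```python
def p_norm(v, p):
    """Standard p-norm of a vector, p >= 1."""
    return sum(abs(x) ** p for x in v) ** (1.0 / p)

def normalized_fuzzy_distance(x, w, p=1):
    """Assumed form of (27): distance between fuzzy input x and stored pattern w."""
    num = p_norm([a - b for a, b in zip(x, w)], p)
    den = p_norm([a + b for a, b in zip(x, w)], p)
    return num / den if den > 0 else 0.0

def rule_activation(x, w, p=1):
    """Assumed form of (28): activation 1 - D, which lies in [0, 1]."""
    return 1.0 - normalized_fuzzy_distance(x, w, p)
```

For `x = [0.2, 0.8]` and `w = [0.4, 0.6]` with p = 1, the distance is 0.4 / 2.0 = 0.2 and the activation is 0.8. The norm property stated above can be checked numerically: for `[3, 4]` the 1-, 2- and 3-norms are 7, 5 and about 4.50.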

where \(Y1_{n} ,e_{n} \in [0,1]\). The third layer is the rule base layer, which contains flexible rule nodes. These nodes represent the membership functions, which can be adjusted during learning. Each rule node is described by two vectors of connection weights, which are corrected by a hybrid supervised/unsupervised learning technique. The initial value of \(R_{n}^{ * }\) defaults to 0.3. At each time step \(x\), the updates in (29) and (30) are applied:

In (29) and (30), \(R_{n}^{ * }\) denotes the sensitivity level and \(\mu\) denotes the number of neurons. The activation of the winning neuron is propagated using the saturated linear function given in (31).
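The saturated linear function of (31) clips its input to [0, 1]. The sketch below pairs it with a winner selection that uses the default sensitivity level of 0.3 from the text. Treating "no node reaches the sensitivity level" as the trigger for adding a rule node is an assumption borrowed from evolving fuzzy neural networks, and `winning_rule` is a hypothetical helper.

```python
def satlin(z):
    """Saturated linear function, cf. (31): 0 below 0, identity on [0, 1], 1 above 1."""
    return min(1.0, max(0.0, z))

def winning_rule(activations, sensitivity=0.3):
    """Return (index, propagated activation) of the most active rule node.
    Returns (None, 0.0) when no node reaches the sensitivity level R*;
    an evolving network would then add a new rule node (assumed behaviour)."""
    k = max(range(len(activations)), key=lambda i: activations[i])
    if activations[k] < sensitivity:
        return None, 0.0
    return k, satlin(activations[k])
```

For activations `[0.2, 1.3, 0.9]` the second node wins and its stimulation is clipped to 1.0; for `[0.1, 0.2]` no node reaches 0.3.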

The fourth layer, also called the fuzzy output layer, represents the fuzzy constraints on the output variables. The header is used to verify the data stream in this case: the system accepts a packet if its confidence exceeds the cutoff level and otherwise blocks its transmission over the network. The weight vectors \(D_{1}\) and \(D_{2}\) of the winning neuron, together with the error vector \(\hat{E}_{r}^{ * }\), are updated using (32) and (33).

In (32) and (33), \(\kappa_{1}\) and \(\kappa_{2}\) are fixed learning rates. The fifth layer is the output layer, which performs defuzzification and computes the numerical value of the output variable. The maximum activation of the rule node is passed to the next level. The proposed MRSA-based SDBFN model is shown in Fig. 3.
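Equations (32) and (33) are not reproduced here, so the following is only a hedged sketch of updates in the style of evolving fuzzy neural networks: the winning node's input-side weights \(D_{1}\) move toward the fuzzified input at rate \(\kappa_{1}\), and its output-side weights \(D_{2}\) are corrected by the error vector at rate \(\kappa_{2}\). The centre-of-gravity defuzzification is one common choice and is likewise assumed; all function names are hypothetical.

```python
def update_input_weights(d1, x, kappa1):
    """Move the winning node's input-side weights D1 toward the fuzzified input x."""
    return [w + kappa1 * (xi - w) for w, xi in zip(d1, x)]

def update_output_weights(d2, err, activation, kappa2):
    """Correct the output-side weights D2 with the error vector, scaled by the node's activation."""
    return [w + kappa2 * e * activation for w, e in zip(d2, err)]

def defuzzify(memberships, centers):
    """Centre-of-gravity defuzzification: membership-weighted mean of output-set centres."""
    total = sum(memberships)
    return sum(m * c for m, c in zip(memberships, centers)) / total if total > 0 else 0.0
```

For instance, with memberships 0.2 and 0.8 in output sets centred at 0 (low quality) and 1 (high quality), the crisp score is 0.8.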

The hyperparameters of the SDBFN model (learning rate, weights, fuzzy rules, etc.) are optimized by MRSA, using the fitness function evaluated in its exploration and exploitation phases. Once the optimal tuning point is reached, the model predicts image quality as a high- or low-quality score.

Performance metrics

NR-IQA algorithms are assessed by the degree of correlation between predicted and ground-truth ratings. The literature commonly uses the Pearson linear correlation coefficient (PLCC), the Spearman rank order correlation coefficient (SROCC), and the Kendall rank order correlation coefficient (KROCC) to describe the strength of this correlation, together with error measures such as the Mean Absolute Error (MAE). PLCC measures the linear correlation between two data series. The PLCC between two vectors \(p\) and \(q\) of the same length \(N\) is defined in (34)

where \(p_{k}\) and \(q_{k}\) denote the \(k^{th}\) elements of \(p\) and \(q\), respectively, and \(\overline{p} = \frac{1}{N}\sum\nolimits_{k = 1}^{N} {p_{k} }\) and \(\overline{q} = \frac{1}{N}\sum\nolimits_{k = 1}^{N} {q_{k} }\). The SROCC between these vectors is defined in (35)
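Assuming (34) is the usual Pearson formula, \(\sum_{k}(p_{k} - \overline{p})(q_{k} - \overline{q}) / \sqrt{\sum_{k}(p_{k} - \overline{p})^{2}\sum_{k}(q_{k} - \overline{q})^{2}}\), it can be computed from predicted scores `p` and mean observed scores `q` as follows. The function name `plcc` is our own.

```python
import math

def plcc(p, q):
    """Pearson linear correlation coefficient between equal-length score vectors."""
    n = len(p)
    if n != len(q) or n < 2:
        raise ValueError("p and q must have the same length N >= 2")
    p_bar = sum(p) / n
    q_bar = sum(q) / n
    cov = sum((pk - p_bar) * (qk - q_bar) for pk, qk in zip(p, q))
    sp = math.sqrt(sum((pk - p_bar) ** 2 for pk in p))
    sq = math.sqrt(sum((qk - q_bar) ** 2 for qk in q))
    return cov / (sp * sq)
```

A perfectly linear relation such as `plcc([1, 2, 3, 4], [2, 4, 6, 8])` gives 1.0, and reversing the order of `q` gives -1.0.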

where \(S_{k}\) denotes the difference between the ranks of \(p_{k}\) and \(q_{k}\). KROCC is calculated using (36).
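A minimal sketch of SROCC, assuming (35) takes the common form \(1 - 6\sum_{k} S_{k}^{2} / (N(N^{2} - 1))\). That form is exact only when there are no tied scores, so the ranking helper below assumes distinct values; the function names are our own.

```python
def ranks(values):
    """1-based ranks of the values; assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        r[idx] = rank
    return r

def srocc(p, q):
    """Spearman rank-order correlation: 1 - 6 * sum(S_k^2) / (N * (N^2 - 1))."""
    n = len(p)
    rp, rq = ranks(p), ranks(q)
    s2 = sum((a - b) ** 2 for a, b in zip(rp, rq))   # S_k = rank difference
    return 1.0 - 6.0 * s2 / (n * (n * n - 1))
```

Swapping two adjacent items, as in `srocc([1, 2, 3, 4], [1, 3, 2, 4])`, gives rank differences 0, 1, 1, 0 and therefore 1 - 12/60 = 0.8.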

where \(X\) is the number of concordant pairs and \(Y\) is the number of discordant pairs formed from \(p_{k}\) and \(q_{k}\). In addition, MAE and RMSE are the most widely used error measures; RMSE and MAE are computed using (37) and (38), respectively.
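The remaining measures can be sketched as follows, assuming (36) is the usual Kendall form \((X - Y) / (N(N - 1)/2)\) without ties, and (37) and (38) are the standard RMSE and MAE. The function names are our own.

```python
import math
from itertools import combinations

def krocc(p, q):
    """Kendall rank-order correlation: (X - Y) / (N(N-1)/2), no ties assumed."""
    concordant = discordant = 0
    for i, j in combinations(range(len(p)), 2):
        s = (p[i] - p[j]) * (q[i] - q[j])
        if s > 0:
            concordant += 1      # pair ordered the same way in p and q (X)
        elif s < 0:
            discordant += 1      # pair ordered oppositely (Y)
    n = len(p)
    return (concordant - discordant) / (n * (n - 1) / 2)

def rmse(p, q):
    """Root mean square error between predicted and observed scores."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)) / len(p))

def mae(p, q):
    """Mean absolute error between predicted and observed scores."""
    return sum(abs(a - b) for a, b in zip(p, q)) / len(p)
```

For `[1, 2, 3, 4]` against `[1, 3, 2, 4]`, five of the six pairs are concordant and one is discordant, so KROCC is 4/6. RMSE weights large errors more heavily than MAE: for `[1, 2, 3]` against `[1, 2, 5]`, RMSE is about 1.155 while MAE is about 0.667.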

Result and discussion

This section evaluates the effectiveness of the proposed NR-IQA model using data from the knee, breast, and brain MRI databases. All experiments are run in MATLAB R2019a on a system with 16 GB RAM and an Intel(R) Core(TM) i7-9750 CPU operating at 2.6 GHz.

Dataset description

The MRI knee [32], MRI brain [33], and MRI breast [34] databases are used in this research for NR-IQA analysis. The effectiveness of the suggested method on these datasets is examined through training, validation, and testing: each collection is split into a 75% training portion, a 15% validation portion, and a 10% testing portion. The performance of the suggested model on the training, validation, and test datasets is shown in Table 2. To tune the SDBFN hyperparameters with MRSA, the proposed NR-IQA model is trained on the training dataset and evaluated on the validation dataset. The trained SDBFN model is then evaluated on the test dataset to determine how well it generalizes.
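The 75/15/10 split can be reproduced with a seeded shuffle, as in the sketch below. The shuffling strategy, the seed, and the name `split_dataset` are assumptions; the text does not state how images were assigned to each portion.

```python
import random

def split_dataset(items, train=0.75, val=0.15, seed=0):
    """Shuffle a copy of items and split it into train/validation/test portions.
    The test portion receives whatever remains (10% with the defaults)."""
    items = list(items)
    random.Random(seed).shuffle(items)   # seeded so the split is reproducible
    n_train = int(round(train * len(items)))
    n_val = int(round(val * len(items)))
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]
```

For 100 images this yields 75 training, 15 validation, and 10 test images with no overlap between portions.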

Performance comparison

The quality prediction algorithm outputs a quality score for each image. To assess the proposed model, the predicted values are compared with the mean actual scores. The effectiveness of the proposed NR-IQA model is assessed using SROCC, PLCC, accuracy, MAE, KROCC, and RMSE, all computed from the predicted scores and the mean actual scores. A higher correlation between the predicted and mean observed scores indicates that the model is more precise and more consistent with human judgments. A strong IQA model should therefore show a high correlation and a small mean absolute error between its predicted quality scores and the mean measured scores. The closer the correlation coefficients (SROCC and PLCC) are to 1, the more closely the predicted quality score agrees with the mean measured score; likewise, the RMSE of an effective method should be close to 0. In the present investigation, the performance of the suggested model is compared with that of existing NR-IQA models, including LHL-IQA [24], DF-CNN-IQA [25], DMOS-SVM [26], ENMIQA [23], DNNGP [27], and DeepRPN-BIQA [28]. Figure 4 gives the PLCC values for a) the MRI knee dataset, b) the MRI brain dataset, and c) the MRI breast dataset.

The comparison in Fig. 4 between the suggested method and existing models under various noise conditions provides insight into the effectiveness of the proposed No-Reference Image Quality Assessment (NR-IQA) method for MRI images. The plots show that the proposed method consistently outperforms conventional methods across different noise variances. Specifically, it achieves average Pearson Linear Correlation Coefficient (PLCC) values of 0.99 for the MRI knee dataset, 0.98 for the MRI brain dataset, and 0.981 for the MRI breast dataset across noise variances ranging from 0.2 to 1.0, whereas conventional methods yield lower PLCC values at every noise variance. These results underscore the superior performance of the proposed NR-IQA method in assessing the quality of MRI images and highlight its potential for enhancing diagnostic and analytical processes in medical imaging applications. Figure 5 presents the SROCC values for a) the MRI knee dataset, b) the MRI brain dataset, and c) the MRI breast dataset.

The Spearman Rank Order Correlation Coefficient (SROCC) results in Fig. 5 further emphasize the superiority of the proposed strategy over existing Image Quality Assessment (IQA) methods for MRI images. The plots show that the suggested approach consistently outperforms traditional techniques across varying noise levels. Specifically, the proposed method achieves average SROCC values of 0.991 for the MRI knee dataset, 0.993 for the MRI brain dataset, and 0.981 for the MRI breast dataset at noise levels ranging from 0.2 to 1.0, whereas traditional methods yield lower SROCC values at every noise variance. Together with the higher PLCC values, these findings corroborate the overall superior performance of the proposed NR-IQA method compared with existing IQA approaches. The results indicate that the proposed method correlates more strongly with human perception of image quality and is more robust in assessing image quality in the presence of noise, highlighting its potential for enhancing medical imaging applications. Figure 6 shows the KROCC values for a) the MRI knee dataset, b) the MRI brain dataset, and c) the MRI breast dataset.

Figure 6 compares the Kendall Rank Order Correlation Coefficient (KROCC) of the proposed method and traditional techniques across various noise levels, providing further evidence of the proposed method's superior performance in Image Quality Assessment (IQA) for MRI images. The plots show that the proposed method consistently outperforms traditional techniques across different noise levels. Specifically, it achieves mean KROCC values of 0.989 for the MRI knee dataset, 0.91 for the MRI brain dataset, and 0.95 for the MRI breast dataset at noise levels ranging from 0.2 to 1.0, whereas conventional methods yield lower KROCC values at every noise level. These results demonstrate the robustness and reliability of the proposed NR-IQA method in assessing the quality of MRI images, highlighting its potential to enhance diagnostic and analytical processes in medical imaging applications. Figure 7 presents the RMSE values for a) the MRI knee dataset, b) the MRI brain dataset, and c) the MRI breast dataset.

Figure 7 displays the RMSE values of the proposed model and the existing approaches. The proposed strategy has mean RMSE values of 0.0021 for the MRI knee dataset, 0.015 for the MRI brain dataset, and 0.025 for the MRI breast dataset at noise levels between 0.2 and 1.0. These values satisfy the requirements for a successful NR-IQA model and are lower than those of the other systems. The proposed method can also locate the different kinds of noise present in images, which suggests that the extracted texture and structural features are the main characteristics used to differentiate between high-quality and distorted images. Combining these extracted features yields accurate quality scores that closely match human perception. RMSE is always non-negative and equals zero only for perfect agreement, so a lower RMSE indicates better performance. Figure 8 gives the MAE values for a) the MRI knee dataset, b) the MRI brain dataset, and c) the MRI breast dataset.

Figure 8 compares the MAE values of the proposed approach with those of the existing methods at varying noise levels. For the suggested approach, the mean MAE values at noise levels between 0.2 and 1.0 are 0.02 for the MRI knee dataset, 0.09 for the MRI brain dataset, and 0.098 for the MRI breast dataset. The proposed method therefore achieves a significantly lower MAE than the earlier models: the conventional LHL-IQA [24], DF-CNN-IQA [25], DMOS-SVM [26], ENMIQA [23], DNNGP [27], and DeepRPN-BIQA [28] methods obtain higher error values than the developed model. This demonstrates the adequate performance of the proposed method for NR-IQA. A statistical analysis is included in this research. In addition, the quality of the MRI scanner images is improved with the hybrid filter method, and the images are classified as high or low quality with effective performance. A summary of the proposed method's performance is shown in Table 3.

Discussions

Our research presents a novel approach to No-Reference Image Quality Assessment (NR-IQ) in MRI images by integrating several established techniques to create a robust and efficient framework. By combining the Gray Level Run Length Matrix (GLRLM) and EfficientNet-B7 for feature extraction with the Multi-Objective Reptile Search Algorithm (MRSA) for optimal feature selection, and employing the Self-evolving Deep Belief Fuzzy Neural Network (SDBFN) for analysis, we leverage the complementary strengths of these methods. This integration not only addresses the limitations of existing methods but also achieves significant improvements in performance metrics, including a 20% enhancement in the Pearson Linear Correlation Coefficient (PLCC) and a 12% reduction in Root Mean Square Error (RMSE). Our approach also demonstrates effectiveness across various MRI datasets, with mean Mean Absolute Error (MAE) values of 0.02 for the MRI knee dataset, 0.09 for the MRI brain dataset, and 0.098 for the MRI breast dataset. This comprehensive methodology sets a new benchmark in NR-IQ assessment, highlighting its potential for advancing the field of MRI image quality analysis.

Conclusion

This article proposes a hybrid NR-IQA approach that combines artificial intelligence with an optimization method. Without a reference image, this hybrid combination enhances the clarity of any given medical image. The primary goal of this article is to enhance MRI scanner image quality without using a reference image, using a hybrid combination of the suggested systems. In this study, the GLRLM and EfficientNet-B7 networks extract features from distorted MRI images after training on several images. MRSA mainly increases the quality factor by selecting the optimal feature vector from the GLRLM and EfficientNet-B7 features. With the suggested strategy, the system can better distinguish between high- and low-quality images. It also offers high precision, simple computation, versatility, and other benefits. Metrics of the suggested approach, including quality factor, SROCC, PLCC, KROCC, MAE, and RMSE, can be computed on an MRI medical image database. The quality number is 20% higher than that of the existing approach, the PLCC shows superior performance compared with other techniques currently in use, and the RMSE decreased by 12% compared with existing methods. Further research will focus on multiple kinds of images and more advanced artificial intelligence-based methods for NR-IQA.

Future scope

Future research could explore enhancing the hybrid AI model by incorporating more advanced deep learning architectures such as transformers or graph neural networks to further improve feature extraction and prediction accuracy. Additionally, investigating the scalability of the proposed model across larger datasets and different types of MRI images could provide insights into its robustness and generalizability. Exploring the integration of domain-specific knowledge or additional modalities, such as functional MRI (fMRI) or diffusion tensor imaging (DTI), could extend the application of the model to broader clinical contexts. Moreover, conducting user studies and clinical trials to validate the model's performance in real-world scenarios would be crucial steps towards its adoption in clinical practice, potentially aiding radiologists in making more accurate diagnostic decisions.

Availability of data and materials

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Abbreviations

- IQA: Image Quality Assessment
- MRI: Magnetic Resonance Images
- NR-IQA: No-Reference Image Quality Assessment
- AI: Artificial Intelligence
- GLRLM: Gray Level Run Length Matrix
- SDBFN: Self-Evolving Deep Belief Fuzzy Neural Network
- RMSE: Root Mean Square Error
- SROCC: Spearman Rank Order Correlation Coefficient
- KROCC: Kendall Rank Order Correlation Coefficient
- MAE: Mean Absolute Error
- NR: No-Reference
- MRSA: Multi-Objective Reptile Search Algorithm
- RPN: Region Proposal Network
- TSPR: Two-Sided Pseudo Reference
- LRE: Long Run Emphasis
- RLN: Run Length Non-Uniformity
- GLN: Gray Level Non-Uniformity
- RP: Run Percentage
- HGRE: High Gray Level Run Emphasis
- ReLU: Rectified Linear Unit

References

Alseelawi N, Hazim HT, Salim ALRikabi HT. A novel method of multimodal medical image fusion based on hybrid approach of NSCT and DTCWT. Int J Online Biomed Eng. 2022;18(3). https://doi.org/10.3991/ijoe.v18i03.28011.

Ali S, Zhou F, Bailey A, Braden B, East JE, Lu X, Rittscher J. A deep learning framework for quality assessment and restoration in video endoscopy. Med Image Anal. 2021;68:101900. https://doi.org/10.1016/j.media.2020.101900.

Kumar A, Kumar A, Lingam V, Ashok J. Face detection using curvelet transform and PCA. Int J Pure Appl Math. 2018;119:1565–75. https://doi.org/10.1109/ICPR.2008.4760972.

Ding K, Ma K, Wang S, Simoncelli EP. Comparison of full-reference image quality models for optimization of image processing systems. Int J Comput Vision. 2021;129:1258–81. https://doi.org/10.1007/s11263-020-01419-7.

Zhang W, Ma K, Zhai G, Yang X. Uncertainty-aware blind image quality assessment in the laboratory and wild. IEEE Trans Image Process. 2021;30:3474–86. https://doi.org/10.1109/TIP.2021.3061932.

Geleijnse G, Veder LL, Hakkesteegt MM, Metselaar RM. The objective measurement and subjective perception of flexible ENT endoscopes’ image quality. J Image Sci Technol. 2022;66(3):030508–11.

Setiadi DRIM. PSNR vs SSIM: imperceptibility quality assessment for image steganography. Multimedia Tools and Applications. 2021;80(6):8423–44. https://doi.org/10.1007/s11042-020-10035-z.

Baig MA, Moinuddin AA, Khan E. PSNR of highest distortion region: an effective image quality assessment method. In: 2019 International Conference on Electrical, Electronics and Computer Engineering (UPCON). IEEE; 2019. p. 1–4. https://doi.org/10.1109/UPCON47278.2019.8980171.

Sara U, Akter M, Uddin MS. Image quality assessment through FSIM, SSIM, MSE and PSNR—a comparative study. Journal of Computer and Communications. 2019;7(3):8–18. https://doi.org/10.4236/jcc.2019.73002.

Kaplan S, Zhu YM. Full-dose PET image estimation from low-dose PET image using deep learning: a pilot study. J Digit Imaging. 2019;32(5):773–8. https://doi.org/10.1007/s10278-018-0150-3.

Kuo TY, Wei YJ, Wan KH. Color image quality assessment based on VIF. In: 2019 3rd International Conference on Imaging, Signal Processing and Communication (ICISPC). IEEE; 2019. p. 96–100. https://doi.org/10.1109/ICISPC.2019.8935651.

Jiang TX, Ng MK, Zhao XL, Huang TZ. Framelet representation of tensor nuclear norm for third-order tensor completion. IEEE Trans Image Process. 2020;29:7233–44. https://doi.org/10.1109/TIP.2020.3000349.

Loh WT, Bong DB. A generalized quality assessment method for natural and screen content images. IET Image Proc. 2021;15(1):166–79. https://doi.org/10.1049/ipr2.12016.

Zheng X, Jiang G, Yu M, Jiang H. Segmented spherical projection-based blind omnidirectional image quality assessment. IEEE Access. 2020;8:31647–59. https://doi.org/10.1109/ACCESS.2020.2972158.

Ferroukhi M, Ouahabi A, Attari M, Habchi Y, Taleb-Ahmed A. Medical video coding based on 2nd-generation wavelets: Performance evaluation. Electronics. 2019;8(1):88. https://doi.org/10.3390/electronics8010088.

Nizami IF, Rehman MU, Majid M, Anwar SM. Natural scene statistics model independent no-reference image quality assessment using patch based discrete cosine transform. Multimedia Tools and Applications. 2020;79:26285–304. https://doi.org/10.1007/s11042-020-09229-2.

Hammouche R, Attia A, Akhrouf S, Akhtar Z. Gabor filter bank with deep autoencoder based face recognition system. Expert Systems with Applications. 2022:116743. https://doi.org/10.1016/j.eswa.2022.116743.

Abdel-Hamid L. Retinal image quality assessment using transfer learning: Spatial images vs. wavelet detail subbands. Ain Shams Eng J. 2021;12(3):2799–807. https://doi.org/10.1016/j.asej.2021.02.010.

Varga D. No-reference video quality assessment based on the temporal pooling of deep features. Neural Process Lett. 2019;50(3):2595–608. https://doi.org/10.1007/s11063-019-10036-6.

Fantini I, Yasuda C, Bento M, Rittner L, Cendes F, Lotufo R. Automatic MR image quality evaluation using a Deep CNN: A reference-free method to rate motion artifacts in neuroimaging. Comput Med Imaging Graph. 2021;90:101897. https://doi.org/10.1016/j.compmedimag.2021.101897.

Czajkowska J, Juszczyk J, Piejko L, Glenc-Ambroży M. High-frequency ultrasound dataset for deep learning-based image quality assessment. Sensors. 2022;22(4):1478. https://doi.org/10.3390/s22041478.

Ryu J. Improved Image Quality Assessment by Utilizing Pre-Trained Architecture Features with Unified Learning Mechanism. Appl Sci. 2023;13(4):2682. https://doi.org/10.3390/app13042682.

Varga D. No-reference image quality assessment with convolutional neural networks and decision fusion. Appl Sci. 2021;12(1):101. https://doi.org/10.3390/app12010101.

Shanmugam A, Devi SR. Objective Edge Similarity Metric for denoising applications in MR images. Biocybernetics and Biomedical Engineering. 2020;40(1):574–82. https://doi.org/10.1016/j.bbe.2020.01.012.

Shanmugam A, Devi SR. A fuzzy model for noise estimation in magnetic resonance images. IRBM. 2020;41(5):261–6. https://doi.org/10.1016/j.irbm.2019.11.005.

Rajevenceltha J, Gaidhane VH. An efficient approach for no-reference image quality assessment based on statistical texture and structural features. Engineering Science and Technology, an International Journal. 2022;30:101039. https://doi.org/10.1016/j.jestch.2021.07.002.

Bagade JV, Singh K, Dandawate YH. No-reference image quality assessment based on distortion specific and natural scene statistics based parameters: a hybrid approach. Malaysian J Comp Sci. 2019;32(1):31–46. https://doi.org/10.22452/mjcs.vol32no1.3.

Obuchowicz R, Oszust M, Bielecka M, Bielecki A, Piórkowski A. Magnetic resonance image quality assessment by using non-maximum suppression and entropy analysis. Entropy. 2020;22(2):220. https://doi.org/10.3390/e22020220.

Chan KY, Lam HK, Jiang H. A genetic programming-based convolutional neural network for image quality evaluations. Neural Comput Appl. 2022;34(18):15409–27. https://doi.org/10.1007/s00521-022-07218-0.

Rehman M, Nizami IF, Majid M. DeepRPN-BIQA: Deep architectures with region proposal network for natural-scene and screen-content blind image quality assessment. Displays. 2022;71:102101. https://doi.org/10.1016/j.displa.2021.102101.

Hu J, Wang X, Shao F, Jiang Q. TSPR: Deep network-based blind image quality assessment using two-side pseudo reference images. Digital Signal Processing. 2020;106:102849. https://doi.org/10.1016/j.dsp.2020.102849.

Desai AD, Schmidt AM, Rubin EB, Sandino CM, Black MS, Mazzoli V, Stevens KJ, Boutin R, Ré C, Gold GE, Hargreaves BA. Skm-tea: A dataset for accelerated mri reconstruction with dense image labels for quantitative clinical evaluation. arXiv preprint. 2022;18(5):20–6. https://doi.org/10.34028/iajit/18/5/3.

Jain P, Santhanalakshmi S. Early detection of brain tumor and survival prediction using deep learning and an ensemble learning from radiomics images. In: 2022 IEEE 3rd Global Conference for Advancement in Technology (GCAT). IEEE; 2022. p. 1–9. https://doi.org/10.1109/GCAT55367.2022.9971932.

Witowski J, Heacock L, Reig B, Kang SK, Lewin A, Pysarenko K, Patel S, Samreen N, Rudnicki W, Łuczyńska E, Popiela T. Improving breast cancer diagnostics with deep learning for mri. Sci Transl Med. 2022;14(664):eabo4802. https://doi.org/10.1126/scitranslmed.abo4802.

Mohanty S, Dakua SP. Toward computing cross-modality symmetric non-rigid medical image registration. IEEE Access. 2022;10:24528–39.

Natarajan SK, Rathinasabapathy R, Narayanasamy J. Biometric user authentication system via fingerprints using novel hybrid optimization tuned deep learning strategy. Traitement du Signal. 2023;40(1):375–81. https://doi.org/10.18280/ts.400138.

Dakua SP. LV segmentation using stochastic resonance and evolutionary cellular automata. Int J Pattern Recognit Artif Intell. 2015;29(03):1557002.

Esfahani SS, Zhai X, Chen M, Amira A, Bensaali F, AbiNahed J, Dakua S, Younes G, Baobeid A, Richardson RA, Coveney PV. Lattice-Boltzmann interactive blood flow simulation pipeline. Int J Comput Assist Radiol Surg. 2020;15:629–39.

Zhai X, Chen M, Esfahani SS, Amira A, Bensaali F, Abinahed J, Dakua S, Richardson RA, Coveney PV. Heterogeneous system-on-chip-based Lattice-Boltzmann visual simulation system. IEEE Syst J. 2019;14(2):1592–601.

Zhai X, Amira A, Bensaali F, Al-Shibani A, Al-Nassr A, El-Sayed A, Eslami M, Dakua SP, Abinahed J. Zynq SoC based acceleration of the lattice Boltzmann method. Concurrency and Computation: Practice and Experience. 2019;31(17):e5184.

Han Z, Jian M, Wang GG. ConvUNeXt: An efficient convolution neural network for medical image segmentation. Knowl-Based Syst. 2022;253:109512.

Ansari MY, Mohanty S, Mathew SJ, Mishra S, Singh SS, Abinahed J, Al-Ansari A, Dakua SP. Towards developing a lightweight neural network for liver CT segmentation. In: International Conference on Medical Imaging and Computer-Aided Diagnosis. Singapore: Springer Nature Singapore; 2022. p. 27–35.

Jafari M, Auer D, Francis S, Garibaldi J, Chen X. DRU-Net: an efficient deep convolutional neural network for medical image segmentation. In: 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI). IEEE; 2020. p. 1144–8.

Ansari MY, Mangalote IAC, Masri D, Dakua SP. Neural network-based fast liver ultrasound image segmentation. In: 2023 international joint conference on neural networks (IJCNN). IEEE; 2023. p. 1–8.

Xie Y, Zhang J, Shen C, Xia Y. Cotr: Efficiently bridging cnn and transformer for 3d medical image segmentation. In: Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, September 27–October 1, 2021, Proceedings, Part III 24. Springer International Publishing; 2021. p. 171–80.

Ansari MY, Abdalla A, Ansari MY, Ansari MI, Malluhi B, Mohanty S, Mishra S, Singh SS, Abinahed J, Al-Ansari A, Balakrishnan S. Practical utility of liver segmentation methods in clinical surgeries and interventions. BMC Med Imaging. 2022;22(1):97.

Akhtar Y, Dakua SP, Abdalla A, Aboumarzouk OM, Ansari MY, Abinahed J, Elakkad MSM, Al-Ansari A. Risk assessment of computer-aided diagnostic software for hepatic resection. IEEE transactions on radiation and plasma medical sciences. 2021;6(6):667–77.

Rai P, Ansari MY, Warfa M, Al-Hamar H, Abinahed J, Barah A, Dakua SP, Balakrishnan S. Efficacy of fusion imaging for immediate post-ablation assessment of malignant liver neoplasms: A systematic review. Cancer Med. 2023;12(13):14225–51.

Ansari MY, Mangalote IAC, Meher PK, Aboumarzouk O, Al-Ansari A, Halabi O, Dakua SP. Advancements in Deep Learning for B-Mode Ultrasound Segmentation: A Comprehensive Review. IEEE Transactions on Emerging Topics in Computational Intelligence. 2024.

Ansari MY, Qaraqe M, Righetti R, Serpedin E, Qaraqe K. Unveiling the future of breast cancer assessment: a critical review on generative adversarial networks in elastography ultrasound. Front Oncol. 2023;13:1282536.

Chandrasekar V, Ansari MY, Singh AV, Uddin S, Prabhu KS, Dash S, Al Khodor S, Terranegra A, Avella M, Dakua SP. Investigating the use of machine learning models to understand the drugs permeability across placenta. IEEE Access. 2023;11:52726–39.

Ansari MY, Chandrasekar V, Singh AV, Dakua SP. Re-routing drugs to blood brain barrier: A comprehensive analysis of machine learning approaches with fingerprint amalgamation and data balancing. IEEE Access. 2022;11:9890–906.

Ansari MY, Qaraqe M, Charafeddine F, Serpedin E, Righetti R, Qaraqe K. Estimating age and gender from electrocardiogram signals: A comprehensive review of the past decade. Artificial Intelligence in Medicine. 2023. p. 102690.

Ansari MY, Qaraqe M. Mefood: A large-scale representative benchmark of quotidian foods for the middle east. IEEE Access. 2023;11:4589–601.

Ansari MY, Qaraqe M, Righetti R, Serpedin E, Qaraqe K. Enhancing ECG-based heart age: impact of acquisition parameters and generalization strategies for varying signal morphologies and corruptions. Frontiers in Cardiovascular Medicine. 2024;11:1424585.

Acknowledgements

The authors express sincere appreciation to the anonymous reviewers whose comments helped to improve the quality of the manuscript.

Funding

This research did not receive funding from any source.

Author information


Contributions

The manuscript was written through the contributions of all authors. Kavitha K.V.N and Ashok Shanmugam: Conceptualization, Methodology, Software; Ashok Shanmugam and Prianka Ramachandran Radhabai: Data curation, Writing – Original draft preparation; Kavitha K.V.N, Ashok Shanmugam and Agbotiname Lucky Imoize: Investigation; Kavitha K.V.N: Supervision; Prianka Ramachandran Radhabai, Ashok Shanmugam and Agbotiname Lucky Imoize: Writing-Reviewing and Editing. All authors have read and agreed to the published version of the manuscript.


Ethics declarations

Ethical approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Radhabai, P.R., KVN, K., Shanmugam, A. et al. An effective no-reference image quality index prediction with a hybrid Artificial Intelligence approach for denoised MRI images. BMC Med Imaging 24, 208 (2024). https://doi.org/10.1186/s12880-024-01387-1


DOI: https://doi.org/10.1186/s12880-024-01387-1